Solve Equations Of Type A*X = B Using dgtsv_ or sgtsv_

dgtsv_() expects the lower/main/upper diagonal of the tri-diagonal

matrix as separate arguments. You can pass the address of variable arrays with &.

All integer parameters are addresses of __CLPK_integer aka Int32

variables.

The right-hand side vector b is overwritten with the solution x to

the A x = b equation. The three vectors describing A are overwritten

as well, so you might want to make copies of the original data.

Example:

import Swift

import Accelerate

var mainDiagA = [ 1.0, 4.0, 4.0, 4.0, 4.0, 4.0, 4.0, 4.0, 4.0, 1.0 ]

var upperDiagA = [ 0.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0 ]

var lowerDiagA = [ 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 0.0 ]

var b = [0.0, -15.0, -15.0, -3.0, -3.0, 45.0, -12.0, -6.0, 0.0, 0.0 ]

var n = Int32(mainDiagA.count) // Order of matrix A

var nrhs = Int32(1) // Number of right-hand sides

var info = Int32(0) // Result code

dgtsv_(&n, &nrhs, &lowerDiagA, &mainDiagA, &upperDiagA, &b, &n, &info)

if info == 0 { // success

print(b)

// [0.0, -2.9185349611542732, -3.3258601553829075, 1.2219755826859044, -4.5620421753607099, 14.026193118756938, -6.5427302996670367, 0.14472807991120964, -0.036182019977802411, 0.0]

}

QR-Factorization in least square sense to solve A * w = b

I was able to find a solution to my problem and I want to share it with you.

The problem was not a programming error, but the paper provided an incorrect matrix (the Ai matrix) which is needed to solve the linear system of equations.

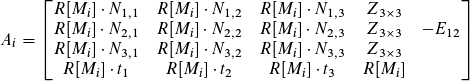

I tried to extract a system of linear equations from M*X - Y*N = 0 by myself using the characteristics of homogeneous transformation matrices and rotation matrices. I came up with following solution:

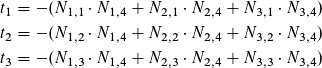

where

The vector bi provided in the paper is fine.

Since Prof. Ernst teaches at my university and I'm taking a course with him, I will try to make him aware of the mistake.

Make all values of an array negative or change sign

If you need a fast solution for large arrays then you can use cblas_dscal() ("scalar multiplication, double precision") from the Accelerate framework:

import Accelerate

var x = [1.0, 2.0, -3.0, 4.0, -5.0]

cblas_dscal(Int32(x.count), -1.0, &x, 1)

print(x) // [-1.0, -2.0, 3.0, -4.0, 5.0]

Remark: There is also cblas_sscal() for Float arrays ("single precision").

Performance comparison: Here is a quite simple performance comparison

of the various methods:

let N = 1_000_000 // # of array elements

let R = 100 // # of repetitions

func test1() {

var x = (0..<N).map { _ in drand48() }

let start = Date()

for _ in 0..<R {

for i in x.indices { x[i] = -x[i] }

}

let time = Date().timeIntervalSince(start)

print("x[i] = -x[i] ", time)

}

func test2() {

var x = (0..<N).map { _ in drand48() }

let start = Date()

for _ in 0..<R {

for i in x.indices { x[i].negate() }

}

let time = Date().timeIntervalSince(start)

print("x[i].negate() ", time)

}

func test3() {

var x = (0..<N).map { _ in drand48() }

let start = Date()

for _ in 0..<R {

x = x.map { -$0 }

}

let time = Date().timeIntervalSince(start)

print("x.map { -$0 } ", time)

}

func test4() {

var x = (0..<N).map { _ in drand48() }

let start = Date()

for _ in 0..<R {

cblas_dscal(Int32(x.count), -1.0, &x, 1)

}

let time = Date().timeIntervalSince(start)

print("cblas_dscal ", time)

}

test1()

test2()

test3()

test4()

Results (on a 3.5 GHz Intel iMac, compiled and run in Release configuration:)

x[i] = -x[i] 0.0492849946022034

x[i].negate() 0.0635690093040466

x.map { -$0 } 0.285757005214691

cblas_dscal 0.0506410002708435

As it turns out, cblas_dscal() has about the same speed as

an explicit loop with x[i] = -x[i] or x[i].negate(), but is

significantly faster than the map() method.

Of course the results may be different on a different hardware, or with

other array sizes.

As usual, start with the method which you are most familiar with and

look for faster methods if it turns out to be performance-critical.

Which algorithm do DGGEV or DSYGV Eigen solvers in LAPACK implement? Is it 'QZ' algorithm which MATLAB uses?

MATLAB used to have a list of LAPACK rountine used by eig function in its documentation, but decided to remove it for some reason.

Here is a screenshot of the table from the archived docs of R2009a:

I can't guarantee things haven't changed since then.

EDIT:

The doc page of the qz function had a similar table of LAPACK rountines:

For reference, you could also look at how other scientific frameworks implement this function:

Octave: Has the equivalent

qzfunction. Here is the source code: http://hg.octave.org/octave/file/tip/libinterp/corefcn/qz.ccSciPy: Also implements the generalized Schur decomposition. You can see it also ends up calling DGGES from LAPACK.

Julia: Here is a reference to Julia's implementation of Schur decomposition: https://github.com/JuliaLang/julia/blob/master/base/linalg/factorization.jl#L697, https://github.com/JuliaLang/julia/blob/master/base/linalg/lapack.jl#L3358

R: Here is an equivalent R package for generalized eigenvalue problem. You can inspect the source code: http://cran.r-project.org/web/packages/geigen/index.html

Solving a Tridiagonal Matrix using Eigen package in C++

The best you can do is to implement the Thomas algorithm yourself. Nothing can beat the speed of that. The algorithm is so simple, that nor Eigen nor BLAS will beat your hand-written code. In case you have to solve a series of matrices, the procedure is very well vectorizable.

https://en.wikipedia.org/wiki/Tridiagonal_matrix_algorithm

If you want to stick with standard libraries, the BLAS DGTSV (double precision) or SGTSV (single precision) is probably the best solution.

Related Topics

Why Do I Have to Unwrap a Weak Self

How to Disable a Button of a Nstoolbar of Macos X in Swift

Upload Multiple Images to Ftp Server in iOS

Swift Textview Align with English and Arabic Languages

Addperiodictimeobserver Is Not Called Every Millisecond

Macos App Sandboxing - Read Access to Referenced Files from Parsed Xml

How to Navigate from Initial UIviewcontroller to UIsplitviewcontroller in Swift

Literal Numbers in Floatingpoint Protocol

Synchronous Request Using Alamofire

Swift Package: Ld: Warning: Dylib Was Built for Newer Macos Version (11.0) Than Being Linked (10.15)

Lldb for Swift: Access Computed Property or Perform Function Call in Type Summary Python Script

Swift How to Make List Start at The Top with No Padding

Parse Migration Error to Mlabs and Heroku

How to Implement Applescript Support in a Swift Macos App

Swift Auto Completion Not Working in Xcode 6 Beta

Swift Unsafemutablepointer & Unsafemutablepointer<Unsafepointer<Sometype>>