How to continuously display the last several lines of a file

This will update every 2 seconds rather than whenever data is written to the file, but perhaps that's adequate:

```sh
watch 'head -n 2 job.sta; tail job.sta'
```
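If `watch` is not available, the same poll-and-redraw idea can be sketched in Python. This is a minimal sketch that assumes the file is small enough to re-read on every refresh. `render` and `main` are hypothetical helper names, and the 2-second default mirrors `watch`'s interval.

```python
import time
from collections import deque


def render(path, head=2, tail=10):
    """Return the first `head` and last `tail` lines of `path`, like
    `head -n 2 FILE; tail FILE` (tail prints 10 lines by default)."""
    with open(path, encoding="utf-8", errors="replace") as f:
        # zip() stops as soon as range() is exhausted, so only `head` lines are read
        first = [line for _, line in zip(range(head), f)]
    with open(path, encoding="utf-8", errors="replace") as f:
        # A bounded deque keeps only the last `tail` lines while streaming the file
        last = deque(f, maxlen=tail)
    return "".join(first) + "".join(last)


def main(path, interval=2.0):
    """Redraw the file every `interval` seconds (polling, not write events)."""
    while True:
        print("\033[2J\033[H", end="")  # ANSI escape: clear screen, cursor home
        print(render(path), end="", flush=True)
        time.sleep(interval)
```

Run it with `main("job.sta")` and stop it with Ctrl + C. Like the shell version, the head and tail overlap on files shorter than 12 lines.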

Get the last n lines or bytes of a huge file in Windows (like Unix's tail). Avoid time-consuming options

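How about this (reads the last 8 bytes, for demo purposes):

```powershell
$fpath = "C:\10GBfile.dat"
$fs = [IO.File]::OpenRead($fpath)
# Jump to 8 bytes before the end of the file
$fs.Seek(-8, 'End') | Out-Null
for ($i = 0; $i -lt 8; $i++)
{
    # ReadByte() returns each byte as an integer (0-255)
    $fs.ReadByte()
}
$fs.Close()
```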
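For comparison, the same seek-from-the-end idea can be sketched in Python. `last_bytes` is a hypothetical helper name; unlike the snippet above, it clamps the offset so that it also works on files shorter than `n` bytes.

```python
import os


def last_bytes(path, n):
    """Return up to the last `n` bytes of `path` without reading the rest.

    Only the final `n` bytes are read, so the cost does not grow with the
    size of the file.
    """
    with open(path, "rb") as f:
        size = f.seek(0, os.SEEK_END)  # seek() returns the new absolute position
        f.seek(max(size - n, 0))       # clamp: never seek before the start
        return f.read()
```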

UPDATE. To interpret the bytes as a string (but be sure to select the correct encoding; UTF-8 is used here):

```powershell
$N = 8
$fpath = "C:\10GBfile.dat"
$fs = [IO.File]::OpenRead($fpath)
# Position the stream N bytes before the end
$fs.Seek(-$N, [System.IO.SeekOrigin]::End) | Out-Null
$buffer = New-Object Byte[] $N
$fs.Read($buffer, 0, $N) | Out-Null
$fs.Close()
# Decode the raw bytes; the encoding must match the file's
[System.Text.Encoding]::UTF8.GetString($buffer)
```
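One caveat with decoding a fixed number of trailing bytes: the cut can land in the middle of a multi-byte UTF-8 character. A minimal Python sketch of one way to cope with that, assuming UTF-8 and a hypothetical helper name `last_text`, is to decode with `errors="ignore"`, which drops undecodable bytes such as the remainder of a split character instead of raising `UnicodeDecodeError`:

```python
import os


def last_text(path, n, encoding="utf-8"):
    """Decode the last `n` bytes of `path` as text.

    errors="ignore" drops bytes that cannot be decoded, e.g. the trailing
    part of a multi-byte character that was cut at the start of the slice.
    """
    with open(path, "rb") as f:
        size = f.seek(0, os.SEEK_END)
        f.seek(max(size - n, 0))
        data = f.read()
    return data.decode(encoding, errors="ignore")
```

For a file containing `éabc` ("é" is two bytes in UTF-8), the last 4 bytes decode to `abc` and the last 5 to `éabc`.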

UPDATE 2. To read the last M lines, we read the file in portions, moving backwards from the end, until the result contains at least M newline sequences:

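```powershell
$M = 3
$fpath = "C:\10GBfile.dat"
$result = ""
$seq = "`r`n"               # line separator; use "`n" for Unix-style files
$buffer_size = 10
$buffer = New-Object Byte[] $buffer_size
$fs = [IO.File]::OpenRead($fpath)
$offset = 0                 # bytes read so far, counted from the end

while ((([regex]::Matches($result, $seq)).Count -lt $M) -and ($offset -lt $fs.Length))
{
    # Step back one more buffer, but never past the start of the file
    $count = [int][Math]::Min($buffer_size, $fs.Length - $offset)
    $offset += $count
    $fs.Seek(-$offset, [System.IO.SeekOrigin]::End) | Out-Null
    $fs.Read($buffer, 0, $count) | Out-Null
    # Note: a multi-byte UTF-8 character split across two reads is decoded incorrectly
    $result = [System.Text.Encoding]::UTF8.GetString($buffer, 0, $count) + $result
}

$fs.Close()
($result -split $seq) | Select-Object -Last $M
```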

Try playing with a bigger $buffer_size: ideally it equals the expected average line length, which means fewer disk operations. Also pay attention to $seq: it could be \r\n or just \n.

This is very dirty code, without any error handling or optimizations.
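The same backward, chunk-by-chunk search can be sketched in Python. This is a sketch under stated assumptions: lines end in LF or CRLF, `tail_lines` is a hypothetical name, and lines are returned as undecoded bytes so that a chunk boundary never splits a multi-byte character during decoding (decode each line afterwards with the file's real encoding).

```python
import os


def tail_lines(path, m, chunk_size=4096):
    """Return the last `m` lines of `path` as a list of bytes objects.

    Reads fixed-size chunks backwards from the end until more than `m`
    newline bytes have been collected or the start of the file is reached.
    Counting LF bytes covers both LF and CRLF line endings.
    """
    if m <= 0:
        return []
    with open(path, "rb") as f:
        pos = f.seek(0, os.SEEK_END)
        data = b""
        # m + 1 newlines guarantee m complete lines, even with a trailing newline
        while pos > 0 and data.count(b"\n") <= m:
            step = min(chunk_size, pos)  # don't step before the start of the file
            pos -= step
            f.seek(pos)
            data = f.read(step) + data
    # splitlines() drops the trailing newline and handles CRLF
    return data.splitlines()[-m:]
```

As with `$buffer_size` in the PowerShell version, a larger `chunk_size` means fewer seek/read calls.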

Continuously print the last line of a file in a Linux terminal

Use the Unix command tail with the -f option. It continuously prints the contents of the file to the terminal as they are added to it.

Example:

```sh
tail -f emptyfile
```

You can terminate the tail process by pressing Ctrl + C.
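The core of `tail -f` (keep reading, and wait when the end of the file is reached) can be sketched in Python as a generator. This is a simplified sketch with a hypothetical name, `follow`: unlike GNU `tail -f`, it does not notice when the file is truncated or rotated.

```python
import time


def follow(f, interval=0.5):
    """Yield complete lines appended to the open text file `f`, like `tail -f`.

    The caller chooses the starting point, e.g. f.seek(0, 2) for the end.
    readline() returns "" at end of file, so we sleep and retry; a partial
    line that is still being written is buffered until its newline arrives.
    """
    pending = ""
    while True:
        chunk = f.readline()
        if not chunk:
            time.sleep(interval)
            continue
        pending += chunk
        if pending.endswith("\n"):
            yield pending
            pending = ""
```

For example, open the file, call `f.seek(0, 2)`, then loop over `follow(f)` and print each line; Ctrl + C stops it.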

What is the best way to read last lines (i.e. tail ) from a file using PHP?

Methods overview

Searching on the internet, I came across different solutions. I can group them into three approaches:

- naive ones that use the `file()` PHP function;
- cheating ones that run the `tail` command on the system;
- mighty ones that happily jump around an opened file using `fseek()`.

I ended up choosing (or writing) five solutions: a naive one, a cheating one and three mighty ones.

1. The most concise naive solution, using built-in array functions.
2. The only possible solution based on the `tail` command, which has a little big problem: it does not run if `tail` is not available, i.e. on non-Unix systems (Windows) or in restricted environments that don't allow system functions.
3. The solution in which single bytes are read from the end of the file, searching for (and counting) new-line characters, found here.
4. The multi-byte buffered solution optimized for large files, found here.
5. A slightly modified version of solution #4 in which the buffer length is dynamic, decided according to the number of lines to retrieve.
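To illustrate the idea behind solution #3 (single bytes read backwards while counting newlines), here is a minimal Python sketch. It is not the PHP code the list refers to; `tail_bytewise` is a hypothetical name, and it assumes LF or CRLF line endings.

```python
import os


def tail_bytewise(path, n):
    """Return the last `n` lines of `path` (as bytes), reading one byte at a
    time backwards from the end and counting newline characters.

    One read per byte keeps the code simple but makes it slow when many
    lines are requested.
    """
    if n <= 0:
        return []
    with open(path, "rb") as f:
        pos = f.seek(0, os.SEEK_END)
        # Ignore a newline that terminates the very last line
        if pos > 0:
            f.seek(pos - 1)
            if f.read(1) == b"\n":
                pos -= 1
        end = pos
        newlines = 0
        while pos > 0:
            f.seek(pos - 1)
            if f.read(1) == b"\n":
                newlines += 1
                if newlines == n:
                    break  # pos is now the first byte of the n-th line from the end
            pos -= 1
        f.seek(pos)
        return f.read(end - pos).splitlines()
```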

All solutions work, in the sense that they return the expected result from any file and for any number of lines we ask for (except for solution #1, which can break PHP memory limits in case of large files, returning nothing). But which one is better?

Performance tests

To answer the question I run tests. That's how these thing are done, isn't it?

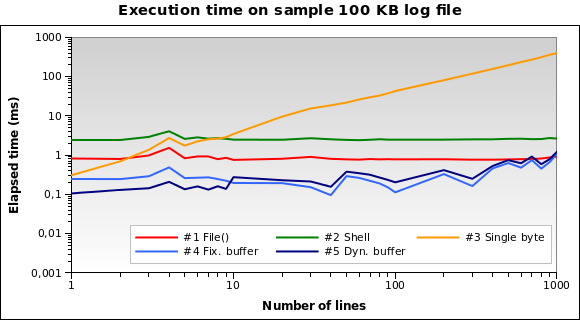

I prepared a sample 100 KB file joining together different files found in

my /var/log directory. Then I wrote a PHP script that uses each one of the

five solutions to retrieve 1, 2, .., 10, 20, ... 100, 200, ..., 1000 lines

from the end of the file. Each single test is repeated ten times (that's

something like 5 × 28 × 10 = 1400 tests), measuring average elapsed

time in microseconds.

I run the script on my local development machine (Xubuntu 12.04,

PHP 5.3.10, 2.70 GHz dual core CPU, 2 GB RAM) using the PHP command line

interpreter. Here are the results:

Solutions #1 and #2 seem to be the worst ones. Solution #3 is good only when we need to read a few lines. Solutions #4 and #5 seem to be the best ones.

Note how the dynamic buffer size can optimize the algorithm: execution time is a little smaller for few lines, because of the reduced buffer.

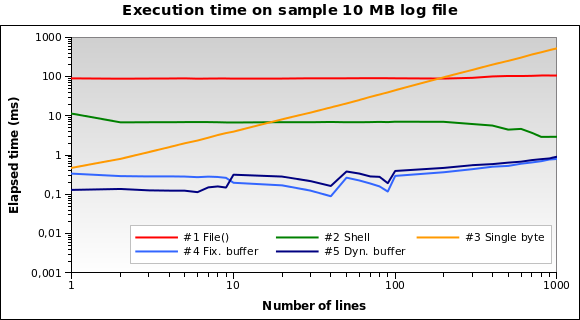

Let's try with a bigger file. What if we have to read a 10 MB log file?

Now solution #1 is by far the worse one: in fact, loading the whole 10 MB file

into memory is not a great idea. I run the tests also on 1MB and 100MB file,

and it's practically the same situation.

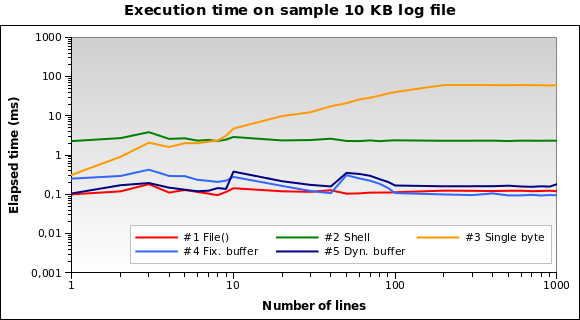

And for tiny log files? That's the graph for a 10 KB file:

Solution #1 is the best one now! Loading a 10 KB into memory isn't a big deal

for PHP. Also #4 and #5 performs good. However this is an edge case: a 10 KB log

means something like 150/200 lines...

You can download all my test files, sources and results here.

Final thoughts

Solution #5 is heavily recommended for the general use case: it works great with every file size and performs particularly well when reading a few lines.

Avoid solution #1 if you need to read files bigger than 10 KB.

Solutions #2 and #3 aren't the best ones in any test I ran: #2 never runs in less than 2 ms, and #3 is heavily influenced by the number of lines you ask for (it works quite well only with 1 or 2 lines).

PHP: How to read a file live that is constantly being written to

You need to loop with sleep:

```php
<?php
$file = '/home/user/yourfile.txt';
$lastpos = 0;

while (true) {
    usleep(300000); // poll every 0.3 s
    clearstatcache(false, $file); // otherwise filesize() may return a cached value
    $len = filesize($file);

    if ($len < $lastpos) {
        // file deleted or reset: continue from its new end
        $lastpos = $len;
    } elseif ($len > $lastpos) {
        $f = fopen($file, "rb");
        if ($f === false) {
            die();
        }
        fseek($f, $lastpos); // skip what was already printed
        while (!feof($f)) {
            $buffer = fread($f, 4096);
            echo $buffer;
            flush();
        }
        $lastpos = ftell($f);
        fclose($f);
    }
}
```

(Tested; it works.)
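The PHP loop translates fairly directly to Python. In this sketch (hypothetical names `read_new_data` and `follow_file`), one polling step is factored out so it can be tested on its own. Note that, unlike PHP's `filesize()`, `os.path.getsize` raises `FileNotFoundError` if the file is missing.

```python
import os
import time


def read_new_data(path, lastpos):
    """One polling step: return (new_bytes, new_lastpos).

    If the file shrank (deleted and recreated, or truncated), nothing is
    returned and the position is reset to the new size, as in the PHP loop.
    """
    size = os.path.getsize(path)
    if size <= lastpos:
        return b"", size
    with open(path, "rb") as f:
        f.seek(lastpos)  # skip what was already reported
        data = f.read()
        return data, f.tell()


def follow_file(path, interval=0.3):
    """Print new content forever, polling every `interval` seconds."""
    lastpos = 0
    while True:
        time.sleep(interval)
        data, lastpos = read_new_data(path, lastpos)
        if data:
            # errors="replace" tolerates a multi-byte character cut between polls
            print(data.decode("utf-8", errors="replace"), end="", flush=True)
```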

Retrieve the last 100 lines of logs

You can use the tail command as follows:

```sh
tail -100 <log file> > newLogfile
```

Now the last 100 lines will be in newLogfile.

EDIT:

As mentioned by twalberg, more recent versions of tail use this syntax:

```sh
tail -n 100 <log file> > newLogfile
```
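Where `tail` isn't available, the same redirection can be sketched in Python with a bounded `collections.deque`. This is a minimal sketch with a hypothetical name, `save_last_lines`: it streams through the whole file once instead of seeking from the end, so it keeps memory bounded but is slower than `tail` on very large logs.

```python
from collections import deque


def save_last_lines(src, dst, n=100):
    """Copy the last `n` lines of `src` into `dst`, like `tail -n 100 src > dst`.

    Iterating a binary file yields lines with their terminators; the deque
    discards older lines, so at most `n` lines are held in memory.
    """
    with open(src, "rb") as f:
        last = deque(f, maxlen=n)
    with open(dst, "wb") as out:
        out.writelines(last)
```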

How to monitor a text file and continuously output its content in a textbox?

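Check out the System.IO.FileSystemWatcher class:

```csharp
using System.IO;

public static class FileMonitor
{
    // Keep a reference to the watcher so it can be stopped or disposed later
    private static FileSystemWatcher watch;

    public static void Watch()
    {
        watch = new FileSystemWatcher();
        watch.Path = @"D:\tmp";
        watch.Filter = "file.txt";
        watch.NotifyFilter = NotifyFilters.LastAccess | NotifyFilters.LastWrite; // more options are available
        watch.Changed += new FileSystemEventHandler(OnChanged);
        watch.EnableRaisingEvents = true;
    }

    // Called on a thread-pool thread unless SynchronizingObject is set,
    // so marshal back to the UI thread (e.g. with Invoke) before updating a textbox
    private static void OnChanged(object source, FileSystemEventArgs e)
    {
        if (e.FullPath == @"D:\tmp\file.txt")
        {
            // do stuff
        }
    }
}
```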

Edit: if you know some details about the file, you can choose the most efficient way to get the last line. For example, when you read the file, you could wipe out what you've read, so that the next time it's updated you just grab whatever is there and output it. Or, if you know that one line is added at a time, your code can immediately jump to the last line of the file. Etc.

Tail docker logs to see recent records, not all

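Please read `docker logs --help` for the available options. Try the command below, which starts from the last 10 lines (`--tail 10`) and then keeps following new output (`-f`). More details here.

```sh
docker logs -f --tail 10 container_name
```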

Unix tail equivalent command in Windows PowerShell

Use the -Wait parameter with Get-Content, which displays lines as they are added to the file. This feature was present in PowerShell v1, but for some reason it is not well documented in v2.

Here is an example:

```powershell
Get-Content -Path "C:\scripts\test.txt" -Wait
```

Once you run this, update and save the file, and you will see the changes in the console.
