Calculating weighted mean and standard deviation

R provides weighted mean. In fact, ?weighted.mean shows this example:

## GPA from Siegel 1994

wt <- c(5, 5, 4, 1)/15

x <- c(3.7,3.3,3.5,2.8)

xm <- weighted.mean(x, wt)

One more step:

v <- sum(wt * (x - xm)^2)

Calculate Weighted Average and Weighted Standard Deviation in DAX

Weighted Average and Standard deviation can be implemented in DAX according to their mathematical definition.

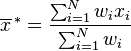

Assuming we have a table with the columns Weight and Value the formula for the Weighted Average is

WAvg =

VAR Num = SUMX( Samples, Samples[Weight] * Samples[Value] )

VAR Den = SUM( Samples[Weight] )

RETURN DIVIDE( Num, Den )

and the formula for the Weighted Standard Deviation is

WStdDev =

VAR WAvg = [WAvg]

VAR Num = SUMX( Samples, Samples[Weight] * (Samples[Value] - Wavg)^2 )

VAR Den = SUM( Samples[Weight] )

VAR WVar = DIVIDE( Num, Den )

RETURN SQRT(WVar)

Edit:

if I understand your new request, the Weight is the number of rows with the same Set Value, that is to be used for each of the Actual Value. Then, since there are two pairs of columns, I assume that the requirement is to have a set of measures per each couple of columns.

The formula requires to add a count of the number of rows per each group of Set Value, to be used as weight. I imported the sample table as table "V"

Weighted average for Set Value 1 and Actual Value 1

WAvg1 =

VAR Num =

SUMX(

ALL( V ),

CALCULATE( COUNTROWS( V ), ALLEXCEPT( V, V[Set Value 1] ) ) * V[Actual Value 1]

)

VAR Den =

SUMX(

ALL( V ),

CALCULATE( COUNTROWS( V ), ALLEXCEPT( V, V[Set Value 1] ) )

)

RETURN

DIVIDE( Num, Den )

Weighted average for Set Value 2 and Actual Value 2

WAvg2 =

VAR Num =

SUMX(

ALL( V ),

CALCULATE( COUNTROWS( V ), ALLEXCEPT( V, V[Set Value 2] ) ) * V[Actual Value 2]

)

VAR Den =

SUMX(

ALL( V ),

CALCULATE( COUNTROWS( V ), ALLEXCEPT( V, V[Set Value 2] ) )

)

RETURN

DIVIDE( Num, Den )

Weighted standard deviation for Set Value 1 and Actual Value 1

WStdDev1 =

VAR Num =

SUMX(

ALL( V ),

VAR WAvg = [WAvg1]

RETURN

CALCULATE( COUNTROWS( V ), ALLEXCEPT( V, V[Set Value 1] ) ) * ( V[Actual Value 1] - WAvg ) ^ 2

)

VAR Den =

SUMX(

ALL( V ),

CALCULATE( COUNTROWS( V ), ALLEXCEPT( V, V[Set Value 1] ) )

)

VAR WVariance =

DIVIDE( Num, Den )

RETURN

SQRT( WVariance )

Weighted standard deviation for Set Value 2 and Actual Value 2

WStdDev2 =

VAR Num =

SUMX(

ALL( V ),

VAR WAvg = [WAvg2]

RETURN

CALCULATE( COUNTROWS( V ), ALLEXCEPT( V, V[Set Value 2] ) ) * ( V[Actual Value 2] - WAvg ) ^ 2

)

VAR Den =

SUMX(

ALL( V ),

CALCULATE( COUNTROWS( V ), ALLEXCEPT( V, V[Set Value 2] ) )

)

VAR WVariance =

DIVIDE( Num, Den )

RETURN

SQRT( WVariance )

Applying these formulas to the sample table we get these results

How do I calculate the standard deviation between weighted measurements?

I just found this wikipedia page discussing data of equal significance vs weighted data. The correct way to calculate the biased weighted estimator of variance is

,

,

though the following, on-the-fly implementation, is more efficient computationally as it does not require calculating the weighted average before looping over the sum on the weighted differences squared

.

.

Despite my skepticism, I tried both and got the exact same results.

Note, be sure to use the weighted average

.

.

Getting weighted average and standard deviation on several columns in Pandas

You could use EOL's NumPy-based code

to calculate weighted averages and standard deviation. To use this in a Pandas groupby/apply operation, make weighted_average_std return a DataFrame:

import numpy as np

import pandas as pd

def weighted_average_std(grp):

"""

Based on http://stackoverflow.com/a/2415343/190597 (EOL)

"""

tmp = grp.select_dtypes(include=[np.number])

weights = tmp['Weight']

values = tmp.drop('Weight', axis=1)

average = np.ma.average(values, weights=weights, axis=0)

variance = np.dot(weights, (values - average) ** 2) / weights.sum()

std = np.sqrt(variance)

return pd.DataFrame({'mean':average, 'std':std}, index=values.columns)

np.random.seed(0)

df = pd.DataFrame({

"Date": pd.date_range(start='2018-01-01', end='2018-01-03 18:00:00', freq='6H'),

"Weight": np.random.uniform(3, 5, 12),

"V1": np.random.uniform(10, 15, 12),

"V2": np.random.uniform(10, 15, 12),

"V3": np.random.uniform(10, 15, 12)})

df.index = df["Date"]

df_agg = df.groupby(pd.Grouper(freq='1D')).apply(weighted_average_std).unstack(-1)

print(df_agg)

yields

mean std

V1 V2 V3 V1 V2 V3

Date

2018-01-01 12.105253 12.314079 13.566136 1.803014 1.725761 0.679279

2018-01-02 13.223172 12.534893 11.860456 1.709583 0.950338 1.153895

2018-01-03 13.782625 12.013557 12.105231 0.969099 1.189149 1.249064

How can I calculate weighted standard errors and plot them in a bar plot?

There isn't (as far as I know) a built-in R function to calculate the standard error of a weighted mean, but it is fairly straightforward to calculate - with some assumptions that are probably valid in the case you describe.

See, for instance:

https://en.wikipedia.org/wiki/Weighted_arithmetic_mean#Standard_error

Standard error of the weighted mean

If the elements used to calculate the weighted mean are samples from populations that all have the same variance v, then the variance of the weighted sample mean is estimated as:

var_m = v^2 * sum( wnorm^2 ) # wnorm = weights normalized to sum to 1

And the standard error of the weighted mean is equal to the square root of the variance.

sem = sqrt( var_m )

So, we need to calculate the sample variance from the weighted data.

Weighted variance

The weighted population variance (or biased sample variance) is calculated as:

pop_v = sum( w * (x-mean)^2 ) / sum( w )

However, if (as in the case you describe), we are working with samples taken from the population, rather then with the population itself, we need to make an adjustment to obtain an unbiased sample variance.

If the weights represent the frequencies of observations underlying each of the elements used to calculate the weighted mean & variance, then the adjustment is:

v = pop_v * sum( w ) / ( sum( w ) -1 )

However, this is not the case here, as the weights are the total frequenceis of 911 calls for each policeman, not the calls for each beat. So in this case the weights correspond to the reliabilities of each element, and the adjustment is:

v = pop_v * sum( w )^2 / ( sum( w )^2 - sum( w^2) )

weighted.var and weighted.sem functions

Putting all this together, we can define weighted.var and weighted.sem functions, similar to the base R weighted.mean function (note that several R packages, for instance "Hmisc", already include more-versatile functions to calculate the weighted variance):

weighted.var = function(x,w,type="reliability") {

m=weighted.mean(x,w)

if(type=="frequency"){ return( sum(w*(x-m)^2)/(sum(w)-1) ) }

else { return( sum(w*(x-m)^2)*sum(w)/(sum(w)^2-sum(w^2)) ) }

}

weighted.sem = function(x,w,...) { return( sqrt(weighted.var(x,w,...)*sum(w^2)/sum(w)^2) ) }

applied to 911 call data in the question

In the case of the question, the elements from which we want to calculate the weighted mean and weighted sem correspond to the proportions of calls in each beat, for each policeman.

So (finally...):

props = t(apply(df,1,function(row) row[-(1:3)]/row[3]))

wmean_props = apply(props,2,function(col) weighted.mean(col,w=df[,3]))

wsem_props = apply(props,2,function(col) weighted.sem(col,w=df[,3]))

Weighted standard deviation in NumPy

How about the following short "manual calculation"?

def weighted_avg_and_std(values, weights):

"""

Return the weighted average and standard deviation.

values, weights -- Numpy ndarrays with the same shape.

"""

average = numpy.average(values, weights=weights)

# Fast and numerically precise:

variance = numpy.average((values-average)**2, weights=weights)

return (average, math.sqrt(variance))

Related Topics

How to Count How Many Values Per Level in a Given Factor

Geom_Point() and Geom_Line() for Multiple Datasets on Same Graph in Ggplot2

Why Does Rendering a PDF from Rmarkdown Require Closing Rstudio Between Renders

Ggplot Graphing of Proportions of Observations Within Categories

How to Use a MACro Variable in R? (Similar to %Let in Sas)

Assign New Data Point to Cluster in Kernel K-Means (Kernlab Package in R)

Dynamically Add Function to R6 Class Instance

Replace Na with 0 in a Data Frame Column

How to Create a List of Vectors in Rcpp

Conditionally Display Block of Markdown Text Using Knitr

Adding Time to Posixct Object in R

Delete Columns Where All Values Are 0

How to Set Unique Row and Column Names of a Matrix When Its Dimension Is Unknown