VNFaceObservation BoundingBox Not Scaling In Portrait Mode

VNFaceObservation bounding boxes are normalized to the processed image. From the documentation:

The bounding box of detected object. The coordinates are normalized to the dimensions of the processed image, with the origin at the image's lower-left corner.

So you can use a simple calculation, like the one below, to find the correct size and frame for a detected face:

```swift
let boundingBox = observation.boundingBox
let size = CGSize(width: boundingBox.width * imageView.bounds.width,
                  height: boundingBox.height * imageView.bounds.height)
let origin = CGPoint(x: boundingBox.minX * imageView.bounds.width,
                     y: (1 - observation.boundingBox.minY) * imageView.bounds.height - size.height)
```

Then you can set the CAShapeLayer's frame like this:

```swift
layer.frame = CGRect(origin: origin, size: size)
```
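The calculation above can be wrapped in a small pure function so it can be checked without a device. This is a minimal sketch, assuming the image is drawn to fill `imageView.bounds` exactly (no aspect-fit bars). The function name `viewRect(forNormalized:in:)` is hypothetical, not a Vision API.

```swift
import Foundation

// Hypothetical helper (not a Vision API). Converts a Vision-normalized rect
// (origin at the lower-left, values in 0...1) into a UIKit-style rect
// (origin at the top-left, values in points). Assumes the image is drawn
// to fill `viewSize` exactly, with no aspect-fit bars.
func viewRect(forNormalized box: CGRect, in viewSize: CGSize) -> CGRect {
    // Width and height scale directly with the view size.
    let size = CGSize(width: box.width * viewSize.width,
                      height: box.height * viewSize.height)
    // x scales directly. y is flipped: (1 - minY) * height is the box's
    // bottom edge measured from the top, so subtracting the box height
    // gives its top edge.
    let origin = CGPoint(x: box.minX * viewSize.width,
                         y: (1 - box.minY) * viewSize.height - size.height)
    return CGRect(origin: origin, size: size)
}

// A face occupying the lower-left part of the image...
let box = CGRect(x: 0.25, y: 0.0, width: 0.25, height: 0.5)
// ...ends up in the bottom half of a 400x800 view.
let frame = viewRect(forNormalized: box, in: CGSize(width: 400, height: 800))
```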

Convert Vision boundingBox from VNFaceObservation to rect to draw on image

You have to translate and scale the bounding box according to the image.

Example

```swift
func drawVisionRequestResults(_ results: [VNFaceObservation]) {
    print("face count = \(results.count) ")
    // removeMask() and drawLayer(in:) are the app's own helpers on its preview view, not UIKit API
    previewView.removeMask()
    // Flip vertically: y -> height - y (Vision's origin is bottom-left, UIKit's is top-left)
    let transform = CGAffineTransform(scaleX: 1, y: -1).translatedBy(x: 0, y: -self.view.frame.height)
    // Scale the normalized (0...1) rect up to the view's size in points
    let translate = CGAffineTransform.identity.scaledBy(x: self.view.frame.width, y: self.view.frame.height)
    for face in results {
        // The coordinates are normalized to the dimensions of the processed image, with the origin at the image's lower-left corner.
        let facebounds = face.boundingBox.applying(translate).applying(transform)
        previewView.drawLayer(in: facebounds)
    }
}
```
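The two transforms above are applied in sequence: first the unit rect is scaled up to the view size, then it is flipped vertically. `CGAffineTransform(scaleX: 1, y: -1).translatedBy(x: 0, y: -H)` sends a point's y to H - y. Below is a minimal sketch of the same pipeline written as plain arithmetic, so each step can be checked in isolation. The helper names `scaled(_:to:)` and `flippedVertically(_:height:)` are hypothetical.

```swift
import Foundation

// Hypothetical helper. Step 1: stretch a normalized rect (0...1) to the view size.
// Same result as applying CGAffineTransform.identity.scaledBy(x: W, y: H).
func scaled(_ rect: CGRect, to size: CGSize) -> CGRect {
    CGRect(x: rect.minX * size.width,
           y: rect.minY * size.height,
           width: rect.width * size.width,
           height: rect.height * size.height)
}

// Hypothetical helper. Step 2: mirror inside a container of height H.
// Same result as CGAffineTransform(scaleX: 1, y: -1).translatedBy(x: 0, y: -H),
// which sends every y to H - y, so the old maxY edge becomes the new minY edge.
func flippedVertically(_ rect: CGRect, height: CGFloat) -> CGRect {
    CGRect(x: rect.minX,
           y: height - rect.maxY,
           width: rect.width,
           height: rect.height)
}

let viewSize = CGSize(width: 300, height: 600)
// A face near the top of the image (Vision's y grows upward).
let faceBox = CGRect(x: 0.5, y: 0.75, width: 0.25, height: 0.125)
let faceBounds = flippedVertically(scaled(faceBox, to: viewSize), height: viewSize.height)
```

The order matters. The flip is expressed in points, using the view height, so it has to run after the scale. That is why the original code calls `.applying(translate)` before `.applying(transform)`.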

Incorrect frame of boundingBox with VNRecognizedObjectObservation

I use something like this:

```swift
let width = view.bounds.width
let height = width * 16 / 9
let offsetY = (view.bounds.height - height) / 2
let scale = CGAffineTransform.identity.scaledBy(x: width, y: height)
let transform = CGAffineTransform(scaleX: 1, y: -1).translatedBy(x: 0, y: -height - offsetY)
let rect = prediction.boundingBox.applying(scale).applying(transform)
```

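This assumes portrait orientation, a 16:9 aspect ratio, and `.imageCropAndScaleOption = .scaleFill`. It also assumes the frame is displayed at the view's full width and centered vertically, which is what `offsetY` accounts for.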

Credits: The transform code was taken from this repo: https://github.com/Willjay90/AppleFaceDetection
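The snippet fits the camera frame to the view's width and centers it vertically, so every box is shifted by `offsetY`. Below is a minimal sketch of the same arithmetic as a function, with the 16:9 ratio turned into a parameter. `letterboxedRect(forNormalized:viewSize:frameAspect:)` is a hypothetical name, and the sketch keeps the original's assumptions: portrait orientation, and `.scaleFill`, so the normalized coordinates span the whole frame.

```swift
import Foundation

// Hypothetical helper. Maps a Vision-normalized rect into a view that shows
// the camera frame at full view width, centered vertically (bars above and below).
// `frameAspect` is the frame's width:height, e.g. 9:16 for a portrait 1080x1920 frame.
func letterboxedRect(forNormalized box: CGRect,
                     viewSize: CGSize,
                     frameAspect: CGSize = CGSize(width: 9, height: 16)) -> CGRect {
    let width = viewSize.width
    let height = width * frameAspect.height / frameAspect.width  // on-screen frame height
    let offsetY = (viewSize.height - height) / 2                 // empty band above the frame
    // Same as scaledBy(x: width, y: height) followed by
    // CGAffineTransform(scaleX: 1, y: -1).translatedBy(x: 0, y: -height - offsetY),
    // which sends y to height + offsetY - y; the box's maxY edge becomes its new minY.
    return CGRect(x: box.minX * width,
                  y: height + offsetY - box.maxY * height,
                  width: box.width * width,
                  height: box.height * height)
}

let viewSize = CGSize(width: 90, height: 200)
let box = CGRect(x: 0.5, y: 0.25, width: 0.25, height: 0.5)
let rect = letterboxedRect(forNormalized: box, viewSize: viewSize)
```

With a 90x200 view, the 16:9 frame is 160 points tall and starts 20 points below the top of the view, so every box is pushed down by those 20 points.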

Face detection swift vision kit

Hopefully you were able to use VNDetectFaceRectanglesRequest to detect faces. There are many ways to show rectangles around them, but the simplest is to use a CAShapeLayer: draw one layer on top of your image for each face you detected.

Suppose you have a VNDetectFaceRectanglesRequest like the one below:

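```swift
let request = VNDetectFaceRectanglesRequest { [unowned self] request, error in
    if let error = error {
        // Something is not working as expected
        print("Face detection failed: \(error)")
    } else {
        // Some faces may have been detected.
        // handleFaces updates UIKit layers, so make sure this runs on the main thread.
        self.handleFaces(with: request)
    }
}

let handler = VNImageRequestHandler(ciImage: ciImage, options: [:])
do {
    try handler.perform([request])
} catch {
    // perform(_:) throws if the requests could not be performed
    print("Failed to perform request: \(error)")
}
```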

You can implement a simple method called handleFaces that uses each VNFaceObservation's boundingBox to draw a CAShapeLayer for every detected face.

```swift
func handleFaces(with request: VNRequest) {
    imageView.layer.sublayers?.forEach { layer in
        layer.removeFromSuperlayer()
    }
    guard let observations = request.results as? [VNFaceObservation] else {
        return
    }
    observations.forEach { observation in
        let boundingBox = observation.boundingBox
        let size = CGSize(width: boundingBox.width * imageView.bounds.width,
                          height: boundingBox.height * imageView.bounds.height)
        let origin = CGPoint(x: boundingBox.minX * imageView.bounds.width,
                             y: (1 - observation.boundingBox.minY) * imageView.bounds.height - size.height)
        let layer = CAShapeLayer()
        layer.frame = CGRect(origin: origin, size: size)
        layer.borderColor = UIColor.red.cgColor
        layer.borderWidth = 2
        imageView.layer.addSublayer(layer)
    }
}
```

More info can be found in the GitHub repo iOS-11-by-Examples.
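`handleFaces` scales by `imageView.bounds`, which is only right when the image fills the whole view, for example with `.scaleToFill` or when the view has the same aspect ratio as the image. With `.scaleAspectFit`, the image is centered between empty bars, so the boxes have to be mapped into the displayed image rect instead. AVFoundation's `AVMakeRect(aspectRatio:insideRect:)` computes that rect. The sketch below reimplements the same idea in plain Swift so the math can be checked; `aspectFitRect(imageSize:in:)` and `faceFrame(forNormalized:imageSize:viewBounds:)` are hypothetical names.

```swift
import Foundation

// Hypothetical helper. Largest rect with the image's aspect ratio that fits
// inside `bounds`, centered (what a .scaleAspectFit image view displays).
func aspectFitRect(imageSize: CGSize, in bounds: CGRect) -> CGRect {
    let scale = min(bounds.width / imageSize.width,
                    bounds.height / imageSize.height)
    let size = CGSize(width: imageSize.width * scale,
                      height: imageSize.height * scale)
    return CGRect(x: bounds.midX - size.width / 2,
                  y: bounds.midY - size.height / 2,
                  width: size.width, height: size.height)
}

// Hypothetical helper. Vision-normalized box (lower-left origin) -> frame
// inside the image view, measured against the displayed image, not the view.
func faceFrame(forNormalized box: CGRect, imageSize: CGSize, viewBounds: CGRect) -> CGRect {
    let shown = aspectFitRect(imageSize: imageSize, in: viewBounds)
    return CGRect(x: shown.minX + box.minX * shown.width,
                  y: shown.minY + (1 - box.maxY) * shown.height,
                  width: box.width * shown.width,
                  height: box.height * shown.height)
}

// A 4:3 landscape photo in a 300x400 portrait image view.
let imageSize = CGSize(width: 1200, height: 900)
let bounds = CGRect(x: 0, y: 0, width: 300, height: 400)
let shown = aspectFitRect(imageSize: imageSize, in: bounds)
let face = faceFrame(forNormalized: CGRect(x: 0.5, y: 0.5, width: 0.25, height: 0.25),
                     imageSize: imageSize, viewBounds: bounds)
```

Here the displayed image is 300x225 and starts 87.5 points from the top. Scaling by `imageView.bounds` directly would stretch every box vertically and misplace it.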

iOS Vision: Drawing Detected Rectangles on Live Camera Preview Works on iPhone But Not on iPad

In case it helps anyone else: based on Mr.SwiftOak's comment, I resolved the problem with a combination of two changes. First, I changed the preview layer's gravity from .resizeAspectFill to .resizeAspect, so the preview shows the whole raw frame at its original aspect ratio. The preview no longer fills the entire iPad screen, but this made it much simpler to overlay the rectangles accurately.

Second, I drew the rectangles as an .overlay on the preview, so that the drawing coordinates are relative to the origin of the image (top left) rather than to the view itself, whose origin (0, 0) is at the top left of the entire screen.

To clarify how I've been drawing the rects, there are two parts:

Converting the detected rectangles' bounding boxes into paths on CAShapeLayers:

```swift
let boxPath = CGPath(rect: bounds, transform: nil)
let boxShapeLayer = CAShapeLayer()
boxShapeLayer.path = boxPath
boxShapeLayer.fillColor = UIColor.clear.cgColor
boxShapeLayer.strokeColor = UIColor.yellow.cgColor
boxLayers.append(boxShapeLayer)
```

Appending the layers in updateUIView of the preview's UIViewRepresentable:

```swift
func updateUIView(_ uiView: VideoPreviewView, context: Context) {
    if let rectangles = self.viewModel.rectangleDrawings {
        for rect in rectangles {
            uiView.videoPreviewLayer.addSublayer(rect)
        }
    }
}
```
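The switch to `.resizeAspect` simplifies overlaying because, with aspect-fit, the whole frame is visible and only needs a centering offset. Aspect-fill scales the frame past the view and crops it, so part of the frame, and any boxes in it, falls outside the view. Below is a minimal sketch of the displayed-frame calculation for both gravities, assuming the preview layer fills `viewSize`. The `PreviewGravity` enum is a hypothetical stand-in for `AVLayerVideoGravity`, and `displayedFrameRect` and `overlayRect` are hypothetical helpers. AVFoundation also offers `AVCaptureVideoPreviewLayer.layerRectConverted(fromMetadataOutputRect:)`, which accounts for the gravity. However, it expects AVFoundation's metadata-output coordinates rather than Vision's lower-left normalized rects, so a Vision box would need converting before it could be passed in.

```swift
import Foundation

// Hypothetical stand-in for AVLayerVideoGravity's two aspect-preserving modes.
enum PreviewGravity {
    case resizeAspect      // fit: whole frame visible, bars on two sides
    case resizeAspectFill  // fill: view fully covered, frame cropped on two sides
}

// Hypothetical helper. Rect (in view points) occupied by the full camera frame.
// With .resizeAspectFill this rect is larger than the view and its origin
// can be negative, i.e. part of the frame is off-screen.
func displayedFrameRect(frameSize: CGSize, viewSize: CGSize,
                        gravity: PreviewGravity) -> CGRect {
    let sx = viewSize.width / frameSize.width
    let sy = viewSize.height / frameSize.height
    let scale = gravity == .resizeAspect ? min(sx, sy) : max(sx, sy)
    let size = CGSize(width: frameSize.width * scale,
                      height: frameSize.height * scale)
    return CGRect(x: (viewSize.width - size.width) / 2,
                  y: (viewSize.height - size.height) / 2,
                  width: size.width, height: size.height)
}

// Hypothetical helper. Maps a Vision-normalized box (lower-left origin)
// into view points, given where the full frame is drawn.
func overlayRect(forNormalized box: CGRect, in frameRect: CGRect) -> CGRect {
    CGRect(x: frameRect.minX + box.minX * frameRect.width,
           y: frameRect.minY + (1 - box.maxY) * frameRect.height,
           width: box.width * frameRect.width,
           height: box.height * frameRect.height)
}

// A portrait 9:16 frame in a 3:4, iPad-like view.
let cameraFrame = CGSize(width: 900, height: 1600)
let screen = CGSize(width: 450, height: 600)
let fit = displayedFrameRect(frameSize: cameraFrame, viewSize: screen, gravity: .resizeAspect)
let fill = displayedFrameRect(frameSize: cameraFrame, viewSize: screen, gravity: .resizeAspectFill)
// A box covering the bottom eighth of the frame, drawn with the fill gravity.
let bottomStrip = overlayRect(forNormalized: CGRect(x: 0, y: 0, width: 1, height: 0.125), in: fill)
```

With the fill gravity, the frame is 800 points tall inside a 600-point view, so 100 points are cropped at the top and at the bottom. The bottom-eighth box maps to y = 600, entirely below the visible area. With the fit gravity, the frame is 337.5 points wide and centered between 56.25-point bars, and every box stays on screen.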

Bounding Box from VNDetectRectangleRequest is not correct size when used as child VC

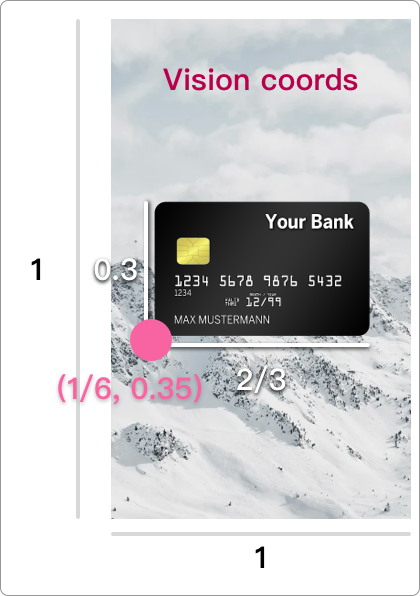

First let's look at boundingBox, which is a "normalized" rectangle. Apple says:

The coordinates are normalized to the dimensions of the processed image, with the origin at the image's lower-left corner.

This means that:

- The `origin` is at the bottom-left, not the top-left
- The `origin.x` and `width` are expressed as a fraction of the entire image's width
- The `origin.y` and `height` are expressed as a fraction of the entire image's height

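Hopefully this comparison makes it clearer:

| What you are used to | What Vision returns |
|---|---|
| Origin at the top-left, y grows downward, values in points | Origin at the bottom-left, y grows upward, values are fractions (0 to 1) of the image size |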
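In code, the three points above mean scaling x and width by the image width, scaling y and height by the image height, and then flipping y if you need a top-left origin. Vision provides `VNImageRectForNormalizedRect(_:_:_:)` for the first step; its result keeps the lower-left origin. Below is a dependency-free sketch of both steps, useful for checking the math. The function names are hypothetical. The same conversion applies inside a child view controller, as long as the size you pass is the size of the view the image is actually drawn in.

```swift
import Foundation

// Hypothetical helper mirroring what VNImageRectForNormalizedRect computes:
// fractions -> pixels. The origin stays at the lower-left.
func imageRect(forNormalized box: CGRect, imageWidth: Int, imageHeight: Int) -> CGRect {
    let w = CGFloat(imageWidth), h = CGFloat(imageHeight)
    return CGRect(x: box.minX * w, y: box.minY * h,
                  width: box.width * w, height: box.height * h)
}

// Hypothetical helper. Moves a lower-left-origin rect into top-left-origin
// coordinates for an image (or view) of the given height.
func topLeftRect(from rect: CGRect, imageHeight: Int) -> CGRect {
    CGRect(x: rect.minX, y: CGFloat(imageHeight) - rect.maxY,
           width: rect.width, height: rect.height)
}

let normalized = CGRect(x: 0.125, y: 0.5, width: 0.25, height: 0.25)
let pixels = imageRect(forNormalized: normalized, imageWidth: 1920, imageHeight: 1080)
let uiKitStyle = topLeftRect(from: pixels, imageHeight: 1080)
```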
