How can I optimize this SQL query (Using Indexes)?

Intro: There is a lot to talk about here, and because of the complexity of SQL, it's impossible for anyone to fully help with your query without more detail: it matters what your query is, how large the tables are, and which database system is being used. If you don't know what indexes are, or how to use them, see here: How does database indexing work?

Precaution: Again, if you have a DBA for your system, check with them before indexing anything, especially on a live system. They can even help, if you're nice to them. If the system is used by many others, be careful before changing anything like indexes. If the data is being used for multiple query types, make sure you aren't creating tons of indexes on them that conflict or overlap.

Syntax: The usual form is CREATE INDEX [index name] ON [table name] ( [column name] ). CREATE INDEX is not actually defined by the SQL standard (SQL-92 leaves indexes to the implementation), but this syntax should work on almost any system. If you need only one index on the table, and there is not already a clustered index (a table can have at most one), SQL Server lets you use: CREATE [UNIQUE] CLUSTERED INDEX [index name] ON [table name] ( [column name] ). Make it UNIQUE if there cannot be multiple rows with the same values. If you can't get this to work, see this post for more details: How do I index a database column.
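To try the syntax without touching a shared server, here is a minimal sketch using SQLite through Python's built-in sqlite3 module (an assumption: the answer itself targets SQL Server/MySQL-style systems). The table and index names are hypothetical. SQLite has no CLUSTERED keyword, so only the plain and UNIQUE forms are shown.

```python
import sqlite3

# In-memory database; the customer table and index names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (customerid INTEGER, email TEXT, lastname TEXT)")

# Plain index: CREATE INDEX [index name] ON [table name] ([column name])
conn.execute("CREATE INDEX idx_customer_lastname ON customer (lastname)")

# Unique index: a second row with the same email would be rejected.
conn.execute("CREATE UNIQUE INDEX idx_customer_email ON customer (email)")

# List the indexes SQLite now has for this table.
indexes = sorted(
    name
    for (name,) in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'index' AND tbl_name = 'customer'"
    )
)
print(indexes)  # ['idx_customer_email', 'idx_customer_lastname']
```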

Which tables should be indexed? Any table that is being used for querying, especially if the data is static or only gets new values, is a great candidate. If the table is in your query, and has a join statement, you probably want to have an index on the column(s) being joined.

What columns should be indexed? There are full books written on choosing the best indexes, and how to properly index a database. A basic rule of thumb for indexing, if you don't want to dive deep into the problem, is: index by the following, in this order:

- Join predicates (on Table1.columnA=Table2.ColumnA and Table1.columnB=Table2.ColumnQ)

- Filtered columns (where Table1.columnN='Bob' and Table1.columnS<20)

- Order by / Group By / etc. (any column which is used for the ordering/grouping should be in the index, if possible.)
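A minimal sketch of that rule of thumb, again assuming SQLite via Python's sqlite3 as a stand-in for your own DBMS: one index on the join column of the joined table, and one composite index with the equality-filtered column first and the range/ORDER BY column second. The payload and info columns and the index names are made up for illustration; plan wording varies between SQLite versions, so the plan is printed rather than quoted.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Table1 (columnA INTEGER, columnN TEXT, columnS INTEGER, payload TEXT);
    CREATE TABLE Table2 (columnA INTEGER, info TEXT);
    -- 1. join predicate column on the joined table
    CREATE INDEX idx_t2_columnA ON Table2 (columnA);
    -- 2./3. equality filter first, then the range / ORDER BY column
    CREATE INDEX idx_t1_filter ON Table1 (columnN, columnS);
""")

query = """
    SELECT Table1.payload, Table2.info
    FROM Table1
    JOIN Table2 ON Table1.columnA = Table2.columnA
    WHERE Table1.columnN = 'Bob' AND Table1.columnS < 20
    ORDER BY Table1.columnS
"""
# Column 3 of each EXPLAIN QUERY PLAN row is the readable description.
plan = [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + query)]
for step in plan:
    print(step)
```

Because columnN is fixed by the filter, rows come out of idx_t1_filter already ordered by columnS, so the planner may be able to skip a separate sort step.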

Also:

- Use data types that make sense - store nothing as varchar if it's an integer or date. (Column width matters. Use the smallest data type you can, if possible.)

- Make sure your joins are the same data type - int to int, varchar to varchar, and so on.

- If possible, use unique, non-null indexes on each join predicate in each table.

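- Make sure every column that can be non-null is declared NOT NULL. If a column cannot contain null values, you can use the following syntax (this is SQL Server's form; MySQL uses MODIFY COLUMN, and PostgreSQL uses ALTER COLUMN ... SET NOT NULL):

    ALTER TABLE Table1
        ALTER COLUMN columnN int NOT NULL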

Do all of this, and you'll be well on your way. But if you need this stuff regularly, learn it! Buy a book, read online, find the information. There is a lot of information out there, and it is a deep topic, but you can make queries MUCH better if you know what you are doing.

How does database indexing work?

Why is it needed?

When data is stored on disk-based storage devices, it is stored as blocks of data. These blocks are accessed in their entirety, making them the atomic disk access operation. Disk blocks are structured in much the same way as linked lists; both contain a section for data, a pointer to the location of the next node (or block), and both need not be stored contiguously.

Because a set of records can only be sorted on one field at a time, we can state that searching on a field that isn't sorted requires a Linear Search, which requires (N+1)/2 block accesses (on average), where N is the number of blocks that the table spans. If that field is a non-key field (i.e. doesn't contain unique entries), then the entire tablespace must be searched at N block accesses.

Whereas with a sorted field, a Binary Search may be used, which has log2 N block accesses. Also since the data is sorted given a non-key field, the rest of the table doesn’t need to be searched for duplicate values, once a higher value is found. Thus the performance increase is substantial.
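To see the difference in numbers, the sketch below counts block reads for both searches, under the simplifying assumption that each block holds one sorted key. Averaged over every possible target, the linear search comes out at (N+1)/2 reads, while the binary search needs only about log2 N reads (at most 10 for N = 1,000). The function names are hypothetical.

```python
import math

def linear_search_accesses(blocks, target):
    """Read blocks in order until the target is found; return blocks read."""
    for accesses, key in enumerate(blocks, start=1):
        if key == target:
            return accesses
    return len(blocks)  # not found: every block had to be read

def binary_search_accesses(blocks, target):
    """Binary search over sorted blocks; return blocks read."""
    lo, hi, accesses = 0, len(blocks) - 1, 0
    while lo <= hi:
        mid = (lo + hi) // 2
        accesses += 1
        if blocks[mid] == target:
            return accesses
        if blocks[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return accesses

# Simplification: one sorted key per block, N = 1,000 blocks.
N = 1000
blocks = list(range(N))
avg_linear = sum(linear_search_accesses(blocks, t) for t in blocks) / N
worst_binary = max(binary_search_accesses(blocks, t) for t in blocks)
print(avg_linear, (N + 1) / 2)                # 500.5 500.5
print(worst_binary, math.ceil(math.log2(N)))  # 10 10
```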

What is indexing?

Indexing is a way of sorting a number of records on multiple fields. Creating an index on a field in a table creates another data structure which holds the field value, and a pointer to the record it relates to. This index structure is then sorted, allowing Binary Searches to be performed on it.
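A toy version of that structure, purely as an illustration (real engines use B-trees rather than a flat sorted list): the index holds (field value, record pointer) pairs sorted by value, so a binary search with Python's bisect module finds the first match, and the pointers lead back to the full records. The sample rows are hypothetical.

```python
from bisect import bisect_left

# Hypothetical table; a row's position in the list stands in for its record pointer.
table = [
    {"id": 1, "firstName": "Mia", "lastName": "Ng"},
    {"id": 2, "firstName": "Ann", "lastName": "Lee"},
    {"id": 3, "firstName": "Zoe", "lastName": "Roy"},
    {"id": 4, "firstName": "Ann", "lastName": "Kim"},
]

# The index: (firstName, record pointer) pairs, sorted by firstName.
index = sorted((row["firstName"], ptr) for ptr, row in enumerate(table))

def lookup(index, table, value):
    """Binary-search the index, then follow pointers to the matching records."""
    i = bisect_left(index, (value,))  # (value,) sorts before any (value, ptr)
    matches = []
    while i < len(index) and index[i][0] == value:
        matches.append(table[index[i][1]])
        i += 1
    return matches

print([r["id"] for r in lookup(index, table, "Ann")])  # [2, 4]
```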

The downside to indexing is that these indices require additional space on the disk. Since the indices of a MyISAM table are all stored together in one file, this file can quickly reach the size limits of the underlying file system if many fields within the same table are indexed.

How does it work?

Firstly, let's outline a sample database table schema:

| Field name | Data type | Size on disk |
|---|---|---|
| id (Primary key) | Unsigned INT | 4 bytes |
| firstName | Char(50) | 50 bytes |
| lastName | Char(50) | 50 bytes |
| emailAddress | Char(100) | 100 bytes |

Note: char was used in place of varchar to allow for an accurate size on disk value.

This sample database contains five million rows and is unindexed. The performance of two queries will now be analyzed: one using the id (a sorted key field) and one using the firstName (a non-key unsorted field).

Example 1 - sorted vs unsorted fields

Our sample database holds r = 5,000,000 records of a fixed size, with a record length of R = 204 bytes, stored in a table using the MyISAM engine with the default block size B = 1,024 bytes. The blocking factor of the table is bfr = (B/R) = 1024/204 = 5 records per disk block (rounded down to whole records). The total number of blocks required to hold the table is N = (r/bfr) = 5000000/5 = 1,000,000 blocks.

A linear search on the id field would require an average of N/2 = 500,000 block accesses to find a value, given that the id field is a key field. But since the id field is also sorted, a binary search can be conducted, requiring an average of log2 1000000 ≈ 19.93, rounded up to 20 block accesses. Instantly we can see this is a drastic improvement.

The firstName field, however, is neither sorted (so a binary search is impossible) nor a key field (so its values are not unique), and thus the table will require searching to the end, for exactly N = 1,000,000 block accesses. It is this situation that indexing aims to correct.

Given that an index record contains only the indexed field and a pointer to the original record, it stands to reason that it will be smaller than the multi-field record that it points to. So the index itself requires fewer disk blocks than the original table, which therefore requires fewer block accesses to iterate through. The schema for an index on the firstName field is outlined below:

| Field name | Data type | Size on disk |
|---|---|---|
| firstName | Char(50) | 50 bytes |
| (record pointer) | Special | 4 bytes |

Note: Pointers in MySQL are 2, 3, 4 or 5 bytes in length depending on the size of the table.

Example 2 - indexing

Our sample database holds r = 5,000,000 records, the index record length is R = 54 bytes, and the default block size is B = 1,024 bytes. The blocking factor of the index is bfr = (B/R) = 1024/54 = 18 records per disk block (rounded down). The total number of blocks required to hold the index is N = (r/bfr) = 5000000/18 = 277,778 blocks (rounded up).

Now a search using the firstName field can utilize the index to increase performance. This allows for a binary search of the index with an average of log2 277778 ≈ 18.08, rounded up to 19 block accesses. The matching index entry holds the address of the actual record, which requires one further block access to read, bringing the total to 19 + 1 = 20 block accesses: a far cry from the 1,000,000 block accesses required to find a firstName match in the non-indexed table.
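The arithmetic of Examples 1 and 2 can be checked with a few lines of Python. This assumes records do not span blocks (bfr rounded down) and that the block count is rounded up, which is how the figures above come out; block_stats is a hypothetical helper name.

```python
import math

def block_stats(records, record_len, block_size=1024):
    """Return (blocking factor, blocks needed) for fixed-size records."""
    bfr = block_size // record_len       # whole records per block
    blocks = math.ceil(records / bfr)    # a partly filled last block still counts
    return bfr, blocks

r = 5_000_000

# Example 1: the table itself, R = 204 bytes
bfr_t, n_t = block_stats(r, 204)
linear_avg = n_t // 2
binary_t = math.ceil(math.log2(n_t))

# Example 2: the firstName index, R = 50 + 4 = 54 bytes
bfr_i, n_i = block_stats(r, 54)
binary_i = math.ceil(math.log2(n_i))
total_indexed = binary_i + 1  # one more block access to read the record itself

print(bfr_t, n_t, linear_avg, binary_t)     # 5 1000000 500000 20
print(bfr_i, n_i, binary_i, total_indexed)  # 18 277778 19 20
print(f"index overhead: {n_i / n_t:.1%}")   # index overhead: 27.8%
```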

When should it be used?

Given that creating an index requires additional disk space (277,778 blocks extra in the above example, a ~28% increase), and that too many indices can cause issues arising from the file system's size limits, careful thought must be used to select the correct fields to index.

Since indices are only used to speed up searching for a matching field within the records, it stands to reason that indexing fields used only for output would simply be a waste of disk space and of processing time when doing an insert or delete operation, and thus should be avoided. Also, given the nature of a binary search, the cardinality or uniqueness of the data is important. Indexing on a field with a cardinality of 2 would only split the data in half, whereas a cardinality of 1,000 would narrow each search to roughly 1/1,000 of the records (about 5,000 of the 5,000,000 here). With a cardinality as low as 2, the effectiveness is reduced to that of a linear search, and the query optimizer may avoid using the index altogether if it expects a large share of the rows (a commonly quoted threshold is about 30%) to match, effectively making the index a waste of space.
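As a rough check on selectivity, assuming values are evenly distributed, each value of a field with cardinality c matches about r/c of the r records. The sketch below applies that to the 5,000,000-row sample table; it is back-of-the-envelope arithmetic, not how any particular optimizer estimates rows.

```python
def expected_matches(total_records, cardinality):
    """Rows matched by one value, assuming the values are evenly distributed."""
    return total_records / cardinality

r = 5_000_000  # records in the sample table
for c in (2, 1_000, r):
    m = expected_matches(r, c)
    print(f"cardinality {c:,}: about {m:,.0f} rows per value ({m / r:.2%} of the table)")
```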

What is an index in SQL?

An index is used to speed up searching in the database. MySQL has some good documentation on the subject (which is relevant for other SQL servers as well):

http://dev.mysql.com/doc/refman/5.0/en/mysql-indexes.html

An index can be used to efficiently find all rows matching some column in your query and then walk through only that subset of the table to find exact matches. If you don't have indexes on any column in the WHERE clause, the SQL server has to walk through the whole table and check every row to see if it matches, which may be a slow operation on big tables.

The index can also be a UNIQUE index, which means that you cannot have duplicate values in that column, or a PRIMARY KEY which in some storage engines defines where in the database file the value is stored.

In MySQL you can use EXPLAIN in front of your SELECT statement to see if your query will make use of any index. This is a good start for troubleshooting performance problems. Read more here:

http://dev.mysql.com/doc/refman/5.0/en/explain.html
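EXPLAIN output differs from system to system. As a self-contained stand-in, here is a sketch using SQLite's EXPLAIN QUERY PLAN through Python's sqlite3 module, comparing the plan for the same lookup before and after an index is added. The person table and index name are hypothetical, and the exact plan wording depends on the SQLite version.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE person (id INTEGER PRIMARY KEY, firstName TEXT, lastName TEXT)")
query = "SELECT id, lastName FROM person WHERE firstName = ?"

def plan(sql, params=()):
    # Column 3 of each EXPLAIN QUERY PLAN row is the readable description.
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]

before = plan(query, ("Ann",))
conn.execute("CREATE INDEX idx_person_firstName ON person (firstName)")
after = plan(query, ("Ann",))
print(before)  # full table scan: the description mentions SCAN
print(after)   # index search: the description names idx_person_firstName
```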

How to Optimize Queries in a Database - The Basics

The DBMS has to do a lookup for every WHERE condition and for every JOIN ... ON condition. The two work the same way.

Suppose we write:

    select name
    from customer
    where customerid=37;

Somehow the DBMS has to find the record or records with customerid=37. If there is no index, the only way to do this is to read every record in the table comparing the customerid to 37. Even when it finds one, it has no way of knowing there is only one, so it has to keep looking for others.

If you create an index on customerid, the DBMS has ways to search the index very quickly. It's not a sequential search but, depending on the database, a binary search or some other efficient method. Exactly how doesn't matter; just accept that it's much faster than a sequential search. The index then takes it directly to the appropriate record or records. Furthermore, if you specify that the index is "unique", then the database knows that there can only be one, so it doesn't waste time looking for a second. (And the DBMS will prevent you from adding a second.)
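A minimal sketch of the "unique" behaviour, assuming SQLite via Python's sqlite3 (table and column names follow the example above; the index name and rows are made up): the second row with customerid 37 is rejected by the unique index, and the lookup returns the single stored row.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (customerid INTEGER, name TEXT)")
conn.execute("CREATE UNIQUE INDEX idx_customer_id ON customer (customerid)")
conn.execute("INSERT INTO customer VALUES (37, 'Alice')")

try:
    conn.execute("INSERT INTO customer VALUES (37, 'Bob')")
    duplicate_rejected = False
except sqlite3.IntegrityError as exc:
    duplicate_rejected = True
    print("rejected:", exc)  # the message names the violated uniqueness rule

rows = conn.execute("SELECT name FROM customer WHERE customerid = 37").fetchall()
print(rows)  # [('Alice',)]
```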

Now consider this query:

    select name
    from customer
    where city='Albany' and state='NY';

Now we have two conditions. If you have an index on only one of those fields, the DBMS will use that index to find a subset of the records, then sequentially search those. For example, if you have an index on state, the DBMS will quickly find the first record for NY, then sequentially search looking for city='Albany', and stop looking when it reaches the last record for NY.

If you have an index that includes both fields, i.e. "create index on customer (state, city)", then the DBMS can immediately zoom to the right records.
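The same idea can be sketched in SQLite via Python's sqlite3 (an assumption; the index name is hypothetical). With the composite index on (state, city), the plan should show both columns used in the index search, while a filter on city alone cannot seek into an index whose leading column is state and is expected to fall back to a scan (SQLite can sometimes skip-scan such an index, but that typically needs statistics from ANALYZE).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (name TEXT, city TEXT, state TEXT)")
conn.execute("CREATE INDEX idx_customer_state_city ON customer (state, city)")

def plan(sql):
    # Column 3 of each EXPLAIN QUERY PLAN row is the readable description.
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]

both = plan("SELECT name FROM customer WHERE city = 'Albany' AND state = 'NY'")
city_only = plan("SELECT name FROM customer WHERE city = 'Albany'")
print(both)       # index search on (state=? AND city=?)
print(city_only)  # full scan: state is the leading index column
```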

If you have two separate indexes, one on each field, the DBMS will have various rules that it applies to decide which index to use. Again, exactly how this is done depends on the particular DBMS you are using, but basically it keeps statistics on the total number of records, the number of different values, and the distribution of values, and uses them to pick the index that narrows the search the most. Then it will search the records found through that index sequentially for the ones that satisfy the other condition. In this case the DBMS would probably observe that there are many more cities than there are states, so by using the city index it can quickly zoom to the 'Albany' records. Then it will sequentially search these, checking the state of each against 'NY'. If you have records for Albany, California, these will be skipped.

Every join requires some sort of look-up.

Say we write:

    select customer.name
    from transaction
    join customer on transaction.customerid=customer.customerid
    where transaction.transactiondate='2010-07-04' and customer.type='Q';

Now the DBMS has to decide which table to read first, select the appropriate records from there, and then find the matching records in the other table.

If you had an index on transaction.transactiondate and customer.customerid, the best plan would likely be to find all the transactions with this date, and then for each of those find the customer with the matching customerid, and then verify that the customer has the right type.

If you don't have an index on customer.customerid, then the DBMS could quickly find the transaction, but then for each transaction it would have to sequentially search the customer table looking for a matching customerid. (This would likely be very slow.)

Suppose instead that the only indexes you have are on transaction.customerid and customer.type. Then the DBMS would likely use a completely different plan. It would probably scan the customer table for all customers with the correct type, then for each of these find all transactions for this customer, and sequentially search them for the right date.
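To check which plan your own DBMS picks, ask it. Here is a sketch with SQLite's EXPLAIN QUERY PLAN via Python's sqlite3, assuming an index on transaction.transactiondate and declaring customer.customerid as SQLite's INTEGER PRIMARY KEY (which is itself the lookup structure for that column). The table is quoted as "transaction" because TRANSACTION is an SQL keyword; the other column and index names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (customerid INTEGER PRIMARY KEY, name TEXT, type TEXT);
    CREATE TABLE "transaction" (
        transactionid INTEGER PRIMARY KEY,
        customerid INTEGER,
        transactiondate TEXT
    );
    CREATE INDEX idx_transaction_date ON "transaction" (transactiondate);
""")

query = """
    SELECT customer.name
    FROM "transaction"
    JOIN customer ON "transaction".customerid = customer.customerid
    WHERE "transaction".transactiondate = '2010-07-04' AND customer.type = 'Q'
"""
plan = [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + query)]
for step in plan:
    print(step)
# Expected shape: find transactions via idx_transaction_date,
# then look up each customer by its INTEGER PRIMARY KEY.
```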

The most important key to optimization is to figure out what indexes will really help and create those indexes. Extra, unused indexes are a burden on the database because it takes work to maintain them, and if they're never used this is wasted effort.

You can tell what indexes the DBMS will use for any given query with the EXPLAIN command. I use this all the time to determine if my queries are being optimized well or if I should be creating additional indexes. (Read the documentation on this command for an explanation of its output.)

Caveat: Remember that I said that the DBMS keeps statistics on the number of records and the number of different values and so on in each table. EXPLAIN may give you a completely different plan today than it gave yesterday if the data has changed. For example, if you have a query that joins two tables and one of these tables is very small while the other is large, it will be biased toward reading the small table first and then finding matching records in the large table. Adding records to a table can change which is larger, and thus lead the DBMS to change its plan. Thus, you should run EXPLAIN against a database with realistic data. Running it against a test database with 5 records in each table is of far less value than running it against a live database.
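In SQLite (used here as an assumption, via Python's sqlite3), these statistics are gathered by ANALYZE and stored in the sqlite_stat1 table; other systems have their own equivalents (MySQL has ANALYZE TABLE, for example). The data below is made up: 1,000 customers split evenly between two states.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (customerid INTEGER, state TEXT)")
conn.execute("CREATE INDEX idx_customer_state ON customer (state)")
conn.executemany(
    "INSERT INTO customer VALUES (?, ?)",
    [(i, "NY" if i % 2 else "CA") for i in range(1000)],
)

conn.execute("ANALYZE")  # collect row counts and value distribution
stats = conn.execute("SELECT tbl, idx, stat FROM sqlite_stat1").fetchall()
print(stats)  # stat text: total rows, then average rows per distinct state
```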

Well, there's much more that could be said, but I don't want to write a book here.

What indexes optimize this query with four joins?

It won't always work, but try to:

- Reorder tables in joins from the smallest one to the biggest one.

- Use a subquery instead of the whole ProjectTransaction table:

    JOIN (SELECT RefEmployeeID, RefProjectID
          FROM ProjectTransaction AS PTran
          WHERE @from <= PTran.Date AND PTran.Date <= @to AND PTran.Type = 0) AS trans
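A runnable sketch of that pattern, assuming SQLite via Python's sqlite3: the @from/@to variables become the named parameters :date_from and :date_to, and the Employee table and sample rows are hypothetical. Note that many optimizers (SQLite included) may flatten such a subquery into a plain join, so whether it is actually faster has to be checked with EXPLAIN on your own system.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Employee (EmployeeID INTEGER PRIMARY KEY, Name TEXT);
    CREATE TABLE ProjectTransaction (
        RefEmployeeID INTEGER, RefProjectID INTEGER, Date TEXT, Type INTEGER
    );
    INSERT INTO Employee VALUES (1, 'Ann'), (2, 'Bob');
    INSERT INTO ProjectTransaction VALUES
        (1, 10, '2024-01-05', 0),
        (1, 11, '2024-02-01', 0),  -- outside the date range
        (2, 12, '2024-01-10', 1),  -- wrong Type
        (2, 13, '2024-01-15', 0);
""")

# Filter ProjectTransaction in the derived table, then join only the survivors.
query = """
    SELECT e.Name, trans.RefProjectID
    FROM Employee AS e
    JOIN (SELECT RefEmployeeID, RefProjectID
          FROM ProjectTransaction AS PTran
          WHERE :date_from <= PTran.Date AND PTran.Date <= :date_to
            AND PTran.Type = 0) AS trans
      ON trans.RefEmployeeID = e.EmployeeID
    ORDER BY e.Name, trans.RefProjectID
"""
params = {"date_from": "2024-01-01", "date_to": "2024-01-31"}
rows = conn.execute(query, params).fetchall()
print(rows)  # [('Ann', 10), ('Bob', 13)]
```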

How to optimize MySQL queries with many combinations of where conditions?

Well, the file (it is not a table) is not at all Normalised. Therefore no amount of indices on combinations of fields will help the queries.

Second, MySQL is (a) not compliant with the SQL requirements, and (b) it does not have a Server Architecture or the features of one,

- such as Statistics, which are used by a genuine Query Optimiser, which commercial SQL platforms have. The "single index" issue you raise in the comments does not apply.

Therefore, while we can fix up the table, etc, you may never obtain the performance that you seek from the freeware.

E.g. in the commercial world, 6M rows is nothing; we worry when we get to a billion rows.

E.g. Statistics are automatic; we have to tweak them only when necessary: an un-normalised table or billions of rows.

Or ... should I use other middleware, such as Elasticsearch?

It depends on the use of genuine SQL vs MySQL, and the middleware.

If you fix up the file and make a set of Relational tables, the queries are then quite simple, and fast. It does not justify a middleware search engine (that builds a data cube on the client system).

If they are not fast on MySQL, then the first recommendation would be to get a commercial SQL platform instead of the freeware.

The last option, the very last, is to stick to the freeware and add a big fat middleware search engine to compensate.

Or is it good to create 10 tables which have data of category_1~10, and execute many INNER JOIN in the queries?

Yes. JOINs are quite ordinary in SQL. Contrary to popular mythology, a normalised database, which means many more tables than an un-normalised one, causes fewer JOINs, not more JOINs.

So, yes, Normalise that beast. Ten tables is the starting perception, still not at all Normalised. One table for each of the following would be a step in the direction of Normalised:

- Item

  Item_id will be unique.

- Category

  This is not category_1, etc., but each of the values that are in category_1, etc. You must not have multiple values in a single column; it breaks 1NF. Such values will be (a) Atomic, and (b) unique. The Relational Model demands that the rows are unique.

- The meaning of category_1, etc. in Item is not given. (If you provide some example data, I can improve the accuracy of the data model.) Obviously it is not [2].

  If it is a Priority (1..10), or something similar, that the users have chosen or voted on, this table will be a table that supplies the many-to-many relationship between Item and Category, with a Priority for each row.

  Let's call it Poll. The relevant Predicates would be something like:

  Each Poll is 1 Item

  Each Poll is 1 Priority

  Each Poll is 1 Category

- Likewise, sort_score is not explained. If it is even remotely what it appears to be, you will not need it, because it is a Derived Value, which you should compute on the fly: once the tables are Normalised, the SQL required to compute this is straight-forward. It is not one that you compute-and-store every 5 minutes or every 10 seconds.

The Relational Model

The above maintains the scope of just answering your question, without pointing out the difficulties in your file. Noting the Relational Database tag, this section deals with the Relational errors.

The Record ID field (item_id or category_id is yours) is prohibited in the Relational Model. It is a physical pointer to a record, which is explicitly the very thing that the RM overcomes, and that is required to be overcome if one wishes to obtain the benefits of the RM, such as ease of queries, and simple, straight-forward SQL code.

Conversely, the Record ID is always one additional column and one additional index, and the SQL code required for navigation becomes complex (and buggy) very quickly. You will have enough difficulty with the code as it is; I doubt you would want the added complexity.

Therefore, get rid of the Record ID fields.

The Relational Model requires that the Keys are "made up from the data". That means something from the logical row, that the users use. Usually they know precisely what identifies their data, such as a short name.

- It is not manufactured by the system, such as a RecordID field which is a GUID or AUTOINCREMENT, which the user does not see. Such fields are physical pointers to records, not Keys to logical rows. Such fields are pre-Relational, pre-DBMS, 1960s Record Filing Systems, the very thing that the RM superseded. But they are heavily promoted and marketed as "relational".

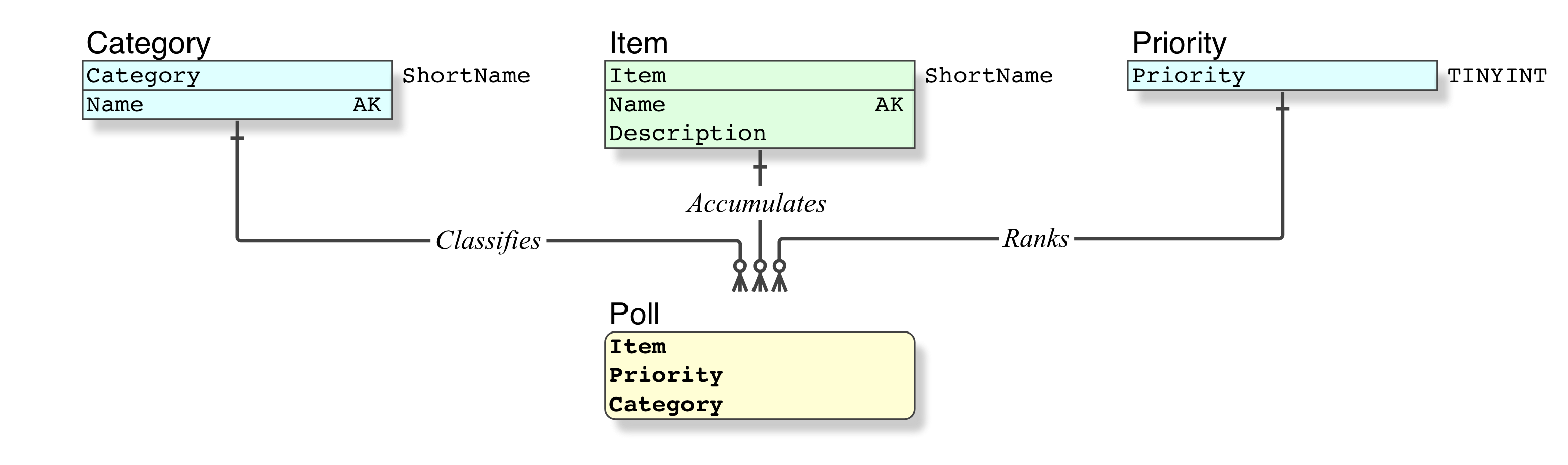

Relational Data Model • Initial

Looks like this.

All my data models are rendered in IDEF1X, the Standard for modelling Relational databases since 1993.

My IDEF1X Introduction is essential reading for beginners.

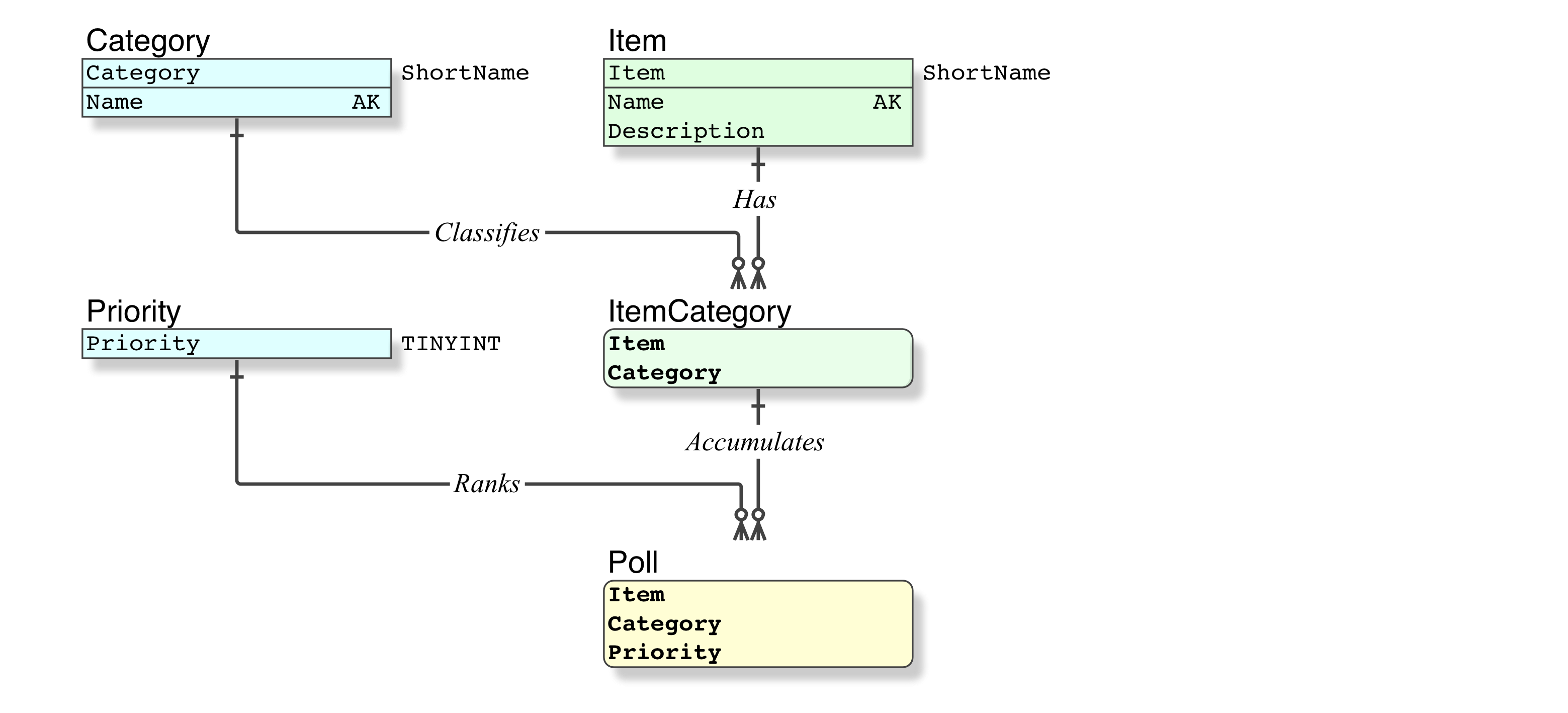

Relational Data Model • Improved

Ternary relations (aka three-way JOINs) are known to be a problem, indicating that further Normalisation is required. Codd teaches that every ternary relation can be reduced to two binary relations.

In your case, perhaps an Item has certain, not all, Categories. The above implements Polls of Items allowing all Categories for each Item, which is a typical error in a ternary relation, and which is why it requires further Normalisation. It is also the classic error in every RFS file.

The corrected model would therefore be to establish the Categories for each Item first as ItemCategory, your "item can have several numbers of category_x". And then to allow Polls on that constrained ItemCategory. Note, this level of constraining data is not possible in 1960s Record Filing Systems, in which the "key" is a fabricated id field:

Each ItemCategory is 1 Item

Each ItemCategory is 1 Category

Each Poll is 1 Priority

Each Poll is 1 ItemCategory

Your indices are now simple and straight-forward; no additional indices are required.
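A minimal sketch of the improved model's constraints, assuming SQLite via Python's sqlite3. Keys are made up from the data (names, not generated ids), following the answer. The Poll key of (ItemName, CategoryName, Priority) is my assumption, since the diagram is not reproduced here, and the sample values are hypothetical. The composite PRIMARY KEY constraints create the needed indices, and the foreign key to ItemCategory rejects a Poll on a Category the Item does not have.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces FKs when this is on
conn.executescript("""
    CREATE TABLE Item     (ItemName     TEXT PRIMARY KEY);
    CREATE TABLE Category (CategoryName TEXT PRIMARY KEY);
    CREATE TABLE Priority (Priority INTEGER PRIMARY KEY CHECK (Priority BETWEEN 1 AND 10));

    -- Each ItemCategory is 1 Item; each ItemCategory is 1 Category
    CREATE TABLE ItemCategory (
        ItemName     TEXT NOT NULL REFERENCES Item,
        CategoryName TEXT NOT NULL REFERENCES Category,
        PRIMARY KEY (ItemName, CategoryName)
    );

    -- Each Poll is 1 ItemCategory; each Poll is 1 Priority
    CREATE TABLE Poll (
        ItemName     TEXT    NOT NULL,
        CategoryName TEXT    NOT NULL,
        Priority     INTEGER NOT NULL REFERENCES Priority,
        PRIMARY KEY (ItemName, CategoryName, Priority),
        FOREIGN KEY (ItemName, CategoryName) REFERENCES ItemCategory
    );

    INSERT INTO Item VALUES ('Widget');
    INSERT INTO Category VALUES ('Colour'), ('Size');
    INSERT INTO Priority VALUES (1), (2), (3), (4), (5), (6), (7), (8), (9), (10);
    INSERT INTO ItemCategory VALUES ('Widget', 'Colour');  -- Widget has Colour only
""")

conn.execute("INSERT INTO Poll VALUES ('Widget', 'Colour', 3)")  # allowed
try:
    conn.execute("INSERT INTO Poll VALUES ('Widget', 'Size', 5)")  # not an ItemCategory
    fk_rejected = False
except sqlite3.IntegrityError:
    fk_rejected = True

print(fk_rejected)  # True
```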

Likewise your query code will now be simple and straight-forward, and far less prone to bugs.

Please make sure that you learn about Subqueries. The Poll table supports any type of pivoting that may be required.

What generic techniques can be applied to optimize SQL queries?

- Use primary keys

- Avoid select *

- Be as specific as you can when building your conditional statements

- De-normalisation can often be more efficient

- Table variables and temporary tables (where available) will often be better than using a large source table

- Partitioned views

- Employ indices and constraints

Optimize SELECT MySql query using INDEXING

I started to write this in a comment because these are hints and not a clear answer, but it got way too long.

First of all, it is common sense (but not always a rule of thumb) to index the columns appearing in a WHERE clause:

    playing_date BETWEEN '' AND ''
    AND country_code LIKE ''
    AND device_report_tag LIKE ''
    AND channel_report_tag LIKE ''

If your columns have a very high cardinality (your tag columns???), it's probably not a good idea to index them. Country_code and playing_date should be indexed.

The issue here is that there are so many LIKEs in your query. This operator is a performance killer (especially with a leading wildcard, as in LIKE '%abc', which cannot use a regular index), and you are using it on 3 columns. That's awful for the database. So the question is: is that really needed?

For instance, I see no obvious reason to use LIKE on a country code. Will you really query like this:

    AND country_code LIKE 'U%'

to retrieve UK and US?

You probably won't. Chances are high that you will know the countries you are searching for, so you should do this instead:

    AND country_code IN ('UK','US')

which will be a lot faster if the country column is indexed.
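A sketch of the difference, assuming SQLite via Python's sqlite3 (the plays table and index name are hypothetical): with an index on country_code, the IN list can be answered with index searches, while a LIKE with a leading wildcard has to scan the table. The exact plan wording varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE plays (id INTEGER PRIMARY KEY, playing_date TEXT, country_code TEXT)"
)
conn.execute("CREATE INDEX idx_plays_country ON plays (country_code)")

def plan(sql):
    # Column 3 of each EXPLAIN QUERY PLAN row is the readable description.
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]

leading_wildcard = plan("SELECT id, playing_date FROM plays WHERE country_code LIKE '%K'")
in_list = plan("SELECT id, playing_date FROM plays WHERE country_code IN ('UK', 'US')")
print(leading_wildcard)  # full table scan
print(in_list)           # searches idx_plays_country
```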

Next, if you really want to use LIKE on your 2 tag columns, you can try this instead:

    AND MATCH(device_report_tag) AGAINST ('anything*' IN BOOLEAN MODE)

It is also possible to index your tag columns as FULLTEXT (InnoDB actually requires a FULLTEXT index for MATCH ... AGAINST), especially if you search with LIKE 'anything%'. If you search with LIKE '%anything%', the index probably won't help much.

I could also say that with millions of rows a day, you might have to PARTITION your tables (on the date, for instance). And depending on your data, a composite index on the date and something else might help.

Really, there's no simple and straight answer to your complex question, especially with what you have shown (not a lot).
