Derived concepts - database design considerations

Here's an example of the "standard type/subtype" model noted by @Philip Kelley above:

You've got

SupertypeType. The domain table that constrains the domain of the type identifier.

Supertype. The common supertype. A row exists in this table for every instance of one of the subtype instances. It contains the object id (SupertypeID), the object type identifier (TypeID), and the attributes common across all subtypes.

Subtype. A table exists for each subtype. Its primary key is the object id of the supertype table. For each instance of the supertype, no more than one row exists across all the subtype tables (there may be no rows, of course, if the instance in question is of the base (super) type. Each subtype table varies, containing the attributes unique to that particular subtype.

To enumerate all elements, query only the supertype table.

If you know that you're only interesting in a particular subtype, you may simply select from the appropriate subtype table, joining against the supertype table as needed to get whatever common attributes you need.

Addendum. If you need a flat, denormalized view of the entire set, simply left join across the subtypes:

select *

from Supertype t

left join Subtype1 t1 on t1.SupertypeID = t.SupertypeID

left join Subtype2 t2 on t2.SupertypeID = t.SupertypeID

left join Subtype3 t3 on t3.SupertypeID = t.SupertypeID

Now you don't need multiple queries. You've traded that for having to deal with nullity.

What is a good design for a derived database structure?

Your design resembles a design pattern known as Class Table Inheritance. You can visit the tag with the same name right here, or you can see Martin Fowler's treatment of the same topic by searching on the web.

There is one small change I would suggest for you. It may or may not work in your case. It's called "Shared Primary Key". You get rid of DogId and FishId. Instead, you make AminalID the PK for the dog and fish tables as well as the animal table. Note that AnimalID will be BOTH a PK and an FK. And you, of course, have to make it a true copy of the PK from Animal.

This has several advantages, among them simplicity and speed. But the big advantage is that it enforces the one-to-one nature of the IS-A relationship between each subclass and the superclass.

Database Design for multiple tables

First, you want to look into Type / SubType database patterns—search for SO answers on those topics, or online for detailed articles. Here are two such questions I posted to in the past:

Foreign Key Referencing Multiple Tables

Derived concepts - database design considerations

The general idea is you have a “master” table that defines all possible condiments, then you have another table for each type of condiment, where each as the “master condiment” table as it’s parent. The details are subtle and the implementation can be tricky.

Second, what your describing as “parent_condiment_id” sounds more like “condiment_order”, an attribute of the join between hamburger and condiments. (Or does it actually reference another table?)

Database Design for Storing Checklists and Results

You basically need to keep your tasks and checklists read-only. Any modification means a new task or checklist ID. Otherwise, from a design point of view, you should be fine with the model.

Derived account balance vs stored account balance for a simple bank account?

Preface

There is an objective truth: Audit requirements. Additionally, when dealing with public funds, there is Legislature that must be complied with.

You don't have to implement the full accounting requirement, you can implement just the parts that you need.

Conversely, it would be ill-advised to implement something other than the standard accounting requirement (the parts thereof) because that guarantees that when the number of bugs or the load exceeds some threshold, or the system expands,you will have to re-implement. A cost that can, and therefore should, be avoided.

It also needs to be stated: do not hire an unqualified, un-accredited "auditor". There will be consequences, the same as if you hired an unqualified developer. It might be worse, if the Tax Office fines you.

Method

The Standard Accounting method in not-so-primitive countries is this. The "best practice", if you will, in others.

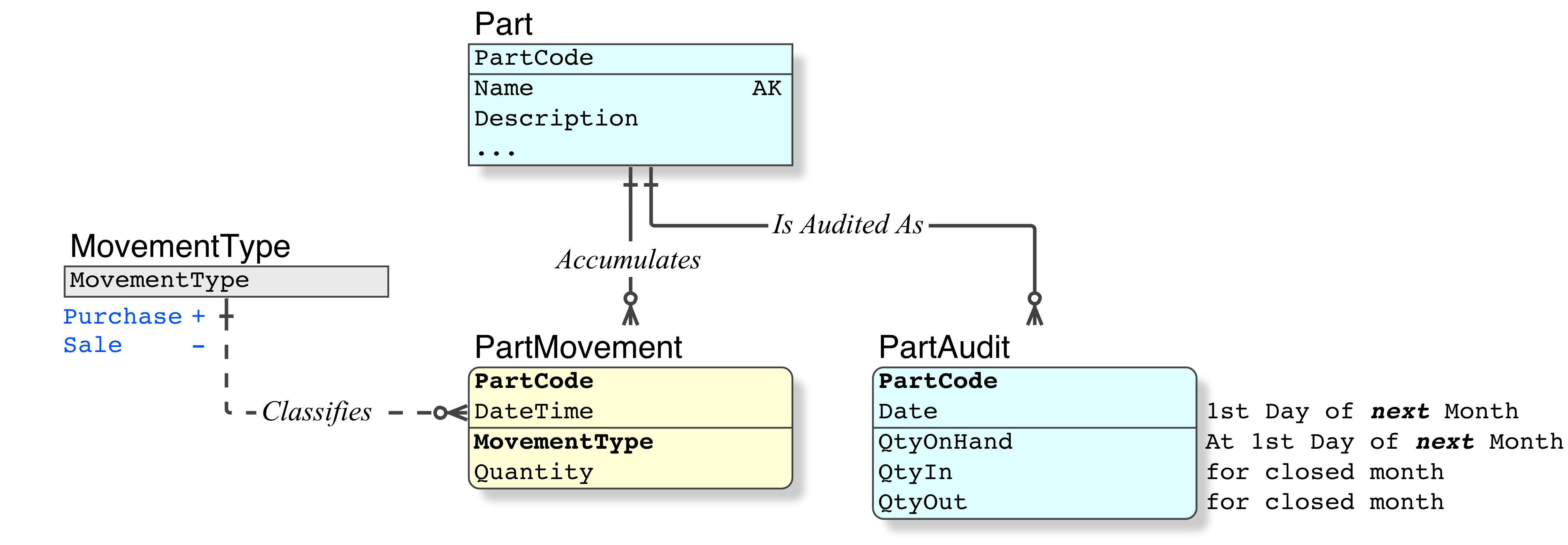

This method applies to any system that has similar operations; needs; historic monthly figures vs current-month requirements, such as Inventory Control, etc.

Consideration

First, the considerations.

Never duplicate data.

If theCurrent Balancecan be derived (and here it is simple, as you note), do not duplicate it with a summary column.- Such a column is a duplication of data. It breaks Normalisation rules.

- Further, it creates an

Update Anomaly, which otherwise does not exist.

If you do use a summary column, when a new

AccountTransactionis inserted, the summary columnCurrent Balancevalue is rendered obsolete, therefore it must be updated all the time anyway. That is the consequence of theUpdate Anomaly. Which eliminates the value of having it.External publication.

Separate point. If the balance is published, as in a monthly Bank Statement, such documents usually have legal restrictions and implications, thus that published Current Balance value must not change after publication.Any change, after the publication date, in the database, of a figure that is published externally, is evidence of dishonest conduct, fraud, etc.

- Such an act, attempting to change published history, is the hallmark of a novice. Novices and mental patients will insist that history can be changed. But as everyone should know, ignorance of the law does not constitute a valid defence.

You wouldn't want your bank, in Apr 2015, to change the Current Balance that they published in their Bank Statement to you of Dec 2014.

That figure has to be viewed as an Audit figure, published and unchangeable.

To correct an erroroneous

AccountTransactionthat was made in the past, that is being corrected in the present, the correction or adjustment that is necessary, is made as a newAccountTransactionin the current month (even though it applies to some previous month or duration).This is because that applicable-to month is closed; Audited; and published, because one cannot change history after it has happened and it has been recorded. The only effective month is the current one.

For interest-bearing systems, etc, in not-so-primitive countries, when an error is found, and it has an historic effect (eg. you find out in Apr 2015 that the interest calculated monthly on a security has been incorrect, since Dec 2014), the value of the corrected interest payment/deduction is calculated today, for the number of days that were in error, and the sum is inserted as a

AccountTransactionin the current month. Again, the only effective month is the current one.And of course, the interest rate for the security has to be corrected as well, so that that error does not repeat.

The same principles apply to Inventory control systems. It maintains sanity.

All real accounting systems (ie. those that are accredited by the Audit Authority in the applicable country, as opposed to the mickey mouse "packages" that abound) use a Double Entry Accounting system for all

AccountTransactions, precisely because it prevents a raft of errors, the most important of which is, funds do not get "lost". That requires a General Ledger and Double-Entry Accounting.- You have not asked for that, you do not need that, therefore I am not describing it here. But do remember it, in case money goes "missing", because that is what you will have to implement, not some band-aid solution; not yet another unaccredited "package".

This Answer services the Question that is asked, which is not Double-Entry Accounting.

For a full treatment of that subject (detailed data model; examples of accounting Transactions; rows affected; and SQL code examples), refer to this Q&A:

Relational Data Model for Double-Entry Accounting.

- The major issues that affect performance are outside the scope of this question, but to furnish a short and determinant answer: it is dependent on:

Whether you implement a genuine Relational Database or not (eg. a 1960's Record Filing System, which is characterised by

Record IDs, deployed in an SQL container for convenience).whether you us a genuine SQL Platform (architected; stable; reliable; SQL-compliant; OLTP; etc) or the pretend-SQL freeware (herd of programs; ever-changing; no complaince; scales like a fish.

The use of genuine Relational Keys, etc, will maintain high performance, regardless of the population of the tables.

Conversely, an RFS will perform badly, they simply cannot perform. "Scale" when used in the context of an RFS, is a fraudulent term: it hides the cause and seeks to address everything but the cause. Most important, such systems have none of the Relational Integrity; the Relational Power; or the Relational Speed, of a Relational DBMS.

Implementation

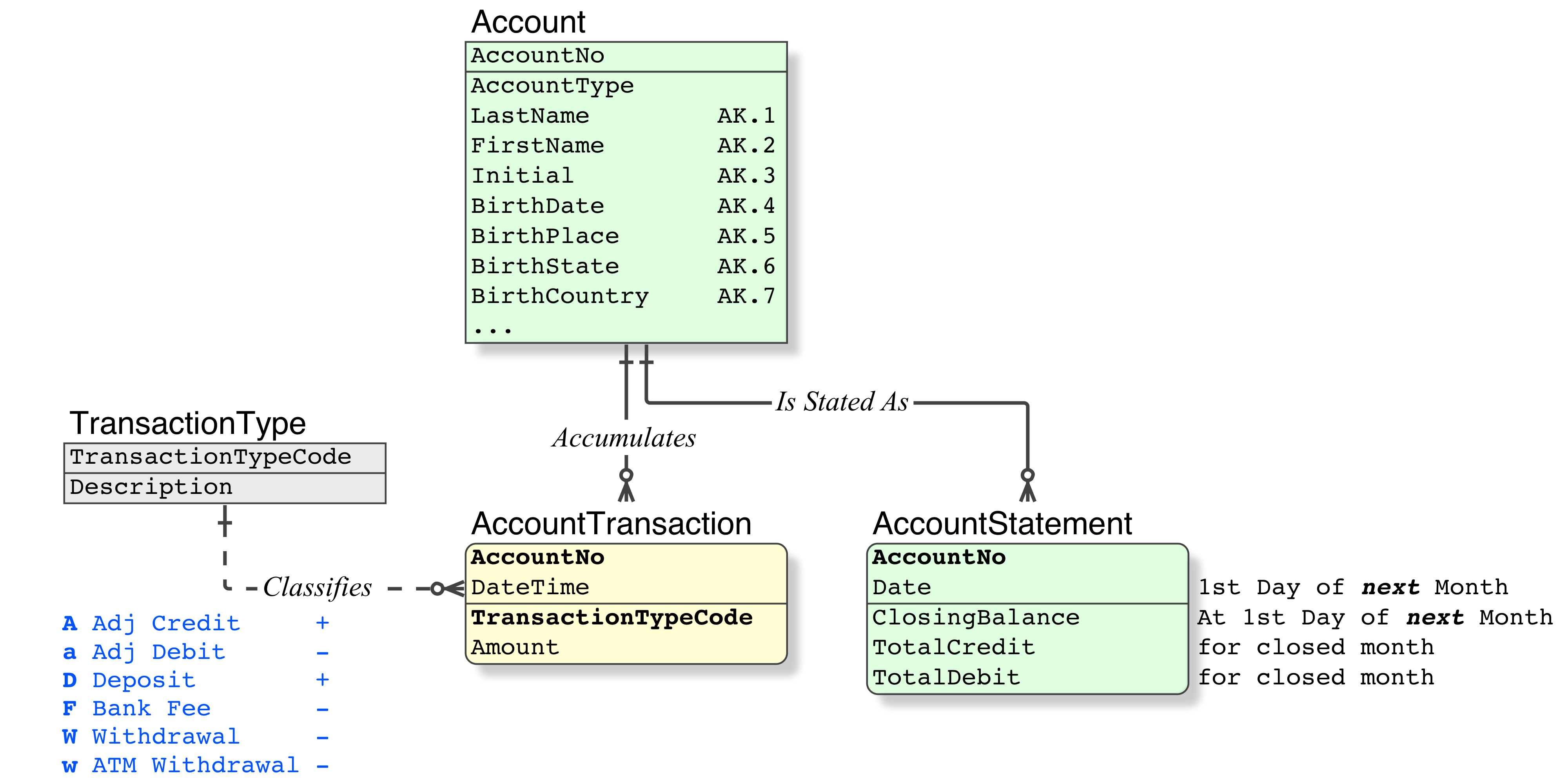

Relational Data Model • Bank Account

Relational Data Model • Inventory

Notation

All my data models are rendered in IDEF1X, the Standard for modelling Relational databases since 1993.

My IDEF1X Introduction is essential reading for those who are new to the Relational Model, or its modelling method. Note that IDEF1X models are rich in detail and precision, showing all required details, whereas home-grown models have far less than that. Which means, the notation has to be understood.

Content

For each

AccountNo, there will be oneAccountStatementrow per per month, with aClosingBalance; StatementDate(usually the first day of the next month) and other Statement details for the closed month.This is not a "duplicate" or a derivable value that is stored because (a) the value applies to just one

Date, (b) it is demanded for Audit and sanity purposes, and (c) provides a substantial performance benefit (elimination of the SUM( all transactions ) ).For Inventory, for each

PartCode, there will be onePartAuditrow per month, with aQtyOnHandcolumn.It has an additional value, in that it constrains the scope of the Transaction rows required to be queried to the current month

Again, if your table is Relational and you have an SQL Platform, the Primary Key for

AccountTransactionwill be (AccountNo, TransactionDateTime) which will retrieve the Transactions at millisecond speeds.Whereas for a Record Filing System, the "primary key" will be

AccountTransactionID, and you will be retrieving the current month by Transaction Date, which may or may not be indexed correctly, and the rows required will be spread across the file. In any case at far less than ClusteredIndex speeds, and due to the spread, it will incur a tablescan.

The

AccountTransactiontable remains simple (the real world notion of a bank account Transaction is simple). It has a single positiveAmountcolumn.For each

Account, theCurrentBalanceis:the

AccountStatement.ClosingBalanceof the previous month, dated the first of the next month for convenience- for inventory, the

PartAudit.QtyOnHand

- for inventory, the

plus the

SUM( Transaction.Amounts )in the current month, where theAccountTransactionTypeindicates a deposit- for inventory, the

PartMovement.Quantity

- for inventory, the

minus the

SUM( Transaction.Amount )in the current month, where theAccountTransactionTypeindicates a withdrawal(code provided below).

In this Method, the

AccountTransactionsin the current month, only, are in a state of flux, thus they must be retrieved. All previous months are published and closed, thus the Audit figureAccountStatement.ClosingBalancemust be used.The older rows in the

AccountTransactiontable can be purged. Older than ten years for public money, five years otherwise, one year for hobby club systems.Of course, it is essential that any code relating to accounting systems uses genuine OLTP Standards and genuine SQL ACID Transactions (not possible in the pretend-SQL freeware).

This design incorporates all scope-level performance considerations (if this is not obvious, please ask for expansion). Scaling inside the database is a non-issue, any scaling issues that remain are actually outside database.

Corrective Advice

These items need to be stated only because incorrect advice has been provided in many SO Answers (and up-voted by the masses, democratically, of course), and the internet is chock-full of incorrect advice (amateurs love to publish their subjective "truths"):

Evidently, some people do not understand that I have given a Method in technical terms, to operate against a clear data model. As such, it is not pseudo-code for a specific application in a specific country. The Method is for capable developers, it is not detailed enough for those who need to be lead by the hand.

They also do not understand that the cut-off period of a month is an example: if your cut-off for Tax Office purposes is quarterly, then by all means, use a quarterly cut-off; if the only legal requirement you have is annual, use annual.

Even if your cut-off is quarterly for external or compliance purposes, the company may well choose a monthly cut-off, for internal Audit and sanity purposes (ie. to keep the length of the period of the state of flux to a minimum).

Eg. in Australia, the Tax Office cut-off for businesses is quarterly, but larger companies cut-off their inventory control monthly (this saves having to chase errors over a long period).

Eg. banks have legal compliance requirements monthly, therefore they perform an internal Audit on the figures, and close the books, monthly.

In primitive countries and rogue states, banks keep their state-of-flux period at the maximum, for obvious nefarious purposes. Some of them only make their compliance reports annually. That is one reason why the banks in Australia do not fail.

In the

AccountTransactiontable, do not use negative/positive in the Amount column. Money always has a positive value, there is no such thing as negative twenty dollars (or that you owe me minus fifty dollars), and then working out that the double negatives mean something else.The movement direction, or what you are going to do with the funds, is a separate and discrete fact (to the

AccountTransaction.Amount). Which requires a separate column (two facts in one datum breaks Normalisation rules, with the consequence that it introduces complexity into the code).Implement a

AccountTransactionTypereference table, the Primary Key of which is (D, W) for Deposit/Withdrawal as your starting point. As the system grows, simply add (A, a, F, w) for Adjustment Credit; Adjustment Debit; Bank Fee; ATM_Withdrawal; etc.No code changes required.

In some primitive countries, litigation requirements state that in any report that lists Transactions, a running total must be shown on every line. (Note, this is not an Audit requirement because those are superior [(refer Method above) to the court requirement; Auditors are somewhat less stupid than lawyers; etc.)

Obviously, I would not argue with a court requirement. The problem is that primitive coders translate that into: oh, oh, we must implement a

AccountTransaction.CurrentBalancecolumn. They fail to understand that:the requirement to print a column on a report is not a dictate to store a value in the database

a running total of any kind is a derived value, and it is easily coded (post a question if it isn't easy for you). Just implement the required code in the report.

implementing the running total eg.

AccountTransaction.CurrentBalanceas a column causes horrendous problems:introduces a duplicated column, because it is derivable. Breaks Normalisation. Introduces an Update Anomaly.

the Update Anomaly: whenever a Transaction is inserted historically, or a

AccountTransaction.Amountis changed, all theAccountTransaction.CurrentBalancesfrom that date to the present have to be re-computed and updated.

in the above case, the report that was filed for court use, is now obsolete (every report of online data is obsolete the moment it is printed). Ie. print; review; change the Transaction; re-print; re-review, until you are happy. It is meaningless in any case.

which is why, in less-primitive countries, the courts do not accept any old printed paper, they accept only published figures, eg. Bank Statements, which are already subject to Audit requirements (refer the Method above), and which cannot be recalled or changed and re-printed.

Comments

Alex:

yes code would be nice to look at, thank you. Even maybe a sample "bucket shop" so people could see the starting schema once and forever, would make world much better.

For the data model above.

Code • Report Current Balance

SELECT AccountNo,

ClosingDate = DATEADD( DD, -1 Date ), -- show last day of previous

ClosingBalance,

CurrentBalance = ClosingBalance + (

SELECT SUM( Amount )

FROM AccountTransaction

WHERE AccountNo = @AccountNo

AND TransactionTypeCode IN ( "A", "D" )

AND DateTime >= CONVERT( CHAR(6), GETDATE(), 2 ) + "01"

) - (

SELECT SUM( Amount )

FROM AccountTransaction

WHERE AccountNo = @AccountNo

AND TransactionTypeCode NOT IN ( "A", "D" )

AND DateTime >= CONVERT( CHAR(6), GETDATE(), 2 ) + "01"

)

FROM AccountStatement

WHERE AccountNo = @AccountNo

AND Date = CONVERT( CHAR(6), GETDATE(), 2 ) + "01"

By denormalising that transactions log I trade normal form for more convenient queries and less changes in views/materialised views when I add more tx types

God help me.

When you go against Standards, you place yourself in a third-world position, where things that are not supposed to break, that never break in first-world countries, break.

It is probably not a good idea to seek the right answer from an authority, and then argue against it, or argue for your sub-standard method.

Denormalising (here) causes an Update Anomaly, the duplicated column, that can be derived from TransactionTypeCode. You want ease of coding, but you are willing to code it in two places, rather than one. That is exactly the kind of code that is prone to errors.

A database that is fully Normalised according to Dr E F Codd's Relational Model provides for the easiest, the most logical, straight-forward code. (In my work, I contractually guarantee every report can be serviced by a single

SELECT.)ENUMis not SQL. (The freeware NONsql suites have no SQL compliance, but they do have extras which are not required in SQL.) If ever your app graduates to a commercial SQL platform, you will have to re-write all thoseENUMsas ordinary LookUp tables. With aCHAR(1)or aINTas the PK. Then you will appreciate that it is actually a table with a PK.An error has a value of zero (it also has negative consequences). A truth has a value of one. I would not trade a one for a zero. Therefore it is not a trade-off. It is just your development decision.

Does YAGNI apply to database design?

If you have good testing that hits the database, I would extend YAGNI to your database design.

In general, it is easy to add columns and tables, and less easy to remove or modify them significantly. Take that into consideration when you design tables (i.e. if a customer can have multiple users, don't add userid to your customers table. Do it right the first time).

Database Design for Revisions?

- Do not put it all in one table with an IsCurrent discriminator attribute. This just causes problems down the line, requires surrogate keys and all sorts of other problems.

- Design 2 does have problems with schema changes. If you change the Employees table you have to change the EmployeeHistories table and all the related sprocs that go with it. Potentially doubles you schema change effort.

- Design 1 works well and if done properly does not cost much in terms of a performance hit. You could use an xml schema and even indexes to get over possible performance problems. Your comment about parsing the xml is valid but you could easily create a view using xquery - which you can include in queries and join to. Something like this...

CREATE VIEW EmployeeHistory

AS

, FirstName, , DepartmentId

SELECT EmployeeId, RevisionXML.value('(/employee/FirstName)[1]', 'varchar(50)') AS FirstName,

RevisionXML.value('(/employee/LastName)[1]', 'varchar(100)') AS LastName,

RevisionXML.value('(/employee/DepartmentId)[1]', 'integer') AS DepartmentId,

FROM EmployeeHistories

Related Topics

How to Parse JSON in Oracle SQL? (Version:11.2.0)

SQL Missing Right Parenthesis on Order by Statement

What Do Column Flags Mean in MySQL Workbench

Difference Between Stored Procedures and User Defined Functions

Remove Duplicates from SQL Union

Postgresql Query for Getting N-Level Parent-Child Relation Stored in a Single Table

Why Does Microsoft SQL Server Check Columns But Not Tables in Stored Procs

SQL Like Search String Starts With

Find Groups with Matching Rows

Join Tables on Columns of Composite Foreign/Primary Key in a Query

Good Reasons Not to Use a Relational Database

SQL Server If Exists Then 1 Else 2

Select All Threads and Order by the Latest One