What's the difference between using ARAnchor to insert a node and directly insert a node?

Update: As of iOS 11.3 (aka "ARKit 1.5"), there is a difference between adding an ARAnchor to the session (and then associating SceneKit content with it through ARSCNViewDelegate callbacks) and just placing content in SceneKit space.

When you add an anchor to the session, you're telling ARKit that a certain point in world space is relevant to your app. ARKit can then do some extra work to make sure that its world coordinate space lines up accurately with the real world, at least in the vicinity of that point.

So, if you're trying to make virtual content appear "attached" to some real-world point of interest, like putting an object on a table or wall, you should see less "drift" due to world-tracking inaccuracy if you give that object an anchor than if you just place it in SceneKit space. And if that object moves from one static position to another, you'll want to remove the original anchor and add one at the new position afterward.
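As a rough sketch of the "remove the original anchor and add one at the new position" advice, something like the following could work. The `AnchoredObject` class, its `settle(at:in:)` method and the anchor name are hypothetical names used only for illustration; `ARAnchor(name:transform:)` requires iOS 12 or later.

```swift
import ARKit

/// Hypothetical helper that keeps a single ARAnchor in sync with
/// the resting position of one virtual object.
final class AnchoredObject {
    private(set) var anchor: ARAnchor?

    /// Call once the object has come to rest at `transform` (world space).
    func settle(at transform: simd_float4x4, in session: ARSession) {
        // Remove the anchor at the old position, if there is one.
        if let oldAnchor = anchor {
            session.remove(anchor: oldAnchor)
        }
        // Add a fresh anchor at the new position.
        let newAnchor = ARAnchor(name: "virtualObject", transform: transform)
        session.add(anchor: newAnchor)
        anchor = newAnchor
    }
}
```

ARAnchor transforms are immutable, which is why the anchor is replaced rather than moved.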

Additionally, in iOS 11.3 you can opt in to "relocalization", a process that helps ARKit resume a session after it gets interrupted (by a phone call, switching apps, etc). The session still works while it's trying to figure out how to map where you were before to where you are now, which might result in the world-space positions of anchors changing once relocalization succeeds.
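A minimal sketch of opting in to relocalization, assuming a delegate object of your own (the `RelocalizationObserver` class name is made up for this example):

```swift
import ARKit

/// Hypothetical session delegate that opts in to relocalization.
final class RelocalizationObserver: NSObject, ARSessionDelegate {

    // Returning true asks ARKit to try to relocalize after an interruption
    // instead of simply continuing with a possibly misaligned world space.
    func sessionShouldAttemptRelocalization(_ session: ARSession) -> Bool {
        return true
    }

    // While relocalizing, the tracking state is limited with the reason .relocalizing.
    func session(_ session: ARSession, cameraDidChangeTrackingState camera: ARCamera) {
        if case .limited(.relocalizing) = camera.trackingState {
            print("Trying to resume the previous session...")
        }
    }
}
```

Assign an instance to `session.delegate` and keep a strong reference to it, since the session holds its delegate weakly.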

(On the other hand, if you're just making space invaders that float in the air, perfectly matching world space isn't as important, and thus you won't really see much difference between anchor-based and non-anchor-based positioning.)

See the bit around "Use anchors to improve tracking quality around virtual objects" in Apple's Handling 3D Interaction and UI Controls in Augmented Reality article / sample code.

The rest of this answer remains historically relevant to iOS 11.0-11.2.5 and explains some context, so I'll leave it below...

Consider first the use of ARAnchor without SceneKit.

- If you're using ARSKView, you need a way to reference positions / orientations in 3D (real-world) space, because SpriteKit isn't 3D. You need ARAnchor to keep track of positions in 3D so that they can get mapped into 2D.

- If you're building your own engine with Metal (or GL, for some strange reason)... that's not a 3D scene description API, it's a GPU programming API, so it doesn't really have a notion of world space. You can use ARAnchor as a bridge between ARKit's notion of world space and whatever you build.

So in some cases you need ARAnchor because that's the only sensible way to refer to 3D positions. (And of course, if you're using plane detection, you need ARPlaneAnchor because ARKit will actually move those relative to scene space as it refines its estimates of where planes are.)

With ARSCNView, SceneKit already has a 3D world coordinate space, and ARKit does all the work of making that space match up to the real-world space ARKit maps out. So, given a float4x4 transform that describes a position (and orientation, etc) in world space, you can either:

- Create an ARAnchor, add it to the session, and respond to the ARSCNViewDelegate callback to provide SceneKit content for each anchor, which ARKit will add to and position in the scene for you.

- Create an SCNNode, set its simdTransform, and add it as a child of the scene's rootNode.

As long as you have a running ARSession, there's no difference between the two approaches — they're equivalent ways to say the same thing. So if you like doing things the SceneKit way, there's nothing wrong with that. (You can even use SCNVector3 and SCNMatrix4 instead of SIMD types if you want, but you'll have to convert back and forth if you're also getting SIMD types from ARKit APIs.)
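The two approaches could be sketched side by side like this. `PlacementController` and its method names are hypothetical, and the sphere geometry is just placeholder content.

```swift
import ARKit
import SceneKit

/// Hypothetical controller; `sceneView` is assumed to have a running ARSession.
final class PlacementController: NSObject, ARSCNViewDelegate {
    let sceneView: ARSCNView

    init(sceneView: ARSCNView) {
        self.sceneView = sceneView
        super.init()
        sceneView.delegate = self
    }

    // Approach 1: add an anchor and let ARKit position the node created for it.
    func placeWithAnchor(at transform: simd_float4x4) {
        sceneView.session.add(anchor: ARAnchor(transform: transform))
    }

    // ARKit asks for a node for every anchor added to the session,
    // including anchors it adds itself (such as plane anchors).
    func renderer(_ renderer: SCNSceneRenderer, nodeFor anchor: ARAnchor) -> SCNNode? {
        return SCNNode(geometry: SCNSphere(radius: 0.02))
    }

    // Approach 2: position a node yourself in SceneKit world space.
    func placeDirectly(at transform: simd_float4x4) {
        let node = SCNNode(geometry: SCNSphere(radius: 0.02))
        node.simdTransform = transform
        sceneView.scene.rootNode.addChildNode(node)
    }
}
```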

The one time these approaches differ is when the session is reset. If world tracking fails, you resume an interrupted session, and/or you start a session over again, "world space" may no longer line up with the real world in the same way it did when you placed content in the scene.

In this case, you can have ARKit remove anchors from the session — see the run(_:options:) method and ARSession.RunOptions. (Yes, all of them, because at this point you can't trust any of them to be valid anymore.) If you placed content in the scene using anchors and delegate callbacks, ARKit will nuke all the content. (You get delegate callbacks that it's being removed.) If you placed content with SceneKit API, it stays in the scene (but most likely in the wrong place).
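A minimal sketch of such a reset, assuming an ARSCNView; the `restartTracking` function and the `placedNodes` parameter are hypothetical:

```swift
import ARKit
import SceneKit

/// Restarts world tracking and discards all anchors.
/// `placedNodes` stands for content you added through SceneKit API directly.
func restartTracking(on sceneView: ARSCNView, placedNodes: [SCNNode]) {
    let configuration = ARWorldTrackingConfiguration()

    // Anchor-based content is removed by ARKit along with its anchors.
    sceneView.session.run(configuration,
                          options: [.resetTracking, .removeExistingAnchors])

    // Content placed without anchors stays in the scene unless you remove it yourself.
    placedNodes.forEach { $0.removeFromParentNode() }
}
```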

So, which to use sort of depends on how you want to handle session failures and interruptions (and outside of that there's no real difference).

What's the difference between ARAnchor and AnchorEntity?

Updated: July 10, 2022.

ARAnchor and AnchorEntity classes were both made for the same divine purpose – to tether 3D models to your real-world objects.

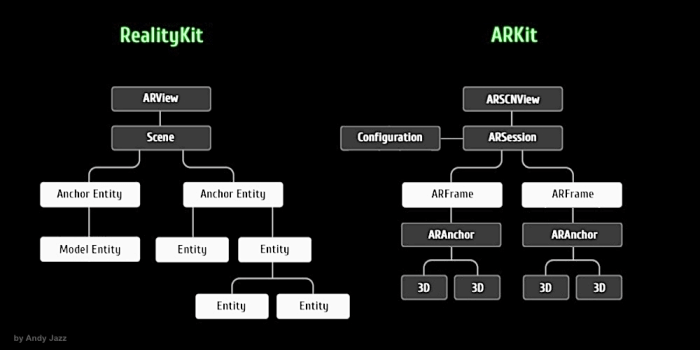

RealityKit AnchorEntity greatly extends the capabilities of ARKit ARAnchor. The most important difference between these two is that AnchorEntity automatically tracks a real world target, but ARAnchor needs session(...) instance method (or SceneKit's renderer(...) instance method) to accomplish this. Take into consideration that the collection of ARAnchors is stored in the ARSession object and the collection of AnchorEntities is stored in the Scene.

In addition, generating ARAnchors requires a manual Session config, while generating AnchorEntities requires minimal developer involvement.

Hierarchical differences:

The main advantage of RealityKit is the ability to use different AnchorEntities at the same time, such as .plane, .body or .object. There's automaticallyConfigureSession instance property in RealityKit. When enabled, the ARView automatically runs an ARSession with a config that will get updated depending on your camera mode and scene anchors. When disabled, the session needs to be run manually with your own config.

arView.automaticallyConfigureSession = true // default
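For comparison, here is a hedged sketch of the manual route with the property disabled. The `runManualSession` function name and the box model are placeholders chosen for this example.

```swift
import ARKit
import RealityKit

/// Runs the session with your own config instead of the automatic one.
func runManualSession(on arView: ARView) {
    arView.automaticallyConfigureSession = false

    // With automatic configuration off, plane detection must be enabled by hand.
    let config = ARWorldTrackingConfiguration()
    config.planeDetection = [.horizontal, .vertical]
    arView.session.run(config)

    // The anchor entity attaches to the first suitable horizontal plane.
    let anchor = AnchorEntity(plane: .horizontal)
    anchor.addChild(ModelEntity(mesh: .generateBox(size: 0.1)))
    arView.scene.addAnchor(anchor)
}
```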

In ARKit, as you know, you can run just one config in the current session: World, Body, or Geo. There is an exception in ARKit, however - you can run two configs together - FaceTracking and WorldTracking (one of them has to be a driver, and the other one – driven).

let config = ARFaceTrackingConfiguration()

config.isWorldTrackingEnabled = true

arView.session.run(config)

Apple Developer documentation says:

In RealityKit framework you use an AnchorEntity instance as the root of an entity hierarchy, and add it to the anchors collection for a Scene instance. This enables ARKit to place the anchor entity, along with all of its hierarchical descendants, into the real world. In addition to the components the anchor entity inherits from the Entity class, the anchor entity also conforms to the HasAnchoring protocol, giving it an AnchoringComponent instance.

AnchorEntity has three building blocks:

- Transform component (transformation matrix containing translate, rotate and scale)

- Synchronization component (entity's synchronization data for multiuser experience)

- Anchoring component (lets you choose a type of anchor: world, body or image)

All entities have Synchronization component that helps organise collaborative sessions.

AnchorEntity has nine specific anchor types for nine different purposes:

- ARAnchor (helps implement 10 ARKit anchors, including ARGeoAnchor and ARAppClipCodeAnchor)

- body

- camera

- face

- image

- object

- plane

- world

- raycastResult

You can use both the ARAnchor and AnchorEntity classes in your app at the same time. Or you can use just the AnchorEntity class, because it is self-sufficient.

For additional info about ARAnchor and AnchorEntity, please look at THIS POST.

ARAnchor for SCNNode

If you have a look at the Apple Docs it states that:

To track the positions and orientations of real or virtual objects

relative to the camera, create anchor objects and use the add(anchor:)

method to add them to your AR session.

As such, I think that since you aren't using PlaneDetection you would need to create an ARAnchor manually if it is needed:

Whenever you place a virtual object, always add an ARAnchor representing its position and orientation to the ARSession. After moving a virtual object, remove the anchor at the old position and create a new anchor at the new position. Adding an anchor tells ARKit that a position is important, improving world tracking quality in that area and helping virtual objects appear to stay in place relative to real-world surfaces.

You can read more about this in the following thread What's the difference between using ARAnchor to insert a node and directly insert a node?

Anyway, in order to get you started I began by creating an SCNNode called currentNode:

var currentNode: SCNNode?

Then using a UITapGestureRecognizer I created an ARAnchor manually at the touchLocation:

    @objc func handleTap(_ gesture: UITapGestureRecognizer) {

        //1. Get The Current Touch Location
        let currentTouchLocation = gesture.location(in: self.augmentedRealityView)

        //2. If We Have Hit A Feature Point Get The Result
        if let hitTest = augmentedRealityView.hitTest(currentTouchLocation, types: [.featurePoint]).last {

            //3. Create An Anchor At The World Transform
            let anchor = ARAnchor(transform: hitTest.worldTransform)

            //4. Add It To The Session
            augmentedRealitySession.add(anchor: anchor)
        }
    }

Having added the anchor, I then used the ARSCNViewDelegate callback to create the currentNode like so:

    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {

        if currentNode == nil {
            currentNode = SCNNode()
            let nodeGeometry = SCNBox(width: 0.2, height: 0.2, length: 0.2, chamferRadius: 0)
            nodeGeometry.firstMaterial?.diffuse.contents = UIColor.cyan
            currentNode?.geometry = nodeGeometry

            // `node` is already placed at the anchor's transform by ARKit, so the child
            // stays at the local origin. Applying the anchor's world translation again
            // would offset the box a second time.
            node.addChildNode(currentNode!)
        }
    }

In order to test that it works, i.e. being able to log the corresponding ARAnchor, I changed the tapGesture method to include this at the end:

    if let anchorHitTest = augmentedRealityView.hitTest(currentTouchLocation, options: nil).first {
        print(augmentedRealityView.anchor(for: anchorHitTest.node))
    }

Which in my ConsoleLog prints:

Optional(<ARAnchor: 0x1c0535680 identifier="23CFF447-68E9-451D-A64D-17C972EB5F4B" transform=<translation=(-0.006610 -0.095542 -0.357221) rotation=(-0.00° 0.00° 0.00°)>>)

Hope it helps...

How to make an SCNNode facing toward ARAnchor

I've noticed that the SCNLookAtConstraint works when the orientation of my phone is parallel to the horizon. (screen facing upward)

And this line of code does the magic !! guideNode.pivot = SCNMatrix4Rotate(guideNode.pivot, Float.pi, 0, 1, 1)

If you're interested, see here.

public func showDirection(of object: arItemList) {

guard let pointOfView = sceneView.pointOfView else { return }

// remove previous instruction

for node in pointOfView.childNodes {

node.removeFromParentNode()

}

// target

let desNode = SCNNode()

let targetPoint = SCNVector3.positionFromTransform(object.1.transform)

desNode.worldPosition = targetPoint

// guide

let startPoint = SCNVector3(0, 0 , -1.0)

let guideNode = loadObject()

guideNode.scale = SCNVector3(0.7, 0.7, 0.7)

guideNode.position = startPoint

let lookAtConstraints = SCNLookAtConstraint(target: desNode)

lookAtConstraints.isGimbalLockEnabled = true

// Here's the magic

guideNode.pivot = SCNMatrix4Rotate(guideNode.pivot, Float.pi, 0, 1, 1)

guideNode.constraints = [lookAtConstraints]

pointOfView.addChildNode(guideNode)

}

What is ARAnchor exactly?

Updated: July 10, 2022.

TL;DR

ARAnchor

ARAnchor is an invisible null-object that holds a 3D model at anchor's position. Think of ARAnchor just like it's a parent transform node with local axis (you can translate, rotate and scale it). Every 3D model has a pivot point, right? So this pivot point must meet an ARAnchor in ARKit.

If you're not using anchors in ARKit app (in RealityKit it's impossible not to use anchors because they are part of a scene), your 3D models may drift from where they were placed, and this will dramatically impact app’s realism and user experience. Thus, anchors are crucial elements of any AR scene.

According to ARKit 2017 documentation:

ARAnchor is a real-world position and orientation that can be used for placing objects in AR Scene. Adding an anchor to the session helps ARKit to optimize world-tracking accuracy in the area around that anchor, so that virtual objects appear to stay in place relative to the real world. If a virtual object moves, remove the corresponding anchor from the old position and add one at the new position.

ARAnchor is a parent class of other 10 anchors' types in ARKit, hence all those subclasses inherit from ARAnchor. Usually you do not use ARAnchor directly. I must also say that ARAnchor and Feature Points have nothing in common. Feature Points are rather special visual elements for tracking and debugging.

ARAnchor doesn't automatically track a real world target. When you need automation, you have to use the renderer() or session() instance methods, which you can implement if you conform to the ARSCNViewDelegate or ARSessionDelegate protocols, respectively.

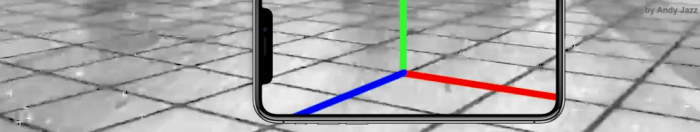

Here's an image with a visual representation of a plane anchor. Keep in mind: by default, you can see neither a detected plane nor its corresponding ARPlaneAnchor. So, if you want to see the anchor in your scene, you may "visualize" it using three thin SCNCylinder primitives. Each cylinder's color represents a particular axis: RGB is XYZ.
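One possible way to build such a three-cylinder gizmo in SceneKit is sketched below. The `makeAxesNode` function and its default sizes are assumptions made for this example, not part of any framework.

```swift
import SceneKit
import UIKit

/// Builds an RGB = XYZ axis gizmo out of three thin cylinders.
func makeAxesNode(length: CGFloat = 0.1, radius: CGFloat = 0.002) -> SCNNode {
    let axes = SCNNode()
    let half = Float(length) / 2

    // An SCNCylinder is aligned with its local Y axis, so X and Z need a rotation.
    let specs: [(color: UIColor, eulerAngles: SCNVector3, position: SCNVector3)] = [
        (.red,   SCNVector3(0, 0, -Float.pi / 2), SCNVector3(half, 0, 0)),  // X axis
        (.green, SCNVector3(0, 0, 0),             SCNVector3(0, half, 0)),  // Y axis
        (.blue,  SCNVector3(Float.pi / 2, 0, 0),  SCNVector3(0, 0, half))   // Z axis
    ]

    for spec in specs {
        let cylinder = SCNCylinder(radius: radius, height: length)
        cylinder.firstMaterial?.diffuse.contents = spec.color
        cylinder.firstMaterial?.lightingModel = .constant  // unlit, always visible

        let node = SCNNode(geometry: cylinder)
        node.eulerAngles = spec.eulerAngles
        node.position = spec.position  // shift so each cylinder starts at the origin
        axes.addChildNode(node)
    }
    return axes
}
```

Inside renderer(_:didAdd:for:) you could then call `node.addChildNode(makeAxesNode())` to show the anchor's local axes.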

In ARKit you can automatically add ARAnchors to your scene using different scenarios:

ARPlaneAnchor

- If the horizontal and/or vertical planeDetection instance property is ON, ARKit is able to add ARPlaneAnchors to the running session. Enabling planeDetection can sometimes considerably increase the time required for the scene understanding stage.

ARImageAnchor (conforms to ARTrackable protocol)

- This type of anchor contains information about the position, orientation and scale of a detected image (the anchor is placed at the image's center) in a world-tracking or image-tracking configuration. To activate image tracking, use the detectionImages instance property. In ARKit 2.0 you can track up to 25 images in total, in ARKit 3.0 / 4.0 up to 100 images. But, in both cases, not more than 4 images simultaneously. However, it was promised that in ARKit 5.0 / 6.0 you can detect and track up to 100 images at a time (but it's still not implemented yet).

ARBodyAnchor (conforms to ARTrackable protocol)

- You can enable body tracking by running a session based on ARBodyTrackingConfiguration(). You'll get an ARBodyAnchor at the Root Joint of the CG Skeleton or, in other words, at the pelvis position of a tracked character.

ARFaceAnchor (conforms to ARTrackable protocol)

- Face Anchor stores information about the head's topology, pose and facial expression. You can track ARFaceAnchor with the help of the front TrueDepth camera. When a face is detected, the Face Anchor is attached slightly behind the nose, in the center of the face. In ARKit 2.0 you can track just one face, in ARKit 3.0 and higher up to 3 faces simultaneously. However, the number of tracked faces depends on the presence of a TrueDepth sensor and the processor version: gadgets with a TrueDepth camera can track up to 3 faces, and gadgets with an A12+ chipset but without a TrueDepth camera can also track up to 3 faces.

ARObjectAnchor

- This anchor type keeps information about the 6 Degrees of Freedom (position and orientation) of a real-world 3D object detected in a world-tracking session. Remember that you need to specify ARReferenceObject instances for the detectionObjects property of the session config.

AREnvironmentProbeAnchor

- Probe Anchor provides environmental lighting information for a specific area of space in a world-tracking session. ARKit's Artificial Intelligence uses it to supply reflective shaders with environmental reflections.

ARParticipantAnchor

- This is an indispensable anchor type for multiuser AR experiences. If you want to employ it, set the isCollaborationEnabled property to true in ARWorldTrackingConfiguration. Then import the MultipeerConnectivity framework.

ARMeshAnchor

- ARKit and LiDAR subdivide the reconstructed real-world scene surrounding the user into mesh anchors with corresponding polygonal geometry. Mesh anchors constantly update their data as ARKit refines its understanding of the real world. Although ARKit updates a mesh to reflect a change in the physical environment, the mesh's subsequent change is not intended to reflect in real time. Sometimes your reconstructed scene can have up to 30-40 anchors or even more. This is due to the fact that each classified object (wall, chair, door or table) has its own personal anchor. Each ARMeshAnchor stores data about corresponding vertices, one of eight cases of classification, its faces and vertices' normals.

ARGeoAnchor (conforms to ARTrackable protocol)

- In ARKit 4.0+ there's a geo anchor (a.k.a. location anchor) that tracks a geographic location using GPS, Apple Maps and additional environment data coming from Apple servers. This type of anchor identifies a specific area in the world that the app can refer to. When a user moves around the scene, the session updates a location anchor’s transform based on coordinates and device’s compass heading of a geo anchor. Look at the list of supported cities.

ARAppClipCodeAnchor (conforms to ARTrackable protocol)

- This anchor tracks the position and orientation of App Clip Code in the physical environment in ARKit 4.0+. You can use App Clip Codes to enable users to discover your App Clip in the real world. There are NFC-integrated App Clip Code and scan-only App Clip Code.

There are also other regular approaches to create anchors in AR session:

Hit-Testing methods

- Tapping on the screen projects a point onto an invisible detected plane, placing an ARAnchor at the location where an imaginary ray intersects this plane. By the way, the ARHitTestResult class and its corresponding hit-testing methods for ARSCNView and ARSKView were deprecated in iOS 14, so you have to get used to Ray-Casting.

Ray-Casting methods

- If you're using ray-casting, tapping on the screen results in a projected 3D point on an invisible detected plane. But you can also perform Ray-Casting between A and B positions in 3D scene. So, ray-casting can be 2D-to-3D and 3D-to-3D. When using the Tracked Ray-Casting, ARKit can keep refining the ray-cast as it learns more and more about detected surfaces.

Feature Points

- Special yellow points that ARKit automatically generates on high-contrast margins of real-world objects can give you a place to put an ARAnchor.

ARCamera's transform

- iPhone's or iPad's camera position and orientation simd_float4x4 can be easily used as a place for ARAnchor.

Any arbitrary World Position

- Place a custom world anchor (a plain ARAnchor) anywhere in your scene. You can generate ARKit's version of a world anchor, like AnchorEntity(.world(transform: mtx)) found in RealityKit.
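As an illustration of the ray-casting approach from the list above, here is a small sketch for ARSCNView (iOS 13+). The function name, the anchor name and the choice of `.estimatedPlane` with `.any` alignment are assumptions for this example.

```swift
import ARKit

/// Casts a ray from a screen point and adds an ARAnchor where it hits a surface.
func addAnchor(atScreenPoint point: CGPoint, in sceneView: ARSCNView) {
    // 2D-to-3D ray-cast: build a query from the screen point...
    guard let query = sceneView.raycastQuery(from: point,
                                             allowing: .estimatedPlane,
                                             alignment: .any),
          // ...and take the nearest intersection with a real-world surface.
          let result = sceneView.session.raycast(query).first
    else { return }

    let anchor = ARAnchor(name: "raycastAnchor", transform: result.worldTransform)
    sceneView.session.add(anchor: anchor)
}
```

You would typically call this from a tap gesture handler, passing `gesture.location(in: sceneView)` as the point.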

This code snippet shows you how to use an ARPlaneAnchor in a delegate's method: renderer(_:didAdd:for:):

func renderer(_ renderer: SCNSceneRenderer,

didAdd node: SCNNode,

for anchor: ARAnchor) {

guard let planeAnchor = anchor as? ARPlaneAnchor

else { return }

let grid = Grid(anchor: planeAnchor)

node.addChildNode(grid)

}

AnchorEntity

AnchorEntity is alpha and omega in RealityKit. According to RealityKit documentation 2019:

AnchorEntity is an anchor that tethers virtual content to a real-world object in an AR session.

RealityKit framework and Reality Composer app were announced at WWDC'19. They have a new class named AnchorEntity. You can use AnchorEntity as the root point of any entities' hierarchy, and you must add it to the Scene anchors collection. AnchorEntity automatically tracks real world target. In RealityKit and Reality Composer AnchorEntity is at the top of hierarchy. This anchor is able to hold a hundred of models and in this case it's more stable than if you use 100 personal anchors for each model.

Let's see how it looks in a code:

func makeUIView(context: Context) -> ARView {

let arView = ARView(frame: .zero)

let modelAnchor = try! Experience.loadModel()

arView.scene.anchors.append(modelAnchor)

return arView

}

AnchorEntity has three components:

- Anchoring component

- Transform component

- Synchronization component

To find out the difference between ARAnchor and AnchorEntity look at THIS POST.

Here are nine AnchorEntity's cases available in RealityKit 2.0 for iOS:

// Fixed position in the AR scene

AnchorEntity(.world(transform: mtx))

// For body tracking (a.k.a. Motion Capture)

AnchorEntity(.body)

// Pinned to the tracking camera

AnchorEntity(.camera)

// For face tracking (Selfie Camera config)

AnchorEntity(.face)

// For image tracking config

AnchorEntity(.image(group: "GroupName", name: "forModel"))

// For object tracking config

AnchorEntity(.object(group: "GroupName", name: "forObject"))

// For plane detection with surface classification

AnchorEntity(.plane([.any], classification: [.seat], minimumBounds: [1, 1]))

// When you use ray-casting

AnchorEntity(raycastResult: myRaycastResult)

// When you use ARAnchor with a given identifier

AnchorEntity(.anchor(identifier: uuid))

// Creates anchor entity on a basis of ARAnchor

AnchorEntity(anchor: arAnchor)

And here are only two AnchorEntity's cases available in RealityKit 2.0 for macOS:

// Fixed world position in VR scene

AnchorEntity(.world(transform: mtx))

// Camera transform

AnchorEntity(.camera)

It's also worth saying that you can use any subclass of ARAnchor for AnchorEntity needs:

func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {

guard let faceAnchor = anchors.first as? ARFaceAnchor

else { return }

arView.session.add(anchor: faceAnchor)

self.anchor = AnchorEntity(anchor: faceAnchor)

anchor.addChild(model)

arView.scene.anchors.append(self.anchor)

}

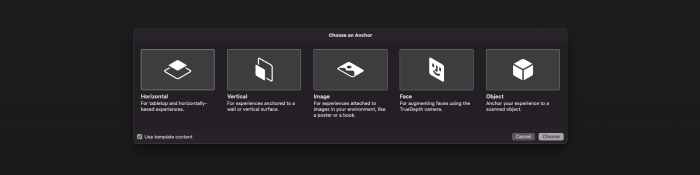

Reality Composer's anchors:

At the moment (February 2022) Reality Composer has just 4 types of AnchorEntities:

// 1a

AnchorEntity(plane: .horizontal)

// 1b

AnchorEntity(plane: .vertical)

// 2

AnchorEntity(.image(group: "GroupName", name: "forModel"))

// 3

AnchorEntity(.face)

// 4

AnchorEntity(.object(group: "GroupName", name: "forObject"))

AR USD Schemas

And of course, I should say a few words about preliminary anchors. There are 3 preliminary anchoring types (July 2022) for those who prefer Python scripting for USDZ models – these are plane, image and face preliminary anchors. Look at this code snippet to find out how to implement a schema pythonically.

def Cube "ImageAnchoredBox"(prepend apiSchemas = ["Preliminary_AnchoringAPI"])

{

uniform token preliminary:anchoring:type = "image"

rel preliminary:imageAnchoring:referenceImage = <ImageReference>

def Preliminary_ReferenceImage "ImageReference"

{

uniform asset image = @somePicture.jpg@

uniform double physicalWidth = 45

}

}

If you want to know more about AR USD Schemas, read this story on Medium.

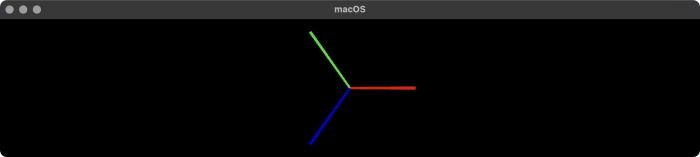

Visualizing AnchorEntity

Here's an example of how to visualize anchors in RealityKit (mac version).

import AppKit

import RealityKit

class ViewController: NSViewController {

@IBOutlet var arView: ARView!

var model = Entity()

let anchor = AnchorEntity()

fileprivate func visualAnchor() -> Entity {

let colors: [SimpleMaterial.Color] = [.red, .green, .blue]

for index in 0...2 {

let box: MeshResource = .generateBox(size: [0.20, 0.005, 0.005])

let material = UnlitMaterial(color: colors[index])

let entity = ModelEntity(mesh: box, materials: [material])

if index == 0 {

entity.position.x += 0.1

} else if index == 1 {

entity.transform = Transform(pitch: 0, yaw: 0, roll: .pi/2)

entity.position.y += 0.1

} else if index == 2 {

entity.transform = Transform(pitch: 0, yaw: -.pi/2, roll: 0)

entity.position.z += 0.1

}

model.scale *= 1.5

self.model.addChild(entity)

}

return self.model

}

override func awakeFromNib() {

anchor.addChild(self.visualAnchor())

arView.scene.addAnchor(anchor)

}

}

Distinguish between multiple tracked images at the same time?

You can easily do it using such instance properties as referenceImage and name.

// The detected image referenced by the image anchor.

var referenceImage: ARReferenceImage { get }

and:

// A descriptive name for your reference image.

var name: String? { get set }

Here's how they look in code:

func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {

guard let imageAnchor = anchor as? ARImageAnchor,

let _ = imageAnchor.referenceImage.name

else { return }

anchorsArray.append(imageAnchor)

if imageAnchor.referenceImage.name == "apple" {

print("Image with apple is successfully detected...")

}

}

Difference between renderer and session methods in ARKit

Both renderer(_:didUpdate:for:) and session(_:didUpdate:) instance methods update AR content (camera/model position or some specific data like face expressions) at 60 fps. They work almost identically but they have different purposes. For five renderer(...) instance methods you have to implement ARSCNViewDelegate protocol. For four session(...) instance methods you have to implement ARSessionDelegate protocol.

An official documentation says:

ARSCNViewDelegate's methods provide the automatic synchronization of SceneKit content with an AR session. And ARSessionDelegate's methods work directly with ARFrame objects.

The main difference is:

- you must use the renderer(_:didUpdate:for:) instance method for the ARKit+SceneKit combination, because it works with an ARSCNView object.

- also, you can use the session(_:didUpdate:) method for the ARKit+SceneKit combination, because it also works with an ARSCNView object.
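To make the comparison concrete, here is a hedged sketch of one object implementing both callbacks for face tracking. The `FaceUpdateHandler` class name is hypothetical, and the print statements stand in for real content updates.

```swift
import ARKit
import SceneKit

/// Hypothetical handler that receives face updates through both delegate routes.
final class FaceUpdateHandler: NSObject, ARSCNViewDelegate, ARSessionDelegate {

    // ARSCNViewDelegate: called per anchor, with the SceneKit node ARKit keeps in sync.
    func renderer(_ renderer: SCNSceneRenderer, didUpdate node: SCNNode, for anchor: ARAnchor) {
        guard let faceAnchor = anchor as? ARFaceAnchor else { return }
        let jawOpen = faceAnchor.blendShapes[.jawOpen]?.floatValue ?? 0
        print("renderer: jawOpen = \(jawOpen), node at \(node.simdWorldPosition)")
    }

    // ARSessionDelegate: called with all updated anchors, with no SceneKit node involved.
    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let faceAnchor as ARFaceAnchor in anchors {
            print("session: face at \(faceAnchor.transform.columns.3)")
        }
    }
}
```

With an ARSCNView named `sceneView`, you would set `sceneView.delegate = handler` for the first callback and `sceneView.session.delegate = handler` for the second.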