When to Denormalize a Database Design

Your senior colleague is a developer, not a data modeller. You are better off starting from scratch, without them. Normalisation is complicated only to those who will not read books. It is fair enough that he makes you think, but some of the issues are absurd.

Your numbers:

You need to appreciate the differences between actual online data, and historic data; then the difference between merely historic and archival needs. All of them are right for the specific business requirement, and wrong for all others, there is no universal right and wrong.

- why is there no paper-based copy of the invoice ? In most countries that would be a legal and tax requirement, what exactly is the difficulty of fishing out the old invoice ?

- where the database has the requirement of storing the closed invoices, then sure, as soon as the invoice is closed, you need a method of capturing that information.

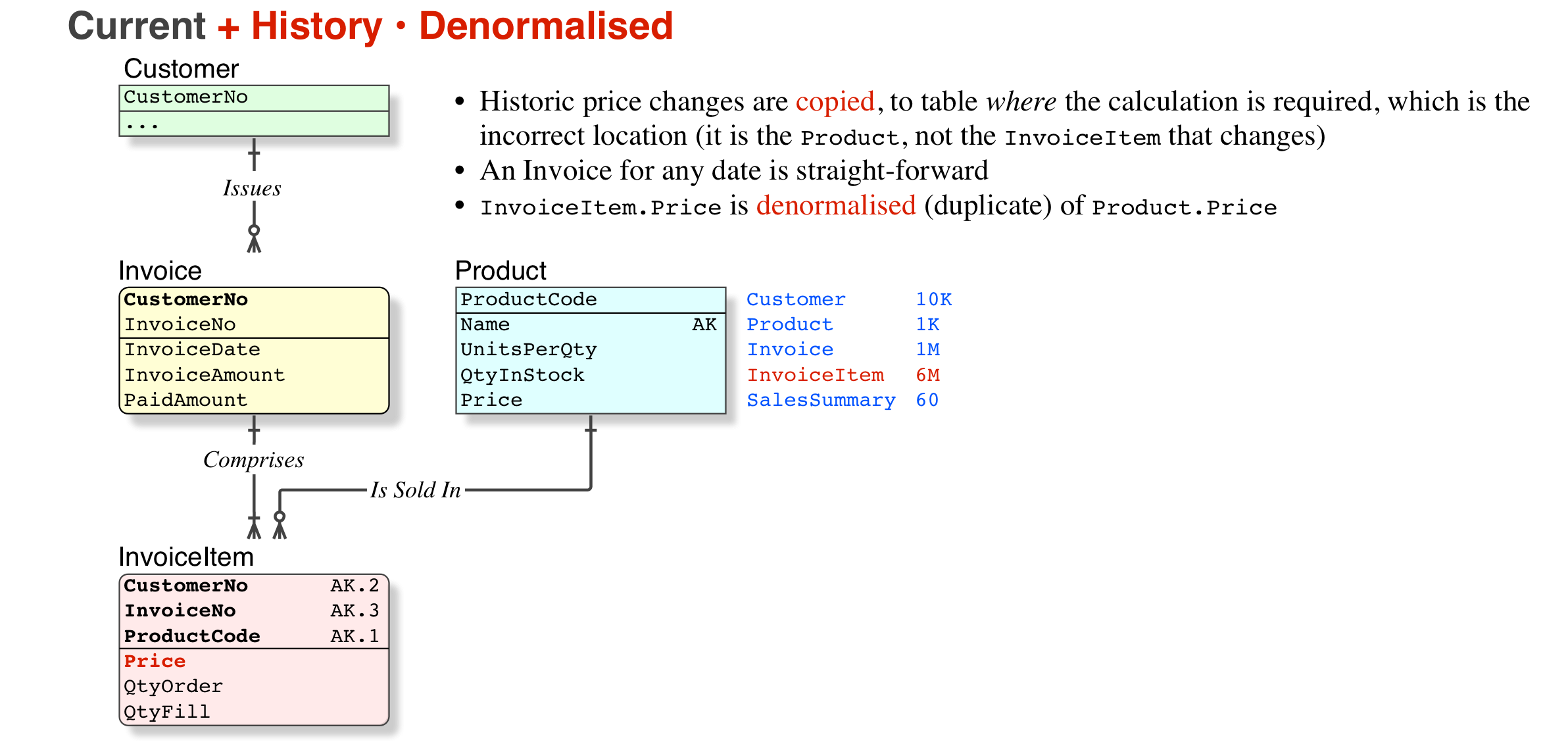

ProductPrice(actually, I would call itProductDate) is a good idea, but may not be necessary. But you are right, you need to evaluate the currency of data, in the full context of the whole database.- I cannot see how copying the product price to the invoice table would help (are not there many line items ?)

- in modern databases, where the copy of the invoice is required to be regurgitated, the closed Invoice is additionally stored in a different form, eg XML. One customer saves the PDFs as BLOBs. So there is no messing around with what the product price was five years ago. But the basic invoice data is online and current, even for closed invoices; you just cannot recalculate ancient invoice using current prices.

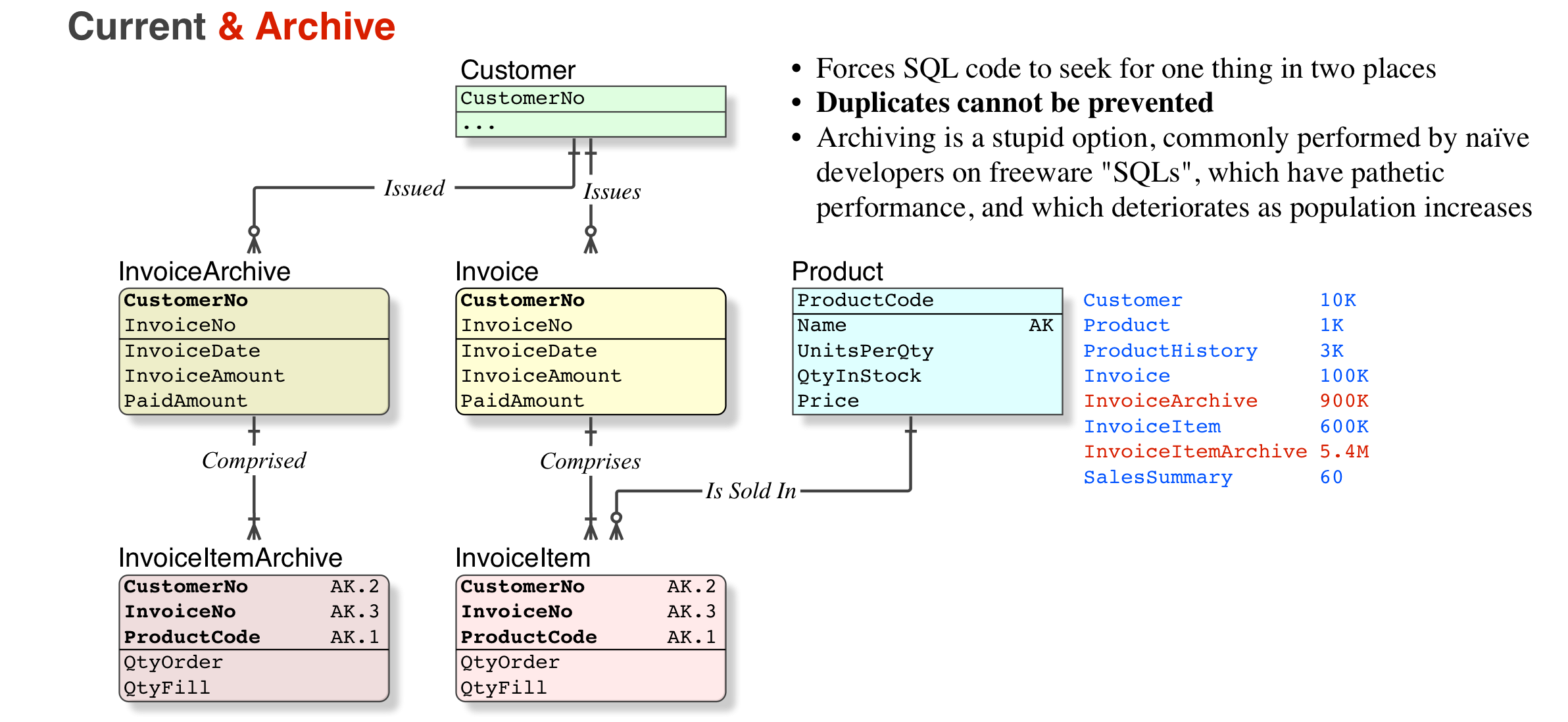

- some people use an archive_invoice table, but that has problems because now every code segment or user report tool has to look in two places (note that these days some users understand databases better than most developers)

- Anyway, that is all discussion, for your understanding. Now for the technical intent.

- The database serves current and archival purposes from the one set of tables (no "archive" tables

- Once an Invoice is created, it is a legal document, and cannot be changed or deleted (it can be reversed or partially credited by a new Invoice, with negative values). They are marked

IsIssued/IsPaid/Etc Productscannot be deleted, they can be markedIsObsolete- There are separate tables for InvoiceHeader and InvoiceItem

InvoiceItemhas FKs to bothInvoiceHeaderandProduct- for many reasons (not only those you mention), the InvoiceItem row contains the

NumUnits; ProductPrice; TaxAmount; ExtendedPrice. Sure, this looks like a "denormalisation" but it is not, because prices, taxation rates, etc, are subject to change. But more important, the legal requirement is that we can reproduce the old invoice on demand. - (where it can be reproduced from paper files, this is not required)

- the

InvoiceTotalAmountis a derived column, justSUM()of the InvoiceItems

That is rubbish. Accounting systems, and accountants do not "work" like that.

If it is a true accounting system, then it will have JournalEntries, or "double entry"; that is what a qualified account is required to use (by law).

Double Entry Accounting does not mean duplicate entries; it means every financial transaction (one amount) shall have a source account and target account that it is applied to; so there is no "denormalisation" or duplication. In a banking database, because the financial transactions are against single accounts, that is commonly rendered as two separate financial transactions (rows) within one Db Transaction. Ordinary commercial database constraints are used to ensure that there are two "sides" to every financial transaction.

Ensuring that Invoices are not deleteable is a separate issue, to do with security, etc. if anyone is paranoid about things being deleted from their database, and their database was not secured by a qualified person, then they have more and different problems that have nothing to do with this question. Obtain a security audit, and do whatever they tell you.

Wikipedia is not a reliable source of information about any technical subject, let alone Normalisation.

A Normalised database is always much faster than Un-Normalised database

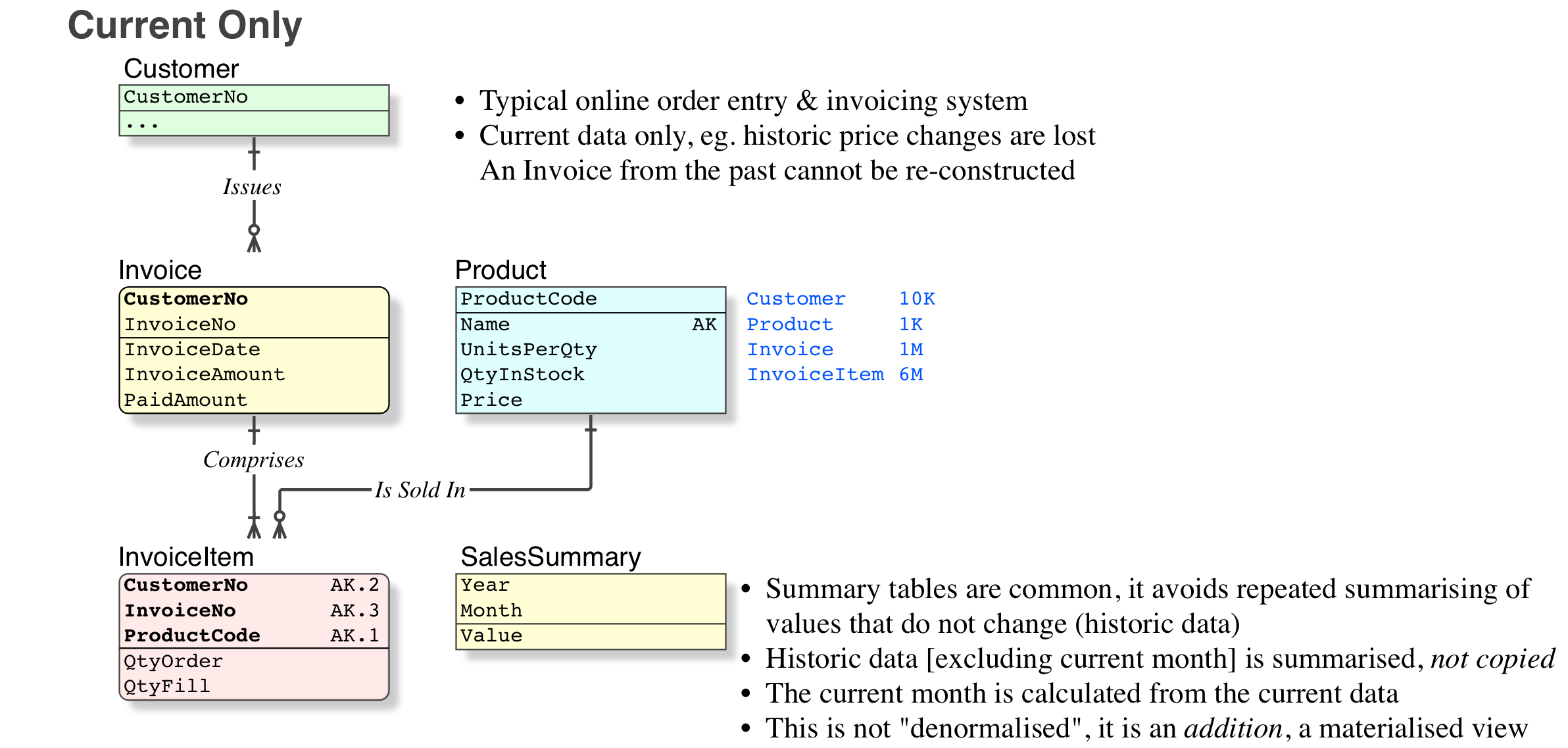

So it is very important to understand what Normalisation and Denormalisaion is, and what it isn't. The process is greatly hindered when people have fluid and amateur "definitions", it just leads to confusion and time-wasting "discussions". When you have fixed definitions, you can avoid all that, and just get on with the job.Summary tables are quite normal, to save the time and processing power, of recalculating info that does not change, eg: YTD totals for every year but this year; MTD totals for every month in this year but not this month. "Always recalculating" data is a bit silly when (a) the info is very large and (b) does not change. Calculate for the current month only

- In banking systems (millions of Trades per day), at EndOfDay, we calculate and store Daily Total as well. These are overwritten for the last five days, because Auditors are making changes, and JournalEntries against financial transactions for the last 5 days are allowed.

- non-banking systems generally do not need daily totals

Summary tables are not a "denormalisation" (except in the eyes of those who have just learned about "normalisation" from their magical, ever-changing fluid "source"; or as non-practitioners, who apply simple black-or-white rules to everything). Again, the definition is not being argued here; it simply does not apply to Summary tables.

Summary tables do not affect data integrity (assuming of course that the data that they were sourced from was integral).

Summary tables are an addition to the current data, which are not required to have the same constraints as the current data. There are essentially reporting tables or data warehouse tables, as opposed to current data tables.

There are no Update Anomalies (which is a strict definition) related to Summary tables. You cannot change or delete an invoice from last year. Update Anomalies apply to true Denormalised or Un-Normalised current data.

Response to Comments

so its ok to do denormalisation for the sakes of archiving right?

My explanation above appears not to be clear enough. Let's look at an example, and compare the options.

So, no. Archiving is a hideous option (I have corrected thousands of archived tables back to their home; corrected the indices, and restored normal performance, as well as returning the SELECT to query just one table instead of two). But if you do archive, it is not denormalised, it is worse, a copy.

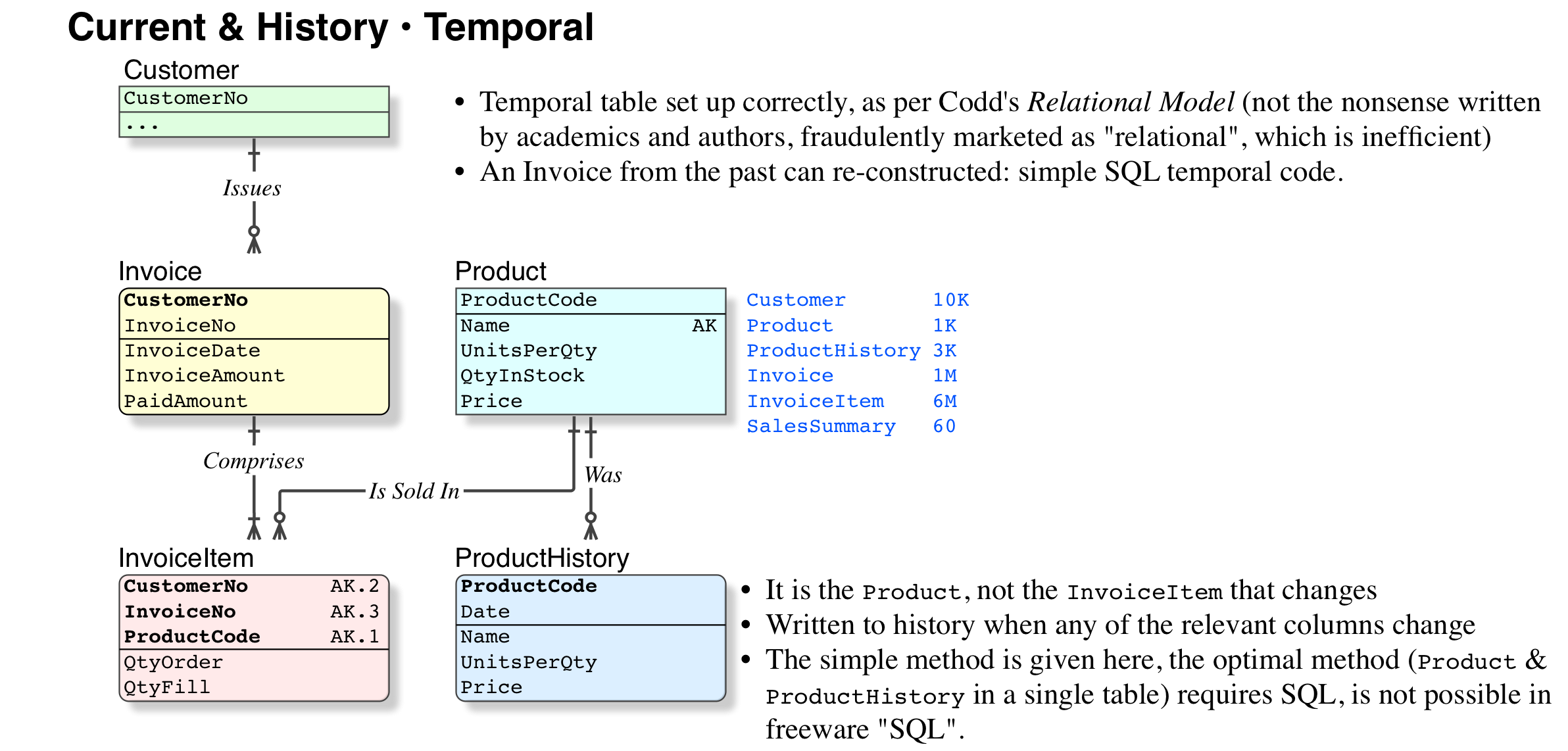

The best option by far. Again, this is the simple version, the full version requires an understanding of the temporal definition in Codd's Relational Model (not the ever-changing nonsense marketed by the detractors), and an SQL-compliant platform.

I disagree strongly with the statement that "A Normalised database is always much faster than Unnormalised database". That is patently false even if I don't harp on the use of "always". There are many scenarios in which selective, coherent denormalization of a database can result in extreme performance improvement.

Example [of justified De-Normalisation]: a complex join for a date-limited data set, like a month-end summary for a complex business. If the data is collected over the course of a month; then forever stable; and queried often — it can make sense to pre-compute via a materialized view, trigger, or more sophisticated method.

Evidently we need clear definitions.

Normalisation

- Purpose

to eliminate (not merely reduce) duplicate data

Note that there are no complex joins, just ordinary simple joins. - Method

Formal Relational data modelling

(not stepping through the NFs by number).

- Purpose

Un-Normalised

Failure to Normalise properly, leaving Update Anomalies in the database, and makes a mess of the TransactionsDe-Normalised

After formal Normalisation, one or more columns additionally placed in chosen tables, for performance reasons.- Eg. the pretend "SQL" does not perform the normal query with acceptable speed.

- Note that, over a period of 40 years of replacing poor databases, all databases that were declared to be De-normalised have in fact been Un-Normalised.

Summary Table/Materialised View

As detailed above in the Answer (please read again), and as illustrated in the graphic Current Only, it is an additional table to serve the purpose of providing summary values for history, that does not change. This is common.- There is no column that is duplicated, it cannot be categorised as "De-Normalisation".

You were not in disagreement with my statement [A Normalised database is always much faster than Unnormalised database], which relates to Normalised vs Un-Normalised, you were categorising the summary table incorrectly, as "de-Normalised".

If you can know in advance what complex, time-consuming queries a database will receive, you can precompute the results of those queries -- for example, replacing a 14-table join with a table that already contains the needed data.

That is different again. The two common reasons for that are:

- the database isn't Normalised in the first place, so queries on large tables are slow

- the proper cure is to Normalise properly

- your pretend "SQL" is so slow

- the proper cure for that is to obtain a genuine SQL Platform.

Such that you need to build an additional file (or Materialised View) to service the slow queries. Yes, that is de-Normalising, and on a grand scale, but it is worse, it is a 100% copy of those fields.

In what way does denormalization improve database performance?

Denormalization is a time-space trade-off. Normalized data takes less space, but may require join to construct the desired result set, hence more time. If it's denormalized, data are replicated in several places. It then takes more space, but the desired view of the data is readily available.

There are other time-space optimizations, such as

- denormalized view

- precomputed columns

As with any of such approach, this improves reading data (because they are readily available), but updating data becomes more costly (because you need to update the replicated or precomputed data).

(De)Normalization of two relations

Assuming AB is a key in r1 and A is a key in r2 then it seems that the schema is in 6NF. The Relational Database Dictionary (Date) defines denormalization as:

Replacing a set of relvars R1, R2, . .

., Rn by their join R, such that for

all i the projection of R on the

attributes of Ri is guaranteed to be

equal to Ri (i = 1, 2, . . ., n).

Fundamentally, normalization/denormalization is about composition and nonloss decomposition using projection and join operators. In this example you have redundancy caused by a different operator: summation. I expect it would be quite possible in principle to form a theory of "normalization" for operators other than projection and join, perhaps even for non-relational functions like summation. That's not how normalization is conventionally defined however and in the absence of any sound basis for doing otherwise I think we ought to apply the technical meaning denormalization as defined by Date in the above quotation.

MySQL Normalize or Denormalize

Generally speaking you can divide a database into two broad categories:

OLTP Systems

Online Transaction Processing Systems are normally write intensive i.e. a lot of updates compared to the reads of the data. This system is typically a day to day application used by a business users of all scopes i.e. data capture, admin etc. These databases are usually normalized to the extreme and then certain demoralized for performance gains in certain areas.

OLAP/DSS system:

On Line Analytic Processing are database that are normally large data warehouse like systems. Used to support Analytic activities such as data mining, data cubes etc. Typically the information is used by a more limited set of users than OLTP. These database are normally very denormalised.

Go read here for a short description of these and the main differences.

OLTP VS OLAP

Regarding your INSERT/UPDATE/DELETE point go read about the MySQL ON DUPLICATE KEY UPDATE statement which will resolve that issue for you easily. It is called a MERGE operation in most database systems.

Now I dont understand why you are worried about JOINS. I have had tables with millions (500 000 000+) rows that I joined with other tables also large in size and the queries ran very fast. So designing a database to eliminate joins is NOT a good idea.

My suggestion is:

If designing a OLTP system normalise as much as possible then denormalise to increase performance where needed. For A OLAP system look at star schemas etc and dont even bother with normalizing it first. Oh by the way most of the OLAP systems normally use a OLTP system as a data source.

How does data denormalization work with the Microservice Pattern?

This is subjective but the following solution worked for me, my team, and our DB team.

- At the application layer, Microservices are decomposed to semantic function.

- e.g. a

Contactservice might CRUD contacts (metadata about contacts: names, phone numbers, contact info, etc.) - e.g. a

Userservice might CRUD users with login credentials, authorization roles, etc. - e.g. a

Paymentservice might CRUD payments and work under the hood with a 3rd party PCI compliant service like Stripe, etc.

- e.g. a

- At the DB layer, the tables can be organized however the devs/DBs/devops people want the tables organized

The problem is with cascading and service boundaries: Payments might need a User to know who is making a payment. Instead of modeling your services like this:

interface PaymentService {

PaymentInfo makePayment(User user, Payment payment);

}

Model it like so:

interface PaymentService {

PaymentInfo makePayment(Long userId, Payment payment);

}

This way, entities that belong to other microservices only are referenced inside a particular service by ID, not by object reference. This allows DB tables to have foreign keys all over the place, but at the app layer "foreign" entities (that is, entities living in other services) are available via ID. This stops object cascading from growing out of control and cleanly delineates service boundaries.

The problem it does incur is that it requires more network calls. For instance, if I gave each Payment entity a User reference, I could get the user for a particular payment with a single call:

User user = paymentService.getUserForPayment(payment);

But using what I'm suggesting here, you'll need two calls:

Long userId = paymentService.getPayment(payment).getUserId();

User user = userService.getUserById(userId);

This may be a deal breaker. But if you're smart and implement caching, and implement well engineered microservices that respond in 50 - 100 ms each call, I have no doubt that these extra network calls can be crafted to not incur latency to the application.

Denormalization: How much is too much?

Designing a schema for cassandra is very different than designing a schema for a sql database. With a sql database your data fits on one machine, the database will maintain indexes for you, you can perform joins, and you can do complex queries with sql. These all make normalizing data practical.

In cassandra you data does not fit on one machine so you can't perform joins, the only query you can do efficiently is get a range of columns on a key, and cassandra will only maintain limited indexes for you. This makes normalizing your data impractical.

In cassandra, you typically design your schema to serve the queries that you are going to make, and you denormalize to do that. My favorite example of this is what twitter does for their stats for rainbird as explained in this post,

For example, say someone clicks on a t.co link to blog.example.com/foo at 11:41am on 1st Feb.

Rainbird would increment counters for:

t.co click: com (all time)

t.co click: com.example (all time)

t.co click: com.example.blog (all time)

t.co click: com.example.blog /foo (all time)

t.co click: com (1st Feb 2011)

t.co click: com.example (1st Feb 2011)

t.co click: com.example.blog (1st Feb 2011)

t.co click: com.example.blog /foo (1st Feb 2011)

t.co click: com (11am-12 on 1st Feb)

t.co click: com.example (11am-12 on 1st Feb)

t.co click: com.example.blog (11am-12 on 1st Feb)

t.co click: com.example.blog /foo (11am-12 on 1st Feb)

t.co click: com (11:41-42 on 1st Feb)

t.co click: com.example (11:41-42 on 1st Feb)

t.co click: com.example.blog (11:41-42 on 1st Feb)

t.co click: com.example.blog /foo (11:41-42 on 1st Feb)

This 1 click is copied 16 times to satisfy the 16 queries that can be done.

This is a good presentation on how to do indexing in cassandra.

Related Topics

How to Sort a 'Version Number' Column Generically Using a SQL Server Query

Postgresql Wildcard Like for Any of a List of Words

Is There Auto Increment in SQLite

Extracting Hours from a Datetime (SQL Server 2005)

Why Can't You Mix Aggregate Values and Non-Aggregate Values in a Single Select

SQL Server 2005 Row_Number() Without Order By

Alternate of Lead Lag Function in SQL Server 2008

Does Oracle Store Trailing Zeroes for Number Data Type

Select a Random Sample of Results from a Query Result

How to Compare the Current Row with Next and Previous Row in Postgresql

Oracle - How to Create a Materialized View with Fast Refresh and Joins

Find Records Where Join Doesn't Exist

Why Are Logical Reads for Windowed Aggregate Functions So High

SQL Group By, Top N Items for Each Group

Generating Dates Between Two Dates