How do you optimize tables for specific queries?

This is a nice question, if rather broad (and none the worse for that).

If I understand you, then you're asking how to attack the problem of optimisation starting from scratch.

The first question to ask is: "is there a performance problem?"

If there is no problem, then you're done. This is often the case. Nice.

On the other hand...

Determine Frequent Queries

Logging will get you your frequent queries.

If you're using some kind of data access layer, then it might be simple to add code to log all queries.

It is also a good idea to log when the query was executed and how long each query takes. This can give you an idea of where the problems are.
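Here is a minimal sketch of that kind of logging. It assumes a Python data access layer over SQLite, and the class and method names are hypothetical rather than taken from any particular library. A thin wrapper records each query's text, start time and duration, then reports the slowest and the most frequent queries:

```python
import sqlite3
import time
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class QueryLogEntry:
    sql: str
    started_at: datetime  # when the query was executed
    duration_s: float     # how long it took, in seconds


@dataclass
class LoggingConnection:
    """Hypothetical thin data access layer that logs every query it runs."""
    con: sqlite3.Connection
    log: list = field(default_factory=list)

    def query(self, sql: str, params: tuple = ()) -> list:
        started_at = datetime.now(timezone.utc)
        t0 = time.perf_counter()
        try:
            return self.con.execute(sql, params).fetchall()
        finally:
            # Record the query even if it raised, so failing queries show up too.
            self.log.append(QueryLogEntry(sql, started_at, time.perf_counter() - t0))

    def slowest(self, n: int = 5) -> list:
        """The n log entries with the longest duration."""
        return sorted(self.log, key=lambda e: e.duration_s, reverse=True)[:n]

    def most_frequent(self, n: int = 5) -> list:
        """The n most frequently executed SQL strings, with their counts."""
        return Counter(e.sql for e in self.log).most_common(n)
```

Grouping by SQL text only works if queries use placeholders instead of literal values. Otherwise each distinct literal counts as a different query.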

Also, ask the users which bits annoy them. If a slow response doesn't annoy the user, then it doesn't matter.

Select the optimization factors?

(I may be misunderstanding this part of the question)

You're looking for any patterns in the queries / response times.

These will typically be queries over large tables, or queries that join many tables. But if you log response times, you can be guided by those.

Types of changes one can make?

You're specifically asking about optimising tables.

Here are some of the things you can look for:

- Denormalisation. This brings several tables together into one wider table, so instead of your query joining several tables together, you can just read one table. This is a very common and powerful technique. NB. I advise keeping the original normalised tables and building the denormalised table in addition; this way, you're not throwing anything away. How you keep it up to date is another question. You might use triggers on the underlying tables, or run a refresh process periodically.

- Normalisation. This is not often considered to be an optimisation process, but it is one in 2 cases:

  - Updates. Normalisation makes updates much faster because each update is as small as it can be (you are updating the smallest possible table, in terms of columns and rows). This is almost the very definition of normalisation.

  - Querying a denormalised table to get information which exists on a much smaller (fewer rows) table may be causing a problem. In this case, store the normalised table as well as the denormalised one (see above).

- Horizontal partitioning. This means making tables smaller by putting some rows in another, identical table. A common use case is to have all of this month's rows in table ThisMonthSales, and all older rows in table OldSales, where both tables have an identical schema. If most queries are for recent data, this strategy can mean that 99% of all queries are only looking at 1% of the data, which is a huge performance win.

- Vertical partitioning. This means chopping fields off a table and putting them in a new table, which is joined back to the main table by the primary key. This can be useful for very wide tables (e.g. with dozens of fields), and may help if tables are sparsely populated.

- Indices. I'm not sure if your question covers these, but there are plenty of other answers on SO concerning the use of indices. A good way to find a case for an index is: find a slow query, look at the query plan and find a table scan, then index fields on that table so as to remove the table scan. I can write more on this if required; leave a comment.
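The find-a-table-scan approach in the last point can be sketched with SQLite's EXPLAIN QUERY PLAN standing in for your database's plan viewer. MySQL's EXPLAIN output looks different, but the idea is the same. The orders table, the query and the orders_customer index are all hypothetical:

```python
import sqlite3


def plan(con: sqlite3.Connection, sql: str, params: tuple = ()) -> str:
    """Return SQLite's query plan as one string (the detail text is the last column)."""
    rows = con.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(str(row[-1]) for row in rows)


con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")

slow_query = "SELECT order_id, total FROM orders WHERE customer_id = ?"

# 1. Look at the plan of the slow query: with no usable index it is a full table scan.
before = plan(con, slow_query, (42,))

# 2. Index the column that the WHERE clause filters on.
con.execute("CREATE INDEX orders_customer ON orders (customer_id)")

# 3. Check the plan again: the scan should now be an index search.
after = plan(con, slow_query, (42,))
print(before)
print(after)
```

The exact plan wording varies between SQLite versions, but before the index it reports a SCAN of orders and afterwards a SEARCH using orders_customer.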

You might also like my post on this.
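To illustrate the denormalisation point above, here is a minimal sketch using SQLite from Python. It keeps the normalised tables and maintains a wider copy, either with triggers or with a periodic refresh. The customer, sale and sale_report tables are hypothetical. Only inserts into sale and customer renames are propagated, so a real setup would also need to handle deletes and other updates:

```python
import sqlite3

SCHEMA = """
-- Normalised tables: kept as the source of truth.
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE sale (
    sale_id     INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer,
    amount      REAL NOT NULL
);
-- Denormalised table built in addition: reports read this one table, no join.
CREATE TABLE sale_report (
    sale_id       INTEGER PRIMARY KEY,
    customer_name TEXT NOT NULL,
    amount        REAL NOT NULL
);
-- Option 1: triggers on the underlying tables keep the wide table current.
CREATE TRIGGER sale_ai AFTER INSERT ON sale BEGIN
    INSERT INTO sale_report (sale_id, customer_name, amount)
    SELECT NEW.sale_id, c.name, NEW.amount
    FROM customer c WHERE c.customer_id = NEW.customer_id;
END;
CREATE TRIGGER customer_name_au AFTER UPDATE OF name ON customer BEGIN
    UPDATE sale_report SET customer_name = NEW.name
    WHERE sale_id IN (SELECT sale_id FROM sale WHERE customer_id = NEW.customer_id);
END;
"""


def build() -> sqlite3.Connection:
    con = sqlite3.connect(":memory:")
    con.executescript(SCHEMA)
    return con


def refresh(con: sqlite3.Connection) -> None:
    """Option 2: rebuild the denormalised table from scratch, e.g. from a scheduled job."""
    with con:  # both statements are committed together
        con.execute("DELETE FROM sale_report")
        con.execute("""
            INSERT INTO sale_report (sale_id, customer_name, amount)
            SELECT s.sale_id, c.name, s.amount
            FROM sale s JOIN customer c ON c.customer_id = s.customer_id
        """)
```

Triggers keep the copy current at the cost of slower writes. A periodic refresh keeps writes cheap but lets the copy go stale between runs.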

Simple SQL query but huge table - how to optimize?

For this query, you want the following index:

create index myindex on mytable(year, goal_id, target)

This gives you a covering index: all columns that come into play in the query are part of the index, so this gives the database a decent chance to execute the query by looking at the index only (without actually looking at the data).

The ordering of columns in the index is important: the first two columns correspond to the where predicates, and the last column is the column that comes into play in the select clause.

Depending on the cardinality of your data, you might also want to try to invert the first two columns:

create index myindex on mytable(goal_id, year, target)

The basic idea is that you want to put the more restrictive criteria first.
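The original query is not shown, so the sketch below assumes a query of the shape described: equality filters on year and goal_id, with target in the select list. It uses SQLite from Python as a stand-in for MySQL and checks with EXPLAIN QUERY PLAN that the index is covering, meaning the table itself is never read. In MySQL the equivalent signal is "Using index" in the Extra column of EXPLAIN. The id and notes columns are hypothetical:

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute(
    "CREATE TABLE mytable (id INTEGER PRIMARY KEY, year INTEGER, "
    "goal_id INTEGER, target REAL, notes TEXT)"
)
# WHERE columns first, then the selected column, so the index alone can answer the query.
con.execute("CREATE INDEX myindex ON mytable (year, goal_id, target)")


def plan(sql: str, params: tuple) -> str:
    """SQLite's query plan detail text, joined into one string."""
    return " | ".join(str(r[-1]) for r in con.execute("EXPLAIN QUERY PLAN " + sql, params))


# Hypothetical query of the shape described above: answered from the index alone.
covered = plan("SELECT target FROM mytable WHERE year = ? AND goal_id = ?", (2020, 7))

# Selecting a column that is not in the index forces a lookup into the table as well.
not_covered = plan("SELECT target, notes FROM mytable WHERE year = ? AND goal_id = ?", (2020, 7))
print(covered)
print(not_covered)
```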

MySQL OPTIMIZE all tables?

You can use mysqlcheck to do this at the command line.

One database:

mysqlcheck -o <db_schema_name>

All databases:

mysqlcheck -o --all-databases

How to optimize MySQL queries with many combinations of where conditions?

Well, the file (it is not a table) is not at all Normalised. Therefore no amount of indices on combinations of fields will help the queries.

Second, MySQL is (a) not compliant with the SQL requirement, and (b) it does not have a Server Architecture or the features of one.

  - Such as Statistics, which are used by a genuine Query Optimiser, and which commercial SQL platforms have. The "single index" issue you raise in the comments does not apply.

Therefore, while we can fix up the table, etc., you may never obtain the performance that you seek from the freeware.

E.g. in the commercial world, 6M rows is nothing; we worry when we get to a billion rows.

E.g. Statistics is automatic; we have to tweak it only when necessary: an un-normalised table or billions of rows.

Or ... should I use other middlewares, such as Elasticsearch?

It depends on the use of genuine SQL vs MySQL, and the middleware.

If you fix up the file and make a set of Relational tables, the queries are then quite simple, and fast. It does not justify a middleware search engine (that builds a data cube on the client system).

If they are not fast on MySQL, then the first recommendation would be to get a commercial SQL platform instead of the freeware.

The last option, the very last, is to stick to the freeware and add a big fat middleware search engine to compensate.

Or is it good to create 10 tables which have data of category_1~10, and execute many INNER JOIN in the queries?

Yes. JOINs are quite ordinary in SQL. Contrary to popular mythology, a normalised database, which means many more tables than an un-normalised one, causes fewer JOINs, not more JOINs.

So, yes, Normalise that beast. Ten tables is the starting perception, still not at all Normalised. One table for each of the following would be a step in the direction of Normalised:

- Item

  Item_id will be unique.

- Category

  This is not category_1, etc., but each of the values that are in category_1, etc. You must not have multiple values in a single column; that breaks 1NF. Such values will be (a) Atomic, and (b) unique. The Relational Model demands that the rows are unique.

- The meaning of category_1, etc. in Item is not given. (If you provide some example data, I can improve the accuracy of the data model.) Obviously it is not [2].

  If it is a Priority (1..10), or something similar, that the users have chosen or voted on, then this will be a table that supplies the many-to-many relationship between Item and Category, with a Priority for each row.

  Let's call it Poll. The relevant Predicates would be something like:

  - Each Poll is 1 Item
  - Each Poll is 1 Priority
  - Each Poll is 1 Category

- Likewise, sort_score is not explained. If it is even remotely what it appears to be, you will not need it, because it is a Derived Value that you should compute on the fly: once the tables are Normalised, the SQL required to compute it is straightforward. It is not one that you compute-and-store every 5 minutes or every 10 seconds.

The Relational Model

The above maintains the scope of just answering your question, without pointing out the difficulties in your file. Noting the Relational Database tag, this section deals with the Relational errors.

The Record ID field (item_id or category_id in yours) is prohibited in the Relational Model. It is a physical pointer to a record, which is explicitly the very thing that the RM overcomes, and which must be overcome if one wishes to obtain the benefits of the RM, such as ease of queries and simple, straightforward SQL code.

Conversely, the Record ID is always one additional column and one additional index, and the SQL code required for navigation becomes complex (and buggy) very quickly. You will have enough difficulty with the code as it is; I doubt you would want the added complexity.

Therefore, get rid of the Record ID fields. The Relational Model requires that the Keys are "made up from the data". That means something from the logical row, that the users use. Usually they know precisely what identifies their data, such as a short name.

- It is not manufactured by the system, such as a RecordID field which is a GUID or AUTOINCREMENT, which the user does not see. Such fields are physical pointers to records, not Keys to logical rows. Such fields are pre-Relational, pre-DBMS, 1960s Record Filing Systems, the very thing that the RM superseded. But they are heavily promoted and marketed as "relational".

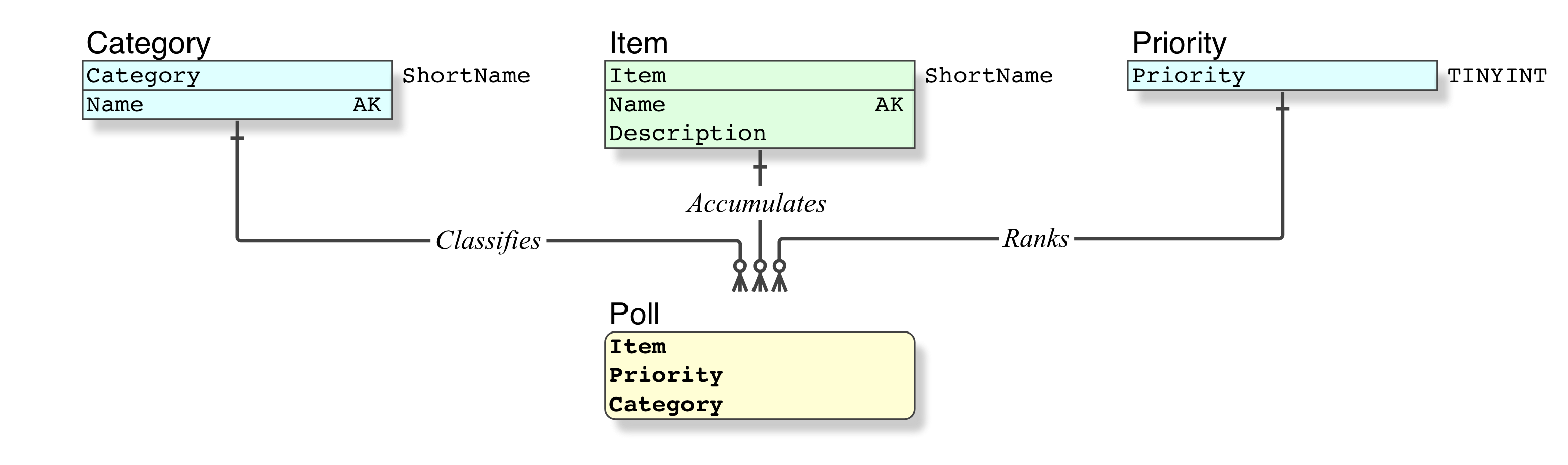

Relational Data Model • Initial

Looks like this.

All my data models are rendered in IDEF1X, the Standard for modelling Relational databases since 1993.

My IDEF1X Introduction is essential reading for beginners.

Relational Data Model • Improved

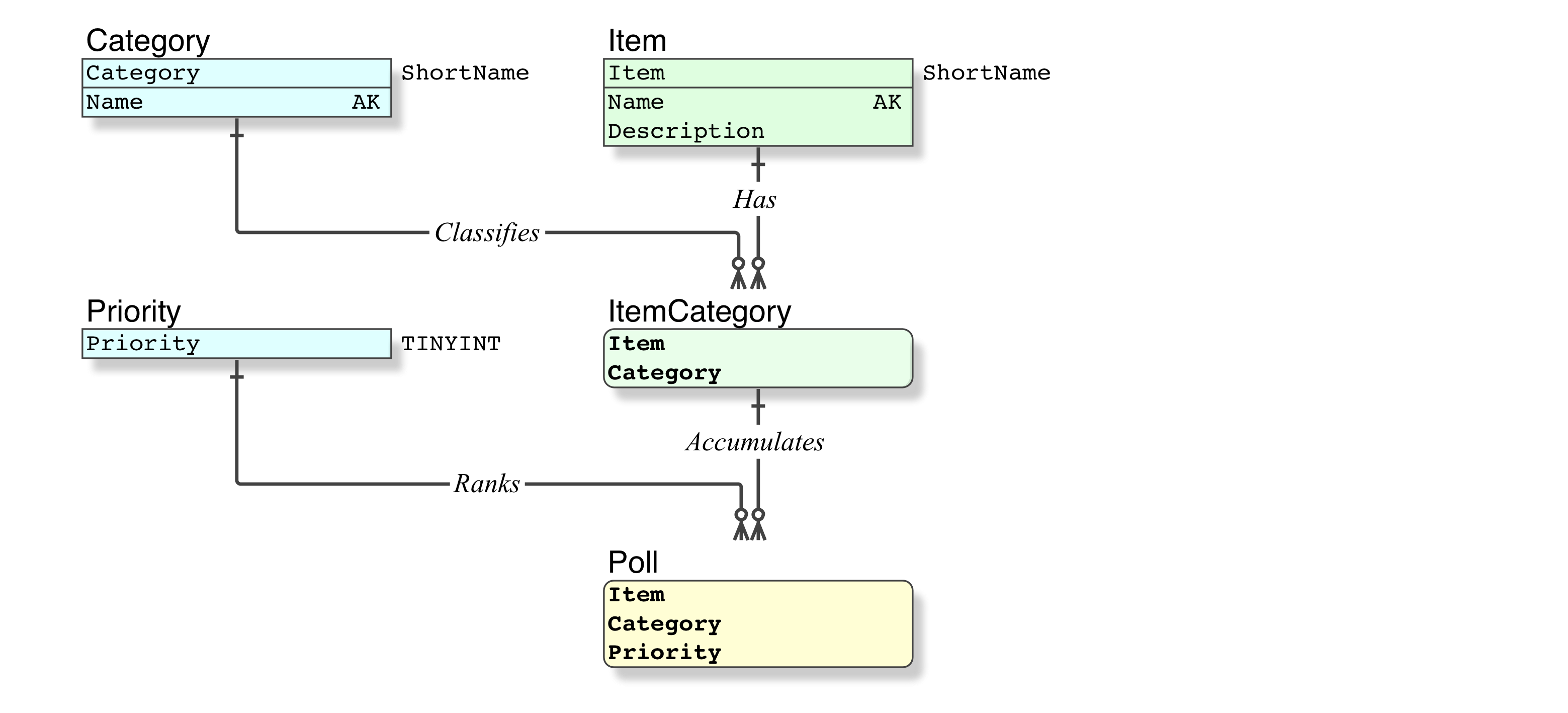

Ternary relations (aka three-way JOINs) are known to be a problem, indicating that further Normalisation is required. Codd teaches that every ternary relation can be reduced to two binary relations.

In your case, perhaps an Item has certain, not all, Categories. The above implements Polls of Items allowing all Categories for each Item, which is a typical error in a ternary relation, and is why it requires further Normalisation. It is also the classic error in every RFS file.

The corrected model would therefore be to establish the Categories for each Item first, as ItemCategory (your "item can have several numbers of category_x"), and then to allow Polls on that constrained ItemCategory. (Note that this level of constraining data is not possible in 1960s Record Filing Systems, in which the "key" is a fabricated id field.) The Predicates become:

Each ItemCategory is 1 Item

Each ItemCategory is 1 Category

Each Poll is 1 Priority

Each Poll is 1 ItemCategory

Your indices are now simple and straight-forward, no additional indices are required.

Likewise your query code will now be simple and straight-forward, and far less prone to bugs.

Please make sure that you learn about Subqueries. The Poll table supports any type of pivoting that may be required.
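Here is a minimal sketch of the improved model, assuming SQLite from Python and Keys made up from the data (names) rather than id fields. ItemCategory constrains which Categories an Item may have, and Poll can only reference an existing ItemCategory. The voter column, the category names and the definition of the score (an average Priority) are hypothetical, since the question does not explain sort_score. The point is that the score is derived on the fly rather than stored, and that subqueries over Poll give a pivot:

```python
import sqlite3

SCHEMA = """
CREATE TABLE Item (item_name TEXT PRIMARY KEY);          -- Key from the data
CREATE TABLE Category (category_name TEXT PRIMARY KEY);
-- Each ItemCategory is 1 Item and 1 Category.
CREATE TABLE ItemCategory (
    item_name     TEXT NOT NULL REFERENCES Item,
    category_name TEXT NOT NULL REFERENCES Category,
    PRIMARY KEY (item_name, category_name)
);
-- Each Poll is 1 ItemCategory and 1 Priority.
CREATE TABLE Poll (
    item_name     TEXT NOT NULL,
    category_name TEXT NOT NULL,
    voter         TEXT NOT NULL,  -- hypothetical: who cast this Poll
    priority      INTEGER NOT NULL CHECK (priority BETWEEN 1 AND 10),
    PRIMARY KEY (item_name, category_name, voter),
    FOREIGN KEY (item_name, category_name) REFERENCES ItemCategory
);
"""


def build() -> sqlite3.Connection:
    con = sqlite3.connect(":memory:")
    con.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces FKs when this is on
    con.executescript(SCHEMA)
    return con


def sort_scores(con: sqlite3.Connection) -> list:
    """Derived value computed on the fly (hypothetical definition: mean Priority per Item)."""
    return con.execute("""
        SELECT item_name, AVG(priority) AS score
        FROM Poll
        GROUP BY item_name
        ORDER BY score DESC, item_name
    """).fetchall()


def pivot(con: sqlite3.Connection) -> list:
    """One column per Category via scalar subqueries (Category names assumed known)."""
    return con.execute("""
        SELECT i.item_name,
               (SELECT AVG(p.priority) FROM Poll p
                 WHERE p.item_name = i.item_name AND p.category_name = 'colour') AS colour,
               (SELECT AVG(p.priority) FROM Poll p
                 WHERE p.item_name = i.item_name AND p.category_name = 'size') AS size
        FROM Item i
        ORDER BY i.item_name
    """).fetchall()
```

With foreign keys enabled, a Poll for an Item and Category pair that is not in ItemCategory is rejected with an IntegrityError. That is the constraint the ternary version could not express.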

How to optimize an OPTIMIZE MySQL query that takes a lot of time

Instead of worrying about speeding up OPTIMIZE TABLE, let's get rid of the need for it.

PARTITION BY RANGE(TO_DAYS(...)) ...

Then DROP PARTITION nightly; this is much faster than using DELETE, and avoids the need for OPTIMIZE.

Be sure to have innodb_file_per_table=ON.

Also nightly, use REORGANIZE PARTITION to turn the future partition into tomorrow's partition and a new, empty, partition.

Details here: http://mysql.rjweb.org/doc.php/partitionmaint

Note that each PARTITION is effectively a separate table so DROP PARTITION is effectively a drop table.

There should be 10 partitions:

- 1 starter partition, to avoid the overhead of a glitch when partitioning by DATETIME.

- 7 daily partitions.

- 1 extra day, so that there will be a full 7 days' worth.

- 1 empty future partition, just in case your nightly script fails to run.
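The nightly rotation can be scripted. This sketch in Python only generates the MySQL DDL strings and does not connect to a server. The table name logs, the DATETIME column ts and the pYYYYMMDD partition names are hypothetical. Using a start partition with VALUES LESS THAN (0) as the starter partition is an assumption here. Keep in mind that in MySQL every unique key of a partitioned table, including the primary key, must include the partitioning column.

```python
from datetime import date, timedelta

TABLE = "logs"   # hypothetical table name
COLUMN = "ts"    # hypothetical DATETIME column being partitioned on


def pname(day: date) -> str:
    """Name of the partition holding one day's rows, e.g. p20240101."""
    return "p" + day.strftime("%Y%m%d")


def day_partition(day: date) -> str:
    # Rows earlier than the next midnight (and not in an earlier partition) land here.
    return f"PARTITION {pname(day)} VALUES LESS THAN (TO_DAYS('{day + timedelta(days=1)}'))"


def partition_clause(first_day: date, days: int = 8) -> str:
    """start + `days` daily partitions (7 + 1 extra) + future = 10 partitions by default."""
    parts = ["PARTITION start VALUES LESS THAN (0)"]
    parts += [day_partition(first_day + timedelta(days=i)) for i in range(days)]
    parts.append("PARTITION future VALUES LESS THAN MAXVALUE")
    return f"PARTITION BY RANGE (TO_DAYS({COLUMN})) (\n  " + ",\n  ".join(parts) + "\n)"


def nightly_ddl(new_day: date, oldest_day: date) -> list:
    """Split `future` into a partition for new_day plus a new empty future, then drop the oldest day."""
    return [
        f"ALTER TABLE {TABLE} REORGANIZE PARTITION future INTO ("
        f"{day_partition(new_day)}, PARTITION future VALUES LESS THAN MAXVALUE)",
        f"ALTER TABLE {TABLE} DROP PARTITION {pname(oldest_day)}",
    ]


for stmt in nightly_ddl(date.today() + timedelta(days=1), date.today() - timedelta(days=7)):
    print(stmt + ";")
```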

Optimization techniques for select query on single table with ~2.25M rows?

Creating a compound index on (lat, long) should help a lot.

However, the right solution is to take a look at the MySQL spatial extensions. Spatial support was specifically created to deal with two-dimensional data and queries against such data. If you create appropriate spatial indexes, your typical query performance should easily exceed the performance of a compound index on (lat, long).
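Here is a minimal sketch of the compound-index approach, using SQLite from Python as a stand-in. The places table, its columns, the sample rows and the bounding box are hypothetical. A B-tree index on (lat, lng) can only seek on the range over lat. The lng range is then checked entry by entry within that slice of the index, which is one reason a true spatial index usually does better for two-dimensional searches.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE places (id INTEGER PRIMARY KEY, name TEXT, lat REAL, lng REAL)")
con.execute("CREATE INDEX places_lat_lng ON places (lat, lng)")
con.executemany(
    "INSERT INTO places (name, lat, lng) VALUES (?, ?, ?)",
    [("a", 51.50, -0.12), ("b", 51.52, -0.10), ("c", 48.85, 2.35)],
)

# Bounding-box search: the index seeks on lat, then lng is filtered inside that range.
BOX_SQL = "SELECT name FROM places WHERE lat BETWEEN ? AND ? AND lng BETWEEN ? AND ?"
box = (51.4, 51.6, -0.2, 0.0)  # (min lat, max lat, min lng, max lng)

names = sorted(n for (n,) in con.execute(BOX_SQL, box))
plan = " | ".join(str(r[-1]) for r in con.execute("EXPLAIN QUERY PLAN " + BOX_SQL, box))
print(names, plan)
```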

Optimize select query on huge tables?

You can safely remove nvl() from the condition if there is no possibility of encountering null in the test values, like ('1','1','1') or ('2000','2000','2000'):

    select
        INDEX_COLUMN_A, INDEX_COLUMN_B, INDEX_COLUMN_C,
        TABLE.*
    from
        TABLE
    where
        (INDEX_COLUMN_A, INDEX_COLUMN_B, INDEX_COLUMN_C)
        in (
            ('1','1','1'),
            ('2','2','2'),
            ...,
            ('1000','1000','1000')
        )
        or
        (INDEX_COLUMN_A, INDEX_COLUMN_B, INDEX_COLUMN_C)
        in (
            ('1001','1001','1001'),
            ('1002','1002','1002'),
            ...,
            ('2000','2000','2000')
        )
        or
        ...

Also, an expression like a in (x1,x2) or a in (x3,x4) means ((a=x1 or a=x2) or (a=x3 or a=x4)). The brackets in such a situation may be omitted without consequence: (a=x1 or a=x2 or a=x3 or a=x4), which may be shortened with an in expression to a in (x1, x2, x3, x4). Therefore the initial query (if there are no nulls in the values to test for) is the same as the following:

    select
        INDEX_COLUMN_A, INDEX_COLUMN_B, INDEX_COLUMN_C,
        TABLE.*
    from
        TABLE
    where
        (INDEX_COLUMN_A, INDEX_COLUMN_B, INDEX_COLUMN_C)
        in (
            ('1','1','1'),
            ('2','2','2'),
            ...,
            ('1000','1000','1000'),
            ...,
            ('1001','1001','1001'),
            ('1002','1002','1002'),
            ...,
            ('2000','2000','2000')
        )

P.S.

a in (x,y,z) is just a shortcut for a set of equality relations connected by or: ((a=x) or (a=y) or (a=z)), and null never equals null, so the expression null in (x,y,z) never returns true, regardless of the x, y and z values. So if you really need to handle null values, the expressions must be changed to something like nvl(a,'some_never_encountered_value') in (nvl('1','some_never_encountered_value'), nvl('2','some_never_encountered_value'), ...). But in this case you can't use a simple index on the table. It's possible to build a functional index to handle such expressions, but that is a very different story.

P.P.S.

If the columns contain numbers, they must be tested against numbers: instead of ('1','1','1') you should use (1,1,1).
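The OR-merging and NULL behaviour described in the P.S. are easy to check. This sketch uses SQLite from Python, which has no nvl(), so the standard COALESCE takes its place. The table t, its rows and the sentinel string are hypothetical, and single-column IN lists stand in for the three-column ones above:

```python
import sqlite3

SENTINEL = "~never~"  # hypothetical value assumed never to appear in column a

con = sqlite3.connect(":memory:")
con.executescript("""
    CREATE TABLE t (a TEXT, b TEXT);
    INSERT INTO t VALUES ('1', 'one'), ('2', 'two'), ('3', 'three'), (NULL, 'null row');
""")


def matching(where: str, params: tuple = ()) -> list:
    """Sorted values of b for the rows satisfying a (trusted, hard-coded) WHERE clause."""
    return sorted(b for (b,) in con.execute(f"SELECT b FROM t WHERE {where}", params))


# Two IN lists joined by OR select exactly the same rows as one merged list.
split = matching("a IN ('1', '2') OR a IN ('3')")
merged = matching("a IN ('1', '2', '3')")

# NULL never equals anything, so a NULL in the list does not match the NULL row...
plain = matching("a IN ('1', NULL)")
# ...and NULL IN (...) evaluates to NULL, not true.
null_in = con.execute("SELECT NULL IN ('1', '2')").fetchone()[0]

# Mapping NULL to a sentinel on both sides makes the NULL row match, but a plain
# index on a can no longer be used for this predicate.
with_sentinel = matching("COALESCE(a, ?) IN ('1', COALESCE(NULL, ?))", (SENTINEL, SENTINEL))
print(split, merged, plain, null_in, with_sentinel)
```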

How do I optimize this MYSQL JOIN Query?

I would simply use a subquery in the SELECT clause for each aggregation:

    SELECT artist.*, country.*, (
            SELECT COUNT(*)
            FROM song
            WHERE song.song_artist_id = artist.artist_id
        ) AS total_songs, (
            SELECT SUM(song_plays)
            FROM song
            WHERE song.song_artist_id = artist.artist_id
        ) AS total_plays, (
            SELECT COUNT(*)
            FROM song
            JOIN lyrics ON song.song_id = lyrics.lyrics_song_id
            WHERE song.song_artist_id = artist.artist_id
        ) AS total_lyrics
    FROM artist
    LEFT JOIN country ON artist.artist_country_id = country.country_id
    WHERE artist_status = 'enabled'
    AND artist.artist_slug = :slug

In MySQL 8.0.14 or later you can use a lateral join:

    SELECT artist.*, country.*, lj.*
    FROM (artist LEFT JOIN country ON artist.artist_country_id = country.country_id), LATERAL (
        SELECT COUNT(song.song_id) total_songs, SUM(song_plays) total_plays, COUNT(lyrics.lyrics_id) total_lyrics
        FROM song
        LEFT JOIN lyrics ON song.song_id = lyrics.lyrics_song_id
        WHERE song.song_artist_id = artist.artist_id
    ) AS lj
    WHERE artist_status = 'enabled'
    AND artist.artist_slug = :slug
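To check the first (correlated subquery) version, here it is run against SQLite from Python with a few made-up rows. The table definitions and the two indexes are hypothetical and include only the columns the query uses. The select list names columns instead of artist.* and country.* so the result is easy to compare. The LATERAL form is left out because it is MySQL-specific syntax. Each aggregate is computed in its own subquery per artist, so joining lyrics cannot inflate the song count or the play total.

```python
import sqlite3

SCHEMA = """
CREATE TABLE country (country_id INTEGER PRIMARY KEY, country_name TEXT);
CREATE TABLE artist (
    artist_id INTEGER PRIMARY KEY, artist_slug TEXT UNIQUE,
    artist_status TEXT, artist_country_id INTEGER
);
CREATE TABLE song (song_id INTEGER PRIMARY KEY, song_artist_id INTEGER, song_plays INTEGER);
CREATE TABLE lyrics (lyrics_id INTEGER PRIMARY KEY, lyrics_song_id INTEGER);
-- Assumed indexes: the subqueries look songs up by artist and lyrics by song.
CREATE INDEX song_by_artist ON song (song_artist_id);
CREATE INDEX lyrics_by_song ON lyrics (lyrics_song_id);
"""

QUERY = """
SELECT artist.artist_id, country.country_name, (
        SELECT COUNT(*) FROM song
        WHERE song.song_artist_id = artist.artist_id
    ) AS total_songs, (
        SELECT SUM(song_plays) FROM song
        WHERE song.song_artist_id = artist.artist_id
    ) AS total_plays, (
        SELECT COUNT(*) FROM song
        JOIN lyrics ON song.song_id = lyrics.lyrics_song_id
        WHERE song.song_artist_id = artist.artist_id
    ) AS total_lyrics
FROM artist
LEFT JOIN country ON artist.artist_country_id = country.country_id
WHERE artist_status = 'enabled'
AND artist.artist_slug = :slug
"""

con = sqlite3.connect(":memory:")
con.executescript(SCHEMA)
con.executescript("""
    INSERT INTO country VALUES (1, 'UK');
    INSERT INTO artist VALUES (1, 'the-band', 'enabled', 1), (2, 'solo', 'enabled', NULL);
    INSERT INTO song VALUES (1, 1, 10), (2, 1, 5), (3, 2, 7);
    INSERT INTO lyrics VALUES (1, 1);
""")


def artist_stats(slug: str) -> list:
    # sqlite3 binds the named :slug placeholder from a dict.
    return con.execute(QUERY, {"slug": slug}).fetchall()


print(artist_stats("the-band"))
```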
