Run several jobs parallelly and Efficiently

As Mark Setchell says: GNU Parallel.

find scripts/ -type f | parallel

If you insists on keeping 8 CPUs free:

find scripts/ -type f | parallel -j-8

But usually it is more efficient simply to use nice as that will give you all 48 cores when no one else needs them:

find scripts/ -type f | nice -n 15 parallel

To learn more:

- Watch the intro video for a quick introduction:

https://www.youtube.com/playlist?list=PL284C9FF2488BC6D1 - Walk through the tutorial (man parallel_tutorial). You command line

with love you for it.

Jenkins - How to run two Jobs parallelly (1 FT Jobs and 1 Selenium Jobs) on same slave node

As for running the job on the same slave, you can use the option Restrict where this project can be run, assuming you have the jenkins slave configured in your setup.

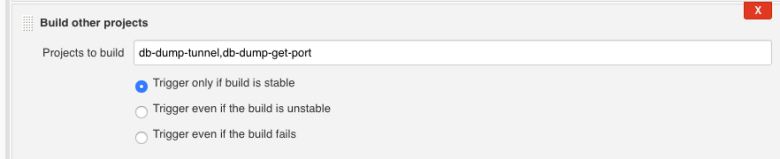

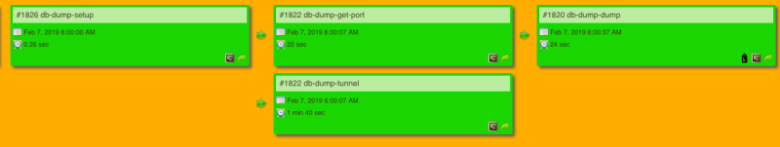

For running the jobs in parallel (are you trying to do this via Jenkinsfile or via freestyle jobs?). For jenkinsfile, you can use the parallel stages feature as described here. For freestyle jobs, I would suggest adding one more job (for example setup job) and use this job to trigger your two jobs at the same time. Here are few screenshots showing one of my pipeline triggering jobs in parallel.

Spring batch - running multiple jobs in parallel

I believe that you can. Since you are new in spring batch (just like me) I would recommend that you go through the domain language of a batch if you haven't done so already.

Then you may start by configuring your own asynchronous JobLauncher. For example:

@Bean

public JobLauncher jobLauncher() throws Exception

{

SimpleJobLauncher jobLauncher = new SimpleJobLauncher();

jobLauncher.setJobRepository(jobRepository);

jobLauncher.setTaskExecutor(new SimpleAsyncTaskExecutor());

jobLauncher.afterPropertiesSet();

return jobLauncher;

}

Pay special attention to SimpleAsyncTaskExecutor (the job repo can be autowired). This configuration will allow asynchronous execution as visualized next:

Compare it with the synchronous execution flow:

Maybe it would additionally help to quote the SimpleJobLauncher java doc:

Simple implementation of the JobLauncher interface. The Spring Core

TaskExecutor interface is used to launch a Job. This means that the

type of executor set is very important. If a SyncTaskExecutor is used,

then the job will be processed within the same thread that called the

launcher. Care should be taken to ensure any users of this class

understand fully whether or not the implementation of TaskExecutor

used will start tasks synchronously or asynchronously. The default

setting uses a synchronous task executor.

More details and configuration options - here.

At the end just create the jobs with different names and/or launch them with different parameter set. Naive example would be:

@Autowired

public JobBuilderFactory jobBuilderFactory;

public Job createJobA() {

return jobBuilderFactory.get("A.txt")

.incrementer(new RunIdIncrementer())

.flow(step1())

.next(step2())

.end()

.build();

}

public Job createJobB() {

return jobBuilderFactory.get("B.txt")

.incrementer(new RunIdIncrementer())

.flow(step1())

.next(step2())

.end()

.build();

}

Executing these jobs with your asynchronous job launcher will create two job instances that which will execute in parallel. This is just one option, that may or may not be suitable for your context.

Efficiency of running many more jobs than CPUs

Speed-wise, you're unlikely to get a performance boost spawning more threads than there are physical threads available unless your threads are spending a lot of time sleeping (in which case it gives your other threads an opportunity to execute). Note that thread sleeps can be implicit and hidden in I/O bound processes and when contending a lock.

It really depends on whether your threads are spending most of their time waiting for something (ex: more data to come from a server, for users to do something, for a file to update, to get access to a locked resource) or just going as fast as they can in parallel. If the latter case, using more threads than physically available will tend to slow you down. The only way having more threads than tasks can ever help throughput is when those threads waste time sleeping, yielding opportunities for other threads to do more while they sleep.

However, it might make things easier for you to just spawn all these tasks and let the operating system deal with the scheduling.

With vastly more threads, you could slow things down potentially (even in terms of throughput). It depends somewhat on how your scheduling and thread pools work and whether those threads spend time sleeping, but a thread is not necessarily a cheap thing to construct, and a context switch with that many threads can become more expensive than your own scheduling process which can have a lot more information about exactly what you want to do and when it's appropriate than the operating system who just sees a boatload of threads that need to be executed.

There's a reason why efficient libraries like Intel's Thread Building Blocks matches the number of threads in the pool to the physical hardware (no more, no less). It tends to be the most efficient route, but it's the most awkward to implement given the need for manual scheduling, work stealing, etc. So sometimes it can be convenient to just spawn a boatload of threads at once, but you typically don't do that as an optimization unless you're I/O bound as pointed out in the other answer and your threads are just spending most of their time sleeping and waiting for input.

If you have needs like this, the easiest way to get the most out of it is to find a good parallel processing library (ex: PPL, TBB, OMP, etc). Then you just write a parallel loop and let the library focus on how to most efficiently deal with the threads and to balance the load between them. With those kinds of cases, you focus on what tasks should do but not necessarily when they execute.

Run a total of N jobs, having M of them running in parallel at any time

You can write your own little scheduler, hiving them off to processors that are done with their current assignment; but at our centre we strongly recommend using gnu parallel, which has already implemented that with an xargs-like syntax.

So for instance, as above, you could do

parallel --max-procs 8 <<EOF

python run.py $arg1

python run.py $arg2

python run.py $arg3

..

EOF

Or, if you had your argument list in a file, you could do something like

cat args.list | parallel --max-procs 8 python run.py

Parallel running of several jobs in a python script

If you go this way, you're getting into windows shell programming, which nobody does. (I mean somebody does it, but they're an extremely small group.)

It would be simplest if you wrote a second python script that loops through the arguments that you want to pass to the second script, and calls a functoin with those arguments.

from subprocess import Popen

from os import mkdir

argfile = open('commandline.txt')

for number, line in enumerate(argfile):

newpath = 'scatter.%03i' % number

mkdir(newpath)

cmd = '../abc.py ' + line.strip()

print 'Running %r in %r' % (cmd, newpath)

Popen(cmd, shell=True, cwd=newpath)

This creates a directory, and runs your command as a separate process in that directory. Since it doesn't wait for the subprocess to finish before starting another, this gives the paralellism you want.

The in-series version just waits before it starts another subprocess.

Add one line at the end of the loop:

p = Popen(cmd, shell=True, cwd=newpath)

p.wait()

Related Topics

How to Fix 'Sudo: No Tty Present and No Askpass Program Specified' Error

How to Measure the Actual Memory Usage of an Application or Process

Bash While Read Loop Extremely Slow Compared to Cat, Why

Defining a Variable With or Without Export

How to Symlink a File in Linux

Use Expect in a Bash Script to Provide a Password to an Ssh Command

How Do the Likely/Unlikely Macros in the Linux Kernel Work and What Is Their Benefit

How to Set the Environmental Variable Ld_Library_Path in Linux

How to 'Grep' a Continuous Stream

Redirect All Output to File in Bash

How to Cat ≪≪Eof ≫≫ a File Containing Code

How to Pass the Password to Su/Sudo/Ssh Without Overriding the Tty

Deploying Yesod to Heroku, Can't Build Statically

Fastest Way to Find Lines of a File from Another Larger File in Bash

How to Upgrade Glibc from Version 2.12 to 2.14 on Centos